Project Description

At SCC, we have a rotating task called COOTW (Commanding Officer of the Week). The COOTW responds to customer requests from Jira and Slack help channels, monitors production systems and does small chores. We usually have documentation that helps the COOTW answer questions and find fixes quickly, but it is spread across GitHub, Trello and the SUSE Support documentation. The aim of this project is to explore the magic of LLMs and create a conversational bot that answers from these sources.

Goal for this Hackweek

- Build data ingestion

Data source:

- SUSE KB docs

- scc github docs

- scc trello knowledge board

- Test out new RAG architecture
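The two goals above (ingestion and retrieval-augmented generation) could be sketched roughly as follows. This is a minimal illustration, not the project's implementation: it uses plain term-count vectors instead of a real embedding model, and the sample documents, chunk size and function names are all assumptions made up for the example.

```python
import math
import re
from collections import Counter


def chunk_text(text, max_words=50):
    """Split a document into chunks of at most max_words words."""
    words = text.split()
    return [" ".join(words[i:i + max_words]) for i in range(0, len(words), max_words)]


def tokenize(text):
    """Lowercase the text and keep only alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a, b):
    """Cosine similarity between two term-count vectors (Counters)."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def build_index(docs):
    """Ingest a mapping of source name -> text into (source, chunk, vector) entries."""
    index = []
    for source, text in docs.items():
        for chunk in chunk_text(text):
            index.append((source, chunk, Counter(tokenize(chunk))))
    return index


def retrieve(index, question, k=2):
    """Return the k chunks most similar to the question."""
    q = Counter(tokenize(question))
    ranked = sorted(index, key=lambda item: cosine(q, item[2]), reverse=True)
    return [(source, chunk) for source, chunk, _ in ranked[:k]]


def build_prompt(question, hits):
    """Combine the retrieved context and the question into one LLM prompt."""
    context = "\n".join(f"[{source}] {chunk}" for source, chunk in hits)
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}"


# Hypothetical sample documents standing in for the three data sources.
docs = {
    "kb": "To reset a registration code, open the subscription page and select regenerate.",
    "github": "The deploy script restarts the background workers after each release.",
    "trello": "If the mirroring job fails, check the disk space on the repository host.",
}
index = build_index(docs)
hits = retrieve(index, "mirroring job fails", k=1)
prompt = build_prompt("mirroring job fails", hits)
```

In a real setup the term-count vectors would be replaced by embeddings from a model, and `prompt` would be sent to the LLM; the retrieve-then-prompt flow stays the same.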

https://gitlab.suse.de/ngetahun/cootwbot

This project is part of:

Hack Week 23 Hack Week 24


Similar Projects

Try out Neovim Plugins supporting AI Providers by enavarro_suse

Description

Experiment with several Neovim plugins that integrate AI model providers such as Gemini and Ollama.

Goals

Evaluate how these plugins enhance the development workflow, how they differ in capabilities, and how smoothly they integrate into Neovim for day-to-day coding tasks.

Resources

- Neovim 0.11.5

- AI-enabled Neovim plugins:

- avante.nvim: https://github.com/yetone/avante.nvim

- Gp.nvim: https://github.com/Robitx/gp.nvim

- parrot.nvim: https://github.com/frankroeder/parrot.nvim

- gemini.nvim: https://dotfyle.com/plugins/kiddos/gemini.nvim

- ...

- Accounts or API keys for AI model providers.

- Local model serving setup (e.g., Ollama)

- Test projects or codebases for practical evaluation:

- OBS: https://build.opensuse.org/

- OBS blog and landing page: https://openbuildservice.org/

- ...

Liz - Prompt autocomplete by ftorchia

Description

Liz is the Rancher AI assistant for cluster operations.

Goals

We want to help users when sending new messages to Liz, by adding an autocomplete feature to complete their requests based on the context.

Example:

- User prompt: "Can you show me the list of p"

- Autocomplete suggestion: "Can you show me the list of p...od in local cluster?"

Example:

- User prompt: "Show me the logs of #rancher-"

- Chat console: It shows a drop-down widget, next to the # character, with the list of available pod names starting with "rancher-".

Technical Overview

- The AI agent should expose a new ws/autocomplete endpoint to proxy autocomplete messages to the LLM.

- The UI extension should be able to display prompt suggestions and allow users to apply the autocomplete to the Prompt via keyboard shortcuts.
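The second example (the `#` drop-down) might be approached with simple prefix matching before any LLM is involved. The sketch below is only an illustration under that assumption; the function names and the pod list are hypothetical and not part of Liz.

```python
import re


def pod_suggestions(prompt, pod_names, limit=5):
    """Return pod names completing a trailing '#prefix' token, or [] if there is none."""
    match = re.search(r"#([\w.-]*)$", prompt)
    if match is None:
        return []
    prefix = match.group(1)
    return sorted(name for name in pod_names if name.startswith(prefix))[:limit]


def apply_suggestion(prompt, suggestion):
    """Replace the trailing '#prefix' token with the chosen pod name."""
    # A function is used as replacement so the name is inserted literally.
    return re.sub(r"#[\w.-]*$", lambda _match: suggestion, prompt)


# Hypothetical pod names as they might be returned by the cluster.
pods = ["rancher-7d9f", "rancher-webhook-5c4b", "coredns-abc12", "fleet-agent-0"]
suggestions = pod_suggestions("Show me the logs of #rancher-", pods)
completed = apply_suggestion("Show me the logs of #rancher-", suggestions[0])
```

Free-text completions such as the first example would still need the LLM behind the autocomplete endpoint; this only covers the resource-name case.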

Resources

Uyuni Health-check Grafana AI Troubleshooter by ygutierrez

Description

This project explores the feasibility of using the open-source Grafana LLM plugin to enhance the Uyuni Health-check tool with LLM capabilities. The idea is to integrate a chat-based "AI Troubleshooter" directly into existing dashboards, allowing users to ask natural-language questions about errors, anomalies, or performance issues.

Goals

- Investigate if and how the grafana-llm-app plug-in can be used within the Uyuni Health-check tool.

- Investigate if this plug-in can be used to query LLMs for troubleshooting scenarios.

- Evaluate support for local LLMs and external APIs through the plugin.

- Evaluate if and how the Uyuni MCP server could be integrated as another source of information.

Resources

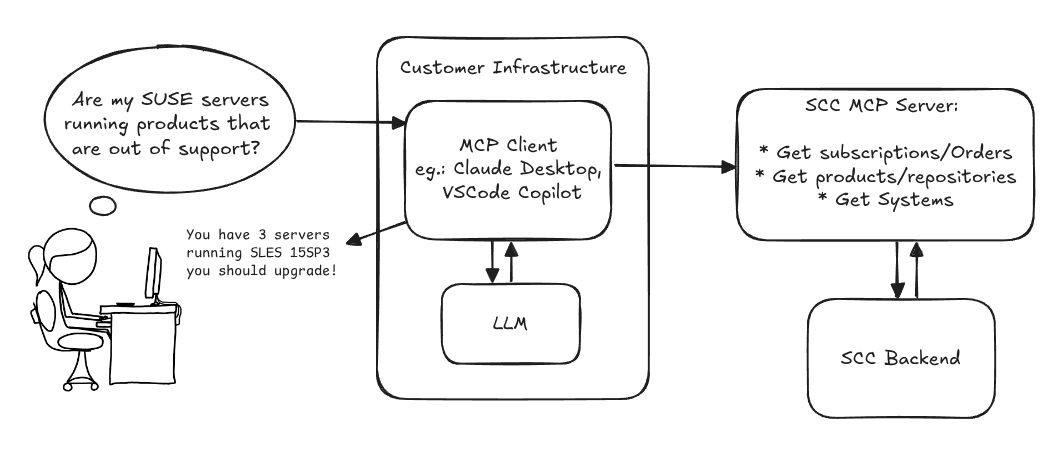

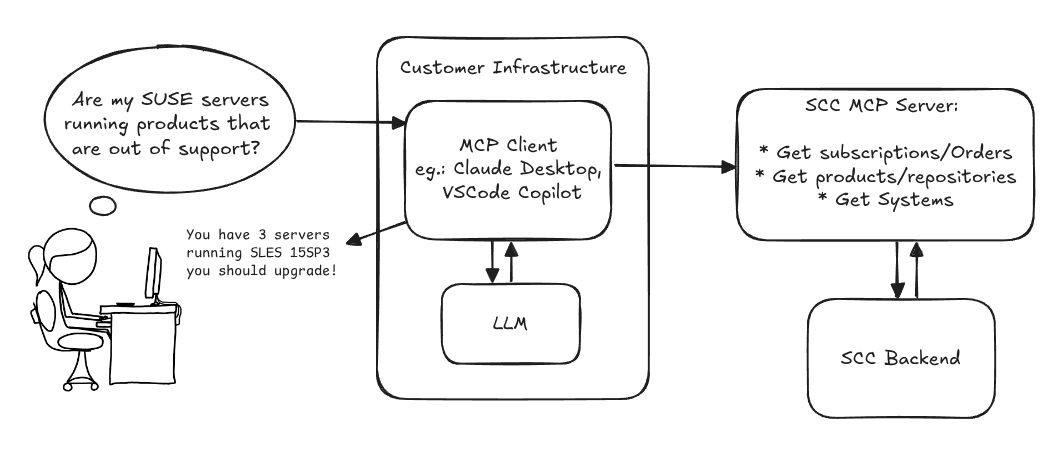

MCP Server for SCC by digitaltomm

Description

Provide an MCP Server implementation for customers to access data on scc.suse.com via MCP protocol. The core benefit of this MCP interface is that it has direct (read) access to customer data in SCC, so the AI agent gets enhanced knowledge about individual customer data, like subscriptions, orders and registered systems.

Architecture

Goals

We want to demonstrate a proof of concept that connects any AI agent, for example gemini-cli or codex, to the SCC MCP server, enabling users to ask questions about their SCC inventory.

For this Hackweek, we target that users get proper responses to these example questions:

- Which of my currently active systems are running products that are out of support?

- Do I have ready to use registration codes for SLES?

- What are the latest 5 released patches for SLES 15 SP6? Output as a list with release date, patch name, affected package names and fixed CVEs.

- Which versions of kernel-default are available on SLES 15 SP6?

Technical Notes

Similar to the organization APIs, this can expose to customers data about their subscriptions, orders, systems and products. Authentication should be done by organization credentials, similar to what needs to be provided to RMT/MLM. Customers can connect to the SCC MCP server from their own MCP-compatible client and Large Language Model (LLM), so no third party is involved.
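As a rough illustration of what a client sends, MCP tool invocations are JSON-RPC 2.0 messages with the method `tools/call`. The sketch below builds such a request and reads the text out of a result. The tool name `list_systems`, its arguments, the sample response and the use of HTTP Basic auth for the organization credentials are assumptions for the example, not the actual SCC interface.

```python
import base64
import json


def basic_auth_header(username, password):
    """Encode organization credentials as an HTTP Basic auth header (assumed scheme)."""
    token = base64.b64encode(f"{username}:{password}".encode()).decode()
    return {"Authorization": f"Basic {token}"}


def tool_call_request(request_id, tool_name, arguments):
    """Build an MCP 'tools/call' request as a JSON-RPC 2.0 message."""
    return {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "tools/call",
        "params": {"name": tool_name, "arguments": arguments},
    }


def text_from_result(response):
    """Concatenate the text parts of a 'tools/call' result."""
    parts = response.get("result", {}).get("content", [])
    return "\n".join(p["text"] for p in parts if p.get("type") == "text")


# Hypothetical tool name and arguments; the real server may expose different ones.
request = tool_call_request(1, "list_systems", {"status": "active"})
headers = basic_auth_header("ORG_USER", "ORG_PASSWORD")
body = json.dumps(request)

# Hypothetical response in the shape MCP uses for tool results.
sample_response = {
    "jsonrpc": "2.0",
    "id": 1,
    "result": {"content": [{"type": "text", "text": "2 active systems"}], "isError": False},
}
answer = text_from_result(sample_response)
```

In practice an MCP-compatible client such as Gemini CLI builds these messages itself; the sketch only shows what travels between client and server.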

Milestones

[x] Basic MCP API setup

MCP endpoints

[x] Products / Repositories

[x] Subscriptions / Orders

[x] Systems

[x] Packages

[x] Document usage with Gemini CLI, Codex

Resources

Gemini CLI setup:

~/.gemini/settings.json:

SUSE Edge Image Builder MCP by eminguez

Description

Based on my other hackweek project, SUSE Edge Image Builder's Json Schema, I would also like to build an MCP that can generate EIB config files the AI way.

Realistically I don't think I'll be able to have something consumable at the end of this hackweek but at least I would like to start exploring MCPs, the difference between an API and MCP, etc.

Goals

- Familiarize myself with MCPs

- Unrealistic: Have an MCP that can generate an EIB config file

Resources

Result

https://github.com/e-minguez/eib-mcp

I've extensively used antigravity and its agent mode to code this. This heavily uses https://hackweek.opensuse.org/25/projects/suse-edge-image-builder-json-schema for the MCP to be built.

I've ended up learning a lot of things about "prompting", json schemas in general, some golang, MCPs and AI in general :)

Example:

Generate an Edge Image Builder configuration for an ISO image based on slmicro-6.2.iso, targeting x86_64 architecture. The output name should be 'my-edge-image' and it should install to /dev/sda. It should deploy a 3 nodes kubernetes cluster with nodes names "node1", "node2" and "node3" as:

* hostname: node1, IP: 1.1.1.1, role: initializer

* hostname: node2, IP: 1.1.1.2, role: agent

* hostname: node3, IP: 1.1.1.3, role: agent

The kubernetes version should be k3s 1.33.4-k3s1 and it should deploy a cert-manager helm chart (the latest one available according to https://cert-manager.io/docs/installation/helm/). It should create a user called "suse" with password "suse" and set ntp to "foo.ntp.org". The VIP address for the API should be 1.2.3.4

Generates:

```
apiVersion: "1.0"
image:
  arch: x86_64
  baseImage: slmicro-6.2.iso
  imageType: iso
  outputImageName: my-edge-image
kubernetes:
  helm:
    charts:
      - name: cert-manager
        repositoryName: jetstack
```

Explore LLM evaluation metrics by thbertoldi

Description

Learn the best practices for evaluating LLM performance with an open-source framework such as DeepEval.

Goals

Curate the knowledge learned during practice and present it to colleagues.

-> Maybe publish a blog post on SUSE's blog?

Resources

https://deepeval.com

https://docs.pactflow.io/docs/bi-directional-contract-testing

Bugzilla goes AI - Phase 1 by nwalter

Description

This project, Bugzilla goes AI, aims to boost developer productivity by creating an autonomous AI bug agent during Hackweek. The primary goal is to reduce the time employees spend triaging bugs by integrating Ollama to summarize issues, recommend next steps, and push focused daily reports to a Web Interface.

Goals

To reduce employee time spent on Bugzilla by implementing an AI tool that triages and summarizes bug reports, providing actionable recommendations to the team via Web Interface.
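A summarization request to a local Ollama server could look roughly like the sketch below, which uses Ollama's `/api/generate` endpoint with streaming disabled. The bug fields, the prompt wording and the model name `llama3` are assumptions for illustration and not taken from the project.

```python
import json
import urllib.request

OLLAMA_URL = "http://localhost:11434/api/generate"  # Ollama's default local endpoint


def build_triage_prompt(bug):
    """Turn a bug record (hypothetical fields) into a triage prompt."""
    return (
        "Summarize the following bug report in two sentences and "
        "recommend one next step.\n\n"
        f"Bug {bug['id']}: {bug['summary']}\n"
        f"Status: {bug['status']}\n"
        f"Latest comment: {bug['last_comment']}\n"
    )


def build_request(bug, model="llama3"):
    """Prepare the HTTP request; the model name is an assumption."""
    payload = {"model": model, "prompt": build_triage_prompt(bug), "stream": False}
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode(),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def summarize(bug, model="llama3"):
    """Send the request; needs a running Ollama server with the model pulled."""
    with urllib.request.urlopen(build_request(bug, model)) as resp:
        return json.loads(resp.read())["response"]


# Hypothetical bug record, e.g. as fetched from the Bugzilla API.
bug = {
    "id": 12345,
    "summary": "Installer crashes when the disk is encrypted",
    "status": "NEW",
    "last_comment": "Reproduced on two machines, logs attached.",
}
request = build_request(bug)
```

Calling `summarize(bug)` returns the model's text, which a daily report could then collect per bug.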

Project Charter

Description

Project Achievements during Hackweek

In this file you can read about what we achieved during Hackweek.

"what is it" file and directory analysis via MCP and local LLM, for console and KDE by rsimai

Description

Users sometimes wonder what the files or directories they find on their local PC are good for. If they can't tell from the filename or metadata, there should be an easy way to quickly analyze the content and at least guess its meaning. An LLM could help with that, through the use of a filesystem MCP and to-text converters for typical file types. Ideally this is integrated into the desktop environment but works from a console as well. All data is processed locally or "on premise"; no artifacts remain on or leave the system.

Goals

- The user can run a command from the console, to check on a file or directory

- The filemanager contains the "analyze" feature within the context menu

- The local LLM could serve for other use cases where privacy matters

TBD

- Find or write capable one-shot and interactive MCP client

- Find or write simple+secure file access MCP server

- Create local LLM service with appropriate footprint, containerized

- Shell command with options

- KDE integration (Dolphin)

- Package

- Document
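The console part of the idea could start from something like the sketch below, which gathers metadata and a short content sample and turns them into a prompt for the local LLM. It reads the file directly instead of going through a filesystem MCP server, and the function names, sample size and prompt wording are assumptions for illustration.

```python
import os
import tempfile


def describe_path(path, max_bytes=2048):
    """Collect metadata and a short content sample for a file or directory."""
    info = {"path": path, "is_dir": os.path.isdir(path)}
    if info["is_dir"]:
        info["entries"] = sorted(os.listdir(path))[:20]
        return info
    info["size"] = os.path.getsize(path)
    with open(path, "rb") as handle:
        sample = handle.read(max_bytes)
    # Treat the sample as binary if it contains NUL bytes.
    info["binary"] = b"\x00" in sample
    info["sample"] = "" if info["binary"] else sample.decode("utf-8", errors="replace")
    return info


def build_prompt(info):
    """Turn the collected facts into a question for the LLM."""
    if info["is_dir"]:
        body = "Directory entries: " + ", ".join(info["entries"])
    elif info["binary"]:
        body = f"Binary file, {info['size']} bytes"
    else:
        body = f"Text file, {info['size']} bytes, starts with:\n{info['sample']}"
    return f"Explain briefly what {os.path.basename(info['path'])} is likely for.\n{body}"


# Demonstration on a throwaway directory so nothing on the real system is touched.
with tempfile.TemporaryDirectory() as tmp:
    note = os.path.join(tmp, "notes.txt")
    with open(note, "w", encoding="utf-8") as handle:
        handle.write("meeting minutes 2024")
    file_info = describe_path(note)
    dir_info = describe_path(tmp)
    prompt = build_prompt(file_info)
```

Binary formats would need the to-text converters mentioned above before their content is useful to the model.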

Resources

Backporting patches using LLM by jankara

Description

Backporting Linux kernel fixes (either for CVE issues or as part of the general git-fixes workflow) is boring and mostly mechanical work (dealing with changes in context, renamed variables, new helper functions etc.). The idea of this project is to explore the use of LLMs for backporting Linux kernel commits to SUSE kernels.

Goals

- Create safe environment allowing LLM to run and backport patches without exposing the whole filesystem to it (for privacy and security reasons).

- Write prompt that will guide LLM through the backporting process. Fine tune it based on experimental results.

- Explore success rate of LLMs when backporting various patches.
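One way to limit what the LLM can see is to run the agent in a container that mounts only the kernel tree. The sketch below assembles such a `docker run` command line; the image name `backporter:latest` and the `/work` mount point are hypothetical, and the container in the project's repository may be set up differently.

```python
import os
import shlex


def sandbox_command(kernel_tree, image="backporter:latest", api_key_var="GEMINI_API_KEY"):
    """Build a docker command that exposes only the kernel tree to the container."""
    tree = os.path.abspath(kernel_tree)
    return [
        "docker", "run", "--rm", "--interactive", "--tty",
        # Only the kernel tree is mounted; the rest of the host filesystem stays hidden.
        "--volume", f"{tree}:/work",
        "--workdir", "/work",
        # Pass the API key through from the host environment without writing it to disk.
        "--env", api_key_var,
        image,  # hypothetical image name
    ]


command = sandbox_command("/src/linux")
printable = shlex.join(command)
```

The list form can be handed to `subprocess.run(command)` directly, while `printable` is convenient for logging or copy and paste.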

Resources

- Docker

- Gemini CLI

Repository

The current version of the container, with some instructions for use, is at: https://gitlab.suse.de/jankara/gemini-cli-backporter

Song Search with CLAP by gcolangiuli

Description

Contrastive Language-Audio Pretraining (CLAP) is an open-source library that enables the training of a neural network on both audio and text descriptions, making it possible to search for audio using text input. Several pre-trained models for song search are already available on Hugging Face.

Goals

Evaluate how CLAP can be used for song searching and determine which types of queries yield the best results by developing a Minimum Viable Product (MVP) in Python. Based on the results of this MVP, future steps could include:

- Music Tagging;

- Free text search;

- Integration with an LLM (for example, with MCP or the OpenAI API) for music suggestions based on your own library.

The code for this project will be entirely written using AI to better explore and demonstrate AI capabilities.

Result

In this MVP we implemented:

- Async Song Analysis with Clap model

- Free Text Search of the songs

- Similar song search based on vector representation

- Containerised version with web interface
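The similar-song search listed above can be illustrated with cosine similarity over embedding vectors. The sketch below uses tiny made-up three-dimensional vectors in place of real CLAP embeddings, and the song titles and function names are hypothetical.

```python
import math


def cosine_similarity(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def most_similar(query_vector, library, k=3, exclude=None):
    """Rank the library (title -> vector) by similarity to the query vector."""
    scored = [
        (title, cosine_similarity(query_vector, vector))
        for title, vector in library.items()
        if title != exclude
    ]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)[:k]


# Made-up embeddings; a real CLAP model produces much longer vectors.
library = {
    "calm_piano": [0.9, 0.1, 0.0],
    "soft_strings": [0.8, 0.2, 0.1],
    "heavy_drums": [0.0, 0.1, 0.9],
}
similar = most_similar(library["calm_piano"], library, k=1, exclude="calm_piano")
```

Because CLAP places text and audio in the same embedding space, a free-text query can use the same ranking by passing the text embedding as `query_vector`.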

We also documented what went well and what can be improved in the use of AI.

You can have a look at the result here:

Future work could focus on improving the performance and stability of the analysis.

References

- CLAP: The main model being researched;

- huggingface: Pre-trained models for CLAP;

- Free Music Archive: Creative Commons songs that can be used for testing;

Kubernetes-Based ML Lifecycle Automation by lmiranda

Description

This project aims to build a complete end-to-end Machine Learning pipeline running entirely on Kubernetes, using Go, and containerized ML components.

The pipeline will automate the lifecycle of a machine learning model, including:

- Data ingestion/collection

- Model training as a Kubernetes Job

- Model artifact storage in an S3-compatible registry (e.g. Minio)

- A Go-based deployment controller that automatically deploys new model versions to Kubernetes using Rancher

- A lightweight inference service that loads and serves the latest model

- Monitoring of model performance and service health through Prometheus/Grafana

The outcome is a working prototype of an MLOps workflow that demonstrates how AI workloads can be trained, versioned, deployed, and monitored using the Kubernetes ecosystem.

Goals

By the end of Hack Week, the project should:

Produce a fully functional ML pipeline running on Kubernetes with:

- Data collection job

- Training job container

- Storage and versioning of trained models

- Automated deployment of new model versions

- Model inference API service

- Basic monitoring dashboards

Showcase a Go-based deployment automation component, which scans the model registry and automatically generates & applies Kubernetes manifests for new model versions.
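The registry scan and manifest generation might work along the lines of the sketch below. The project plans this component in Go; Python is used here only for illustration, and the object key layout (`<model>/<version>/...`), the image name and the `MODEL_VERSION` variable are assumptions.

```python
import json
import re


def latest_version(object_keys, model_name):
    """Pick the highest semantic version from keys like 'churn/1.2.0/model.bin'."""
    pattern = re.compile(rf"^{re.escape(model_name)}/(\d+)\.(\d+)\.(\d+)/")
    versions = []
    for key in object_keys:
        match = pattern.match(key)
        if match:
            versions.append(tuple(int(part) for part in match.groups()))
    if not versions:
        return None
    # Tuples compare numerically, so 1.10.0 ranks above 1.9.3.
    return ".".join(str(part) for part in max(versions))


def deployment_manifest(model_name, version, image="registry.example.com/inference:latest"):
    """Generate a Kubernetes Deployment that serves the given model version."""
    labels = {"app": f"{model_name}-inference"}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": f"{model_name}-inference", "labels": labels},
        "spec": {
            "replicas": 1,
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [{
                        "name": "inference",
                        "image": image,  # hypothetical image name
                        "env": [{"name": "MODEL_VERSION", "value": version}],
                    }]
                },
            },
        },
    }


# Hypothetical listing of the model bucket.
keys = [
    "churn/1.2.0/model.bin",
    "churn/1.10.0/model.bin",
    "churn/1.9.3/model.bin",
    "other/2.0.0/model.bin",
]
version = latest_version(keys, "churn")
manifest = deployment_manifest("churn", version)
manifest_json = json.dumps(manifest, indent=2)
```

The resulting JSON can be applied as is, since Kubernetes accepts JSON manifests as well as YAML.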

Enable continuous improvement by making the system modular and extensible (e.g., additional models, metrics, autoscaling, or drift detection can be added later).

Prepare a short demo explaining the end-to-end process and how new models flow through the system.

Resources

Updates

- Training pipeline and datasets

- Inference Service py
