Join the Gitter channel! https://gitter.im/uyuni-project/hackweek

Uyuni is a configuration and infrastructure management tool that saves you time and headaches when you have to manage and update tens, hundreds or even thousands of machines. It also manages configuration, can run audits, build container images, monitor systems and much more!

As far as I know, a few distributions are currently either completely untested on Uyuni or SUSE Manager, or have not been tested for a long time. It would be interesting to find out how hard it is to work with them and, if possible, fix whatever is broken.

For newcomers, the easiest distributions are those based on DEB or RPM packages. Distributions with other package formats are doable, but will require adapting the Python and Java code to be able to sync and analyze such packages (and if salt does not support those packages, it will need changes as well). So if you want a distribution with other packages, make sure you are comfortable handling such changes.

No developer experience? No worries! We have had non-developer contributors in the past, and we are ready to help as long as you are willing to learn. If you don't want to code at all, you can also help us prepare the documentation once someone else has the initial code ready, or you could help with testing :-)

The idea is to test Salt (including bootstrapping with the bootstrap script) and Salt-ssh clients.

To consider that a distribution has basic support, we should cover at least (points 3-6 are to be tested for both salt minions and salt ssh minions):

- Reposync (this will require using spacewalk-common-channels and adding channels to the .ini file)

- Onboarding (salt minion from UI, salt minion from bootstrap script, and salt-ssh minion) (this will probably require adding OS to the bootstrap repository creator)

- Package management (install, remove, update...)

- Patching

- Applying any basic salt state (including a formula)

- Salt remote commands

- Bonus point: Java part for product identification, and monitoring enablement

- Bonus point: sumaform enablement (https://github.com/uyuni-project/sumaform)

- Bonus point: Documentation (https://github.com/uyuni-project/uyuni-docs)

- Bonus point: testsuite enablement (https://github.com/uyuni-project/uyuni/tree/master/testsuite)

If something is breaking: we can try to fix it, but the main idea is to research how well supported it is right now. Beyond that, it's up to each project member how much to hack :-)

- If you don't have knowledge about some of the steps: ask the team

- If you still don't know what to do: switch to another distribution and keep testing.

This card is for EVERYONE, not just developers. Seriously! We have had people from other teams who were not developers helping, and they added support for Debian and new SUSE Linux Enterprise and openSUSE Leap versions :-)

In progress/done for Hack Week 25

Guide

We started writing a Guide: Adding a new client GNU Linux distribution to Uyuni at https://github.com/uyuni-project/uyuni/wiki/Guide:-Adding-a-new-client-GNU-Linux-distribution-to-Uyuni, to make things easier for everyone, especially those not too familiar with Uyuni or not technical.

openSUSE Leap 16.0

The distribution we all love!

https://en.opensuse.org/openSUSE:Roadmap#DRAFTScheduleforLeap16.0

Current Status: We started last year, and it's now complete for Hack Week 25! :-D

- [W] Reposync (this will require using spacewalk-common-channels and adding channels to the .ini file). NOTE: Done. The client tools for SLMicro6 are used, as those for SLE16.0/openSUSE Leap 16.0 are not available yet.

- [W] Onboarding (salt minion from UI, salt minion from bootstrap script, and salt-ssh minion) (this will probably require adding OS to the bootstrap repository creator)

- [W] Package management (install, remove, update...). Works, even reboot requirement detection.

- [W] Patching (if patch information is available, could require writing some code to parse it, but IIRC we have support for Ubuntu already). Works, tested with an openssh patch.

- [W] Applying any basic salt state (including a formula). Locale formula to adjust TZ to UTC, and apply highstate.

- [W] Salt remote commands

- [W] Bonus point: Java part for product identification.

- [I] Bonus point: sumaform enablement (https://github.com/uyuni-project/sumaform). No time.

- [W] Bonus point: Documentation (https://github.com/uyuni-project/uyuni-docs). https://github.com/uyuni-project/uyuni-docs/pull/4566

- [I] Bonus point: testsuite enablement (https://github.com/uyuni-project/uyuni/tree/master/testsuite). No time.

Resources:

- Research card: https://github.com/uyuni-project/uyuni-docs/pull/3468

- PRs: https://github.com/uyuni-project/uyuni/pull/11120 and https://github.com/uyuni-project/uyuni/pull/11265

- PR for doc: https://github.com/uyuni-project/uyuni-docs/pull/4566

Debian 13 and Raspberry Pi OS 13

The new version of the beloved Debian GNU/Linux OS

Current Status: While we could not progress with the onboarding, there's a PR for the code and two PRs for the doc, in preparation for the fix on Salt.

- [W] Reposync (this will require using spacewalk-common-channels and adding channels to the .ini file)

- [W] Onboarding (salt minion from UI, salt minion from bootstrap script, and salt-ssh minion) (this will probably require adding OS to the bootstrap repository creator)

- [W] Package management (install, remove, update...)

- [I] Patching (if patch information is available, could require writing some code to parse it, but IIRC we have support for Ubuntu already). We don't support patches yet.

- [W] Applying any basic salt state (including a formula)

- [W] Salt remote commands

- [I] Bonus point: Java part for product identification, and monitoring enablement. Change done, but can't be tested as this requires sumatoolbox.

- [I] Bonus point: sumaform enablement (https://github.com/uyuni-project/sumaform). Not enough time.

- [W] Bonus point: Documentation (https://github.com/uyuni-project/uyuni-docs)

- [I] Bonus point: testsuite enablement (https://github.com/uyuni-project/uyuni/tree/master/testsuite). Not enough time.

PR: https://github.com/uyuni-project/uyuni/pull/11279

PRs for doc: https://github.com/uyuni-project/uyuni-docs/pull/4568 and https://github.com/uyuni-project/uyuni-docs/pull/4569

Zorin OS

In particular the Education version (https://help.zorin.com/docs/getting-started/system-requirements/ and https://zorin.com/os/education/)

Because of the ID_LIKE and the UBUNTU_CODENAME values, it should be compatible with the bundle and client tools for Ubuntu 22.04 out of the box.

- [W] Reposync (this will require using spacewalk-common-channels and adding channels to the .ini file)

- [W] Onboarding (salt minion from UI, salt minion from bootstrap script, and salt-ssh minion). WORKS, but requires a patch for Salt.

- [W] Package management (install, remove, update...)

- [ ] Patching (if patch information is available, could require writing some code to parse it, but IIRC we have support for Ubuntu already)

- [ ] Applying any basic salt state (including a formula)

- [ ] Salt remote commands

- [ ] Bonus point: Java part for product identification, and monitoring enablement

- [ ] Bonus point: sumaform enablement (https://github.com/uyuni-project/sumaform)

- [ ] Bonus point: Documentation (https://github.com/uyuni-project/uyuni-docs)

- [ ] Bonus point: testsuite enablement (https://github.com/uyuni-project/uyuni/tree/master/testsuite)

It's a distro based on Ubuntu. This is the content of /etc/os-release:

PRETTY_NAME="Zorin OS 17.2"

NAME="Zorin OS"

VERSION_ID="17"

VERSION="17.2"

VERSION_CODENAME=jammy

ID=zorin

ID_LIKE="ubuntu debian"

HOME_URL="https://zorin.com/os/"

SUPPORT_URL="https://help.zorin.com/"

BUG_REPORT_URL="https://zorin.com/os/feedback/"

PRIVACY_POLICY_URL="https://zorin.com/legal/privacy/"

UBUNTU_CODENAME=jammy

PR: https://github.com/uyuni-project/uyuni/pull/9510
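The compatibility reasoning for Zorin OS can be sketched in a few lines of Python. This is only an illustration, not code from Uyuni or Salt: the helper names `parse_os_release` and `ubuntu_base`, and the small codename table, are assumptions made for this example.

```python
# Illustrative sketch only; not Uyuni or Salt code.
# Helper names and the codename table are assumptions for this example.

UBUNTU_RELEASES = {"focal": "20.04", "jammy": "22.04"}


def parse_os_release(text):
    """Parse os-release style KEY=value lines into a dict."""
    fields = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        fields[key] = value.strip().strip('"')
    return fields


def ubuntu_base(fields):
    """Return the Ubuntu release a derivative is based on, or None."""
    if "ubuntu" not in fields.get("ID_LIKE", "").split():
        return None
    return UBUNTU_RELEASES.get(fields.get("UBUNTU_CODENAME", ""))


# Subset of the /etc/os-release content shown above.
ZORIN = '''
NAME="Zorin OS"
VERSION_ID="17"
ID=zorin
ID_LIKE="ubuntu debian"
UBUNTU_CODENAME=jammy
'''

print(ubuntu_base(parse_os_release(ZORIN)))  # prints 22.04
```

A distribution that does not list `ubuntu` in `ID_LIKE` yields `None` here, which is the case where reusing the Ubuntu client tools would need a closer look.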

Pending

FUSS

FUSS is a complete GNU/Linux solution (server, client and desktop/standalone) based on Debian for managing an educational network.

https://fuss.bz.it/

Seems to be a Debian 12 derivative, so adding it could be quite easy.

- [W] Reposync (this will require using spacewalk-common-channels and adding channels to the .ini file)

- [W] Onboarding (salt minion from UI, salt minion from bootstrap script, and salt-ssh minion) (this will probably require adding OS to the bootstrap repository creator) --> Working for all 3 options (salt minion UI, salt minion bootstrap script and salt-ssh minion from the UI).

- [W] Package management (install, remove, update...) --> Installing a new package works, needs to test the rest.

- [I] Patching (if patch information is available, could require writing some code to parse it, but IIRC we have support for Ubuntu already). No patches detected. Do we support patches for Debian at all?

- [W] Applying any basic salt state (including a formula)

- [W] Salt remote commands

- [ ] Bonus point: Java part for product identification, and monitoring enablement

- [ ] Bonus point: sumaform enablement (https://github.com/uyuni-project/sumaform)

- [ ] Bonus point: Documentation (https://github.com/uyuni-project/uyuni-docs)

- [ ] Bonus point: testsuite enablement (https://github.com/uyuni-project/uyuni/tree/master/testsuite)

PR: https://github.com/uyuni-project/uyuni/pull/9502

It's basically a Debian 12. I don't even know whether it makes sense to separate it.

root@fuss-12:/tmp# cat /etc/os-release

PRETTY_NAME="Debian GNU/Linux 12 (bookworm)"

NAME="Debian GNU/Linux"

VERSION_ID="12"

VERSION="12 (bookworm)"

VERSION_CODENAME=bookworm

ID=debian

HOME_URL="https://www.debian.org/"

SUPPORT_URL="https://www.debian.org/support"

BUG_REPORT_URL="https://bugs.debian.org/"

root@fuss-12:/tmp# venv-salt-call grains.items| grep -v osvw|grep -A 1 " os"

    os:
        Debian
    os_family:
        Debian
    osarch:
        amd64
    oscodename:
        bookworm
    osfinger:
        Debian-12
    osfullname:
        Debian GNU/Linux
    osmajorrelease:
        12
    osrelease:
        12
    osrelease_info:
        - 12

openSUSE MicroOS

PRs: https://github.com/uyuni-project/uyuni/pull/6550 and https://github.com/uyuni-project/uyuni/pull/7858

Doc for the PoC: https://github.com/uyuni-project/uyuni/wiki/openSUSE-Tumbleweed-and-openSUSE-MicroOS-for-Uyuni

Check the doc to see what works, and what does not

NOTE: Broken for now because of SELinux, see the link above about the PoC.

- [W] Reposync (this will require using spacewalk-common-channels and adding channels to the .ini file)

- [W] Onboarding (salt minion from UI, salt minion from bootstrap script, and salt-ssh minion) (this will probably require adding OS to the bootstrap repository creator)

- [P] Package management (install, remove, update...). See the doc above.

- [ ] Patching. No tests available for testing.

- [P] Applying any basic salt state (including a formula). See the doc above.

- [W] Salt remote commands

- [ ] Bonus point: Java part for product identification, and monitoring enablement

- [ ] Bonus point: sumaform enablement (https://github.com/uyuni-project/sumaform)

- [ ] Bonus point: Documentation (https://github.com/uyuni-project/uyuni-docs)

- [ ] Bonus point: testsuite enablement (https://github.com/uyuni-project/uyuni/tree/master/testsuite)

A transactional OS, similar to SLE Micro but based on openSUSE Tumbleweed. Supporting it could be problematic because of the extra work needed for the bundle, but we can at least give it a try.

Astra Linux

PR: https://github.com/uyuni-project/uyuni/pull/1915

Originally it was a GNU/Linux developed for the Russian army and intelligence agencies, but it now offers a free (as in free beer) version for general usage. It is based on Debian GNU/Linux, so maybe getting some basic support will not be that hard.

Our team in Russia told me about it, so I joined their Telegram support channel some months ago.

Right now there are more than 1600 users at their Telegram channel (linked to Matrix.org with a bridge, which I suspect is what most of the users use) with a lot of traffic each day talking not only about support, but also about news regarding the distribution.

The distribution is now a Linux Foundation Corporate Member (Silver).

- [W] Reposync (this will require using spacewalk-common-channels and adding channels to the .ini file)

  - After adding the repositories to spacewalk-common-channels, it works fine.

- [W] Onboarding (both salt and salt-ssh)

  - WebUI works (salt and salt-ssh), with some caveats: https://github.com/uyuni-project/uyuni/pull/1915

  - I can bootstrap using a script.

- [W] Package management

  - Works!

- [ ] Patching

  - Can't test yet, no patches available.

- [W] Applying any basic salt state

  - Works!

- [W] Salt remote commands

  - Works!

Already implemented in past Hack Weeks

- Amazon Linux 2023

- Raspbian/Raspberry Pi OS 12

- openEuler (22.03)

- openSUSE Leap Micro

- openSUSE Leap 15.5

- SUSE Linux Enterprise 15 SP5

- Ubuntu 22.04

- AlmaLinux9

- Oracle Linux 9

- AlmaLinux8

- Alibaba Cloud Linux 2

- Oracle Linux 6/7/8

- Debian 10/9

Others

Interested in testing other distributions? Ping me and let's try.

[W] = works

[F] = Fails

[P] = in Progress

[I] = Ignored (state the reason)

Looking for hackers with the skills:

uyuni susemanager distribution linux management testing java bash python terraform cucumber obs documentation salt

This project is part of:

Hack Week 19 Hack Week 20 Hack Week 21 Hack Week 22 Hack Week 23 Hack Week 24 Hack Week 25

Activity

Comments

-

almost 6 years ago by nicoladm | Reply

Hi, nice project. I was trying to help with the Debian 9 onboarding testing (https://github.com/uyuni-project/uyuni/issues/1356), since the process is still fairly manual. It looks like mgr-create-bootstrap-repo needs tweaking (https://github.com/uyuni-project/uyuni/issues/1495). I am not a developer, but I can probably tweak scripts and help with some guidance.

-

almost 6 years ago by juliogonzalezgil | Reply

Hi @nicoladm

If that's the only thing that's failing, it's not so hard to fix.

The packages to be added to a bootstrap repository by mgr-create-bootstrap-repo are at https://github.com/uyuni-project/uyuni/blob/master/susemanager/src/mgr_bootstrap_data.py

You just need to add a new variable PKGLISTDEBIAN9 with the list of packages required to bootstrap with salt (salt itself and all dependencies). Most probably the list will be similar to the one for Ubuntu 18.04.

Then at DATA you need a new entry debian9-amd64-uyuni (similar to ubuntu-18.04-amd64-uyuni) using the base channel debian-9-pool-amd64 and adapting the rest.

And finally, you may need to adjust https://github.com/uyuni-project/uyuni/tree/master/susemanager-utils/susemanager-sls/salt/bootstrap (specifically init.sls) if the bootstrap procedure itself fails to find the repository.

It would be good if you can add both Debian9 and Debian10 :-)
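As a rough illustration of the first two steps for Debian 9, the additions could look something like the sketch below. The dictionary keys (`BASECHANNEL`, `PKGLIST`, `DEST`, `TYPE`) and the destination path are assumptions made for this example and should be checked against the existing Ubuntu 18.04 entry in the real file; the package list is a shortened, illustrative subset.

```python
# Hypothetical sketch of additions to the bootstrap data file.
# Key names and values are assumptions; copy the real structure from the
# existing ubuntu-18.04-amd64-uyuni entry instead of trusting this sketch.

# Packages needed to bootstrap with salt (salt itself plus dependencies).
# Shortened, illustrative list.
PKGLISTDEBIAN9 = [
    "dctrl-tools",
    "libzmq5",
    "python-jinja2",
    "python-msgpack",
    "python-tornado",
    "python-yaml",
    "python-zmq",
    "salt-common",
    "salt-minion",
]

DATA = {
    "debian9-amd64-uyuni": {
        "BASECHANNEL": "debian-9-pool-amd64",
        "PKGLIST": PKGLISTDEBIAN9,
        "DEST": "/srv/www/htdocs/pub/repositories/debian/9/bootstrap/",
        "TYPE": "deb",
    },
}

entry = DATA["debian9-amd64-uyuni"]
print(entry["BASECHANNEL"], len(entry["PKGLIST"]), "packages")
```

Keeping the package list in its own variable makes it easy to reuse or extend for a Debian 10 entry later.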

-

almost 6 years ago by nicoladm | Reply

Systems used:

- KVM VM openSUSE Leap 15.1 with Uyuni 2020.01

- KVM VM Debian 9.9

- Salt minion version: salt-minion_2019.2.0+ds-1_all.deb

NOTE: As mentioned by mateiw on GitHub, the following repo should contain the patch for the salt-minion deb package that is supposed to fix the problems related to removing/disabling Debian repos during the bootstrap, hence I am using this patched salt version (https://build.opensuse.org/package/show/systemsmanagement:saltstack:products:testing:debian/salt): https://download.opensuse.org/repositories/systemsmanagement:/saltstack:/products:/testing:/debian/Debian_10/

Debian 9 repos synced successfully, created a test/qa channel using the Content lifecycle section and created an activation key (1-qa-debian9-test) with the qa channels added to it.

    spacecmd softwarechannel_listchildchannels
    debian-9-amd64-main-security
    debian-9-amd64-main-updates
    debian9-opensuse-salt
    debian9-servers-qa-debian9-debian-9-amd64-main-security
    debian9-servers-qa-debian9-debian-9-amd64-main-updates
    debian9-servers-qa-debian9-debian9-opensuse-salt

    spacecmd activationkey_listchildchannels 1-qa-debian9-test
    debian9-servers-qa-debian9-debian-9-amd64-main-security
    debian9-servers-qa-debian9-debian-9-amd64-main-updates
    debian9-servers-qa-debian9-debian9-opensuse-salt

After the bootstrap, the file pushed by salt is empty:

    /etc/apt/sources.list.d/susemanager\:channels.list

First question that is puzzling me: is it normal that the channels are not subscribed automatically even if the activation key has the Debian channels assigned correctly? Does this have something to do with the susemanager-sls state you mentioned?

I will have a closer look at the files below on the Uyuni server tomorrow and start playing with them:

    /usr/share/susemanager/mgr_bootstrap_data.py
    /usr/sbin/mgr-create-bootstrap-repo

-

almost 6 years ago by juliogonzalezgil | Reply

What's the content of /etc/apt/sources.list.d/susemanager\:channels.list?

> Is it normal that the channels are not subscribed automatically even if the activation key has the debian channells assigned correctly?

Well, if the activation key had the channels assigned BEFORE the onboarding, then that's a bug.

If you add channels to an activation key AFTER the onboarding, then already onboarded clients will not get the channels.

-

almost 6 years ago by nicoladm | Reply

>What's the content of /etc/apt/sources.list.d/susemanager:channels.list

    root@debian9-uyuni:~# cat /etc/apt/sources.list/susemanager\:channels.list
    # Channels managed by SUSE Manager
    # Do not edit this file, changes will be overwritten

To double check, I deselected and reselected the Debian channels in the activation key and bootstrapped again, and I can confirm I got the same behaviour: I needed to manually subscribe the channels from Systems --> Debian host --> Software --> Software channels, because they were shown as "None, disable service".

Where is the place to raise this bug?

-

almost 6 years ago by juliogonzalezgil | Reply

Did the onboarding complete without issues?

-

almost 6 years ago by nicoladm | Reply

Not yet, at least not automatically.

I am facing a chicken-and-egg situation where I need salt-minion-2019.2.0+ds-1.all-deb to be installed for the bootstrap to work properly (disable the default Debian channels, assign the susemanager channels, and so on).

I am looking at the bootstrap script; there might be something we need to tweak there as well, as it is failing with:

    pkg_|-salt-minion-package_|-salt-minion_|-latest(retcode=2): No information found for 'salt-minion'.
    file_|-/etc/salt/minion.d/susemanager.conf_|-/etc/salt/minion.d/susemanager.conf_|-managed(retcode=2): One or more requisite failed: bootstrap.salt-minion-package
    file_|-/etc/salt/pki/minion/minion.pub_|-/etc/salt/pki/minion/minion.pub_|-managed(retcode=2): One or more requisite failed: bootstrap.salt-minion-package
    service_|-salt-minion_|-salt-minion_|-running(retcode=2): One or more requisite failed: bootstrap.salt-minion-package, bootstrap./etc/salt/pki/minion/minion.pem, bootstrap./etc/salt/minion.d/susemanager.conf, bootstrap./etc/salt/pki/minion/minion.pub, bootstrap./etc/salt/minion_id
    file_|-/etc/salt/minion_id_|-/etc/salt/minion_id_|-managed(retcode=2): One or more requisite failed: bootstrap.salt-minion-package
    file_|-/etc/salt/pki/minion/minion.pem_|-/etc/salt/pki/minion/minion.pem_|-managed(retcode=2): One or more requisite failed: bootstrap.salt-minion-package

Regarding the mgr-bootstrap, I made the changes you suggested to /usr/share/susemanager/mgr_bootstrap_data.py and it seems to be working fine:

    mgr-create-bootstrap-repo --with-custom-channels
    1. SLE-12-SP4-x86_64
    2. debian9-amd64-uyuni
    Enter a number of a product label: 2
    Creating bootstrap repo for debian9-amd64-uyuni
    copy 'libsodium18-1.0.11-2.amd64-deb'
    copy 'dctrl-tools-2.24-2+b1.amd64-deb'
    copy 'libzmq5-4.2.1-4+deb9u2.amd64-deb'
    copy 'python-chardet-2.3.0-2.all-deb'
    copy 'python-croniter-0.3.12-2.all-deb'
    copy 'python-crypto-2.6.1-7.amd64-deb'
    copy 'python-dateutil-2.5.3-2.all-deb'
    copy 'python-enum34-1.1.6-1.all-deb'
    copy 'python-ipaddress-1.0.17-1.all-deb'
    copy 'python-jinja2-2.8-1.all-deb'
    copy 'python-markupsafe-0.23-3.amd64-deb'
    copy 'python-minimal-2.7.13-2.amd64-deb'
    copy 'python-msgpack-0.4.8-1.amd64-deb'
    copy 'python-openssl-16.2.0-1.all-deb'
    copy 'python-pkg-resources-33.1.1-1.all-deb'
    copy 'python-psutil-5.0.1-1.amd64-deb'
    copy 'python-requests-2.12.4-1.all-deb'
    copy 'python-six-1.10.0-3.all-deb'
    copy 'python-systemd-233-1.amd64-deb'
    copy 'python-tornado-4.4.3-1.amd64-deb'
    copy 'python-tz-2016.7-0.3.all-deb'
    copy 'python-urllib3-1.19.1-1.all-deb'
    copy 'python-yaml-3.12-1.amd64-deb'
    copy 'python-zmq-16.0.2-2.amd64-deb'
    copy 'python-pycurl-7.43.0-2.amd64-deb'
    copy 'salt-common-2019.2.0+ds-1.all-deb'
    copy 'salt-minion-2019.2.0+ds-1.all-deb'
    copy 'dmidecode-3.0-4.amd64-deb'
    Exporting indices...

    ll /srv/www/htdocs/pub/repositories/debian/9/bootstrap/
    total 0
    drwxr-xr-x 1 root root 26 Feb 11 23:21 conf
    drwxr-xr-x 1 root root 154 Feb 11 23:21 db
    drwxr-xr-x 1 root root 18 Feb 11 23:21 dists
    drwxr-xr-x 1 root root 8 Feb 11 23:21 pool

-

almost 6 years ago by juliogonzalezgil | Reply

> Is it normal that the channels are not subscribed automatically even if the activation key has the debian channells assigned correctly?

Then in this case, I think it's normal. The first thing the bootstrapping does is disable the repositories, and then add the bootstrap repository.

"No information found for 'salt-minion'" seems to show that the package was not found, even though I can see it in the log you provided.

First, check that you can find the package at /srv/www/htdocs/pub/repositories/debian/9/bootstrap/ (most probably you will).

If that's the case, then it's time to check the susemanager-sls package, as that is where the association between an OS and the bootstrap repository happens, according to the salt grains available during bootstrap (check susemanager-utils/susemanager-sls/salt/bootstrap/).

Maybe a patch is needed there, most probably in the init.sls file.

-

almost 6 years ago by juliogonzalezgil | Reply

BTW, if you can join Rocket.chat maybe we'll be able to collaborate faster than only using the website :-)

-


almost 6 years ago by juliogonzalezgil | Reply

Having a look at Amazon Linux 2 already :-)

-

almost 5 years ago by juliogonzalezgil | Reply

Just discovered that Amazon Linux 2 is now publishing XML information, and not just sqlite:

http://amazonlinux.default.amazonaws.com/2/core/2.0/x8664/34112b4f91c3e1ecf2b2e90cfd565b12690fa3c6a3e71a5ac19029d2a9bd3869/repodata/repomd.xml http://amazonlinux.default.amazonaws.com/2/core/2.0/x8664/34112b4f91c3e1ecf2b2e90cfd565b12690fa3c6a3e71a5ac19029d2a9bd3869/repodata/primary.xml.gz

So it seems I will be able to bring this back to life, maybe without any changes to reposync.

The plan is to reopen my PR, test again, and if it works see if I can fix the product detection (no promises) and some more stuff.

As for Astra Linux, let's see if I can convince OBS guys to fix the repos they added, so I can enable for Uyuni.
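To show what "publishing XML information, and not just sqlite" means in practice, here is a minimal Python sketch that lists the metadata types advertised by a repomd.xml file. The sample document is hand-written and shortened for this example (it is not the real Amazon Linux file), and the function name `metadata_locations` is an assumption, not part of reposync.

```python
# Illustrative sketch: list the metadata types advertised by a repomd.xml.
# The sample is hand-written and much shorter than a real repomd.xml.
import xml.etree.ElementTree as ET

REPO_NS = {"repo": "http://linux.duke.edu/metadata/repo"}

SAMPLE = """<repomd xmlns="http://linux.duke.edu/metadata/repo">
  <data type="primary">
    <location href="repodata/primary.xml.gz"/>
  </data>
  <data type="primary_db">
    <location href="repodata/primary.sqlite.gz"/>
  </data>
</repomd>
"""


def metadata_locations(repomd_xml):
    """Map each <data type="..."> entry to its location href."""
    root = ET.fromstring(repomd_xml)
    result = {}
    for data in root.findall("repo:data", REPO_NS):
        location = data.find("repo:location", REPO_NS)
        if location is not None:
            result[data.get("type")] = location.get("href")
    return result


locations = metadata_locations(SAMPLE)
# "primary" is the XML package list; "primary_db" is the sqlite variant.
print("XML primary metadata available:", "primary" in locations)
```

A repository that only advertises `primary_db` would be the sqlite-only situation described above.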

-

-

almost 6 years ago by nicoladm | Reply

@juliogonzalezgil thanks Julio for the suggestions!! will start to do some testing hopefully this afternoon/evening

-

almost 6 years ago by Pharaoh_Atem | Reply

@juliogonzalezgil What about OpenMandriva? They seem interesting...

-

almost 6 years ago by juliogonzalezgil | Reply

@Pharaoh_Atem join and try :-D

So far I will be happy if I can complete Amazon Linux, Astra Linux and (maybe) Oracle Linux. No more time during this hackweek :-\

So either for next hackweek, or you (or someone else) can have a look :-)

-

almost 6 years ago by juliogonzalezgil | Reply

For reference, we are using a rocket.chat channel, as so far only Nicola and I are working on this.

If someone from the community wants to join during this hackweek, we can move to Freenode or Gitter.

-

-

almost 6 years ago by truquaeb | Reply

I'm trying to move away from Spacewalk, but I'm getting stuck with my Fedora clients. It seems there aren't Uyuni client tools built for Fedora. I've got the repos syncing, salt seems to work, and the machines are registered, but they can't access the susemanager repos. I assume client tools need to be built, but where can I start?

-

almost 6 years ago by juliogonzalezgil | Reply

Well, that depends. New distributions will only support salt (unless the community actually takes care of maintaining traditional for them).

You could start by creating an OBS repository based on Fedora and try to build salt there. You can use https://build.opensuse.org/project/show/systemsmanagement:Uyuni:Master:CentOS8-Uyuni-Client-Tools as inspiration.

But I guess we'd also need changes in other packages, so Uyuni is able to recognize this new distribution. My PR to add Astra Linux (https://github.com/uyuni-project/uyuni/pull/1915) can also be used as inspiration (Astra Linux is Debian-based, but even with this in mind, it can be of help).

-

-

almost 5 years ago by pagarcia | Reply

More to add: Alibaba Cloud Linux 2 (WIP here: https://github.com/paususe/uyuni/commits/paususe-aliyun), Alma Linux and Rocky Linux.

-

almost 5 years ago by juliogonzalezgil | Reply

As soon as we have someone to take care of them, I will add them :-)

I understand you want to take care of Alibaba, right @pagarcia?

-

almost 5 years ago by pagarcia | Reply

Yes, I will try to have Alibaba Cloud Linux 2 done before Hackweek even.

-

almost 5 years ago by juliogonzalezgil | Reply

Alibaba Cloud Linux 2 added, with all checkboxes. Please join the project, using the button above.

If you will also handle Alma, let me know and I'll add it.

-


over 3 years ago by juliogonzalezgil | Reply

Project is updated, in preparation for Hackweek 21.

As always, we can add more distributions if someone wants to work on them. I plan to focus on finishing Ubuntu 22.04, and testing AlmaLinux9 :-)

-

almost 3 years ago by juliogonzalezgil | Reply

For reference, project is now updated with the plans for Hackweek 22.

Same as always, we can add more distributions if someone wants to work on them.

For now the plans are:

- OpenEuler

- openSUSE Leap Micro and openSUSE MicroOS

- openSUSE Leap 15.5 and SLES 15 SP5

-

almost 3 years ago by smflood | Reply

Please can someone correct the SUSE spacewalk GitHub links for openSUSE Leap Micro ( https://github.com/SUSE/spacewalk/issues/20349 and https://github.com/SUSE/spacewalk/issues/20306 ) as they both give "Page not found"

-

almost 3 years ago by juliogonzalezgil | Reply

Yes, I reused things reported for SUSE Manager, and those are private. But they are described at https://github.com/uyuni-project/uyuni/pull/6550

-

-

almost 3 years ago by juliogonzalezgil | Reply

Closing for now, and reopening for next hackweek.

For this hackweek:

- Support for SLE 15 SP5 and openSUSE Leap 15.5 is added (pending merging).

- Support for openSUSE Leap Micro 5.3 is almost there; we just need a fix for the bootstrap problem to have the basic stuff (under research), and then the other fixes that are common for SLE Micro as well.

- Support for openSUSE MicroOS is not there, but we'll see if we can get the bundle built in the next few months, as a PoC.

-

-

over 2 years ago by deneb_alpha | Reply

hello @juliogonzalezgil, for this hackweek I would like to work on adding Zorin OS. In particular I would like to enable the Education version.

Some details:

- https://help.zorin.com/docs/getting-started/system-requirements/

- https://zorin.com/os/education/

It's a distro based on Ubuntu. This is the /etc/os-release:

- NAME="Zorin OS"

- VERSION="16.3"

- ID=zorin

- ID_LIKE=ubuntu

- PRETTY_NAME="Zorin OS 16.3"

- VERSION_ID="16"

- HOME_URL="https://zorin.com/os/"

- SUPPORT_URL="https://help.zorin.com/"

- BUG_REPORT_URL="https://zorin.com/os/feedback/"

- PRIVACY_POLICY_URL="https://zorin.com/legal/privacy/"

- VERSION_CODENAME=focal

- UBUNTU_CODENAME=focal

-

about 2 years ago by juliogonzalezgil | Reply

Just for reference, the project is updated and ready for Hackweek 23. But if anyone wants to explore more OS, please add comments, as @deneb_alpha did :-)

-

about 2 years ago by juliogonzalezgil | Reply

Completed this hack week:

- Amazon Linux 2023

- Raspberry Pi OS 12

Progress on:

- openEuler

- openSUSE Tumbleweed and openSUSE MicroOS (PoC)

-

over 1 year ago by juliogonzalezgil | Reply

For awareness: I updated the project for Hack Week 24.

As usual: if anyone wants to explore more OS, rather than those suggested, just comment here :-)

-

about 1 year ago by juliogonzalezgil | Reply

Progress for me:

- Code and doc for openSUSE Leap 16.0, done (links in the description above). With that, a bug report to agama, one fix for the uyuni site, one for the doc, and one problem reported to the openSUSE Leap releng about /etc/os-release for Leap.

- openSUSE Tumbleweed and MicroOS: did some extra testing, but could not complete what was done last year. SELinux breaks the bundle on MicroOS, and probably on Tumbleweed (Tumbleweed does not enable it by default).

- Helped Raul a bit with FUSS, and Marina a bit with Zorin OS.

-

-

about 1 year ago by raulosuna | Reply

Work during the hackweek regarding FUSS:

- Reposync working

- Onboarding works from the UI. Need to test bootstrap script and salt-ssh minion

- Installing a new package works. Need to test updates and removals.

- Everything else needs to be tested, but being a 1:1 clone of Debian 12 I don't expect any issues.

Hope to be able to continue work during next Learning Tuesday (Dec. 3rd). PR (draft): https://github.com/uyuni-project/uyuni/pull/9502

-

about 1 year ago by deneb_alpha | Reply

I didn't have a complete week to focus on hackweek due to some releases to be done at the beginning of the week.

For the Zorin OS 17.2 support, this is where we are now:

- Researched how the OS is designed (3 "flavors" with different sets of packages). The "base OS" is Ubuntu 22.04, but they enhance it with additional repos and more up-to-date packages.

- The salt bundle used for Ubuntu 22.04 works for installing/removing/searching packages, but salt needs a patch.

- I researched how to contribute to salt and what to change in the code to support Zorin OS. The patch is ready and the code works locally. The patch needs to be finalized with tests and submitted to upstream salt. The channel changes are ready; there are several additional repos to be added and a few more GPG keys too.

Initial draft PR: https://github.com/uyuni-project/uyuni/pull/9510

I'll continue to work on it during the next Learning Tuesday (Dec 3rd). The initial work will be presented at the next Uyuni Community Hours on Nov 28th.

-

11 months ago by Jasbon | Reply

This looks like a solid and well-organized initiative for expanding Uyuni's support for additional GNU/Linux distributions! Are you looking to contribute to this project, need help understanding a specific part, or want assistance testing one of these distributions?

-

9 months ago by juliogonzalezgil | Reply

Hello! We are usually looking for contributions and tests. Sometimes adding support is trivial if you look at some PRs, so the tester can even be a contributor as well :-)

-

-

Similar Projects

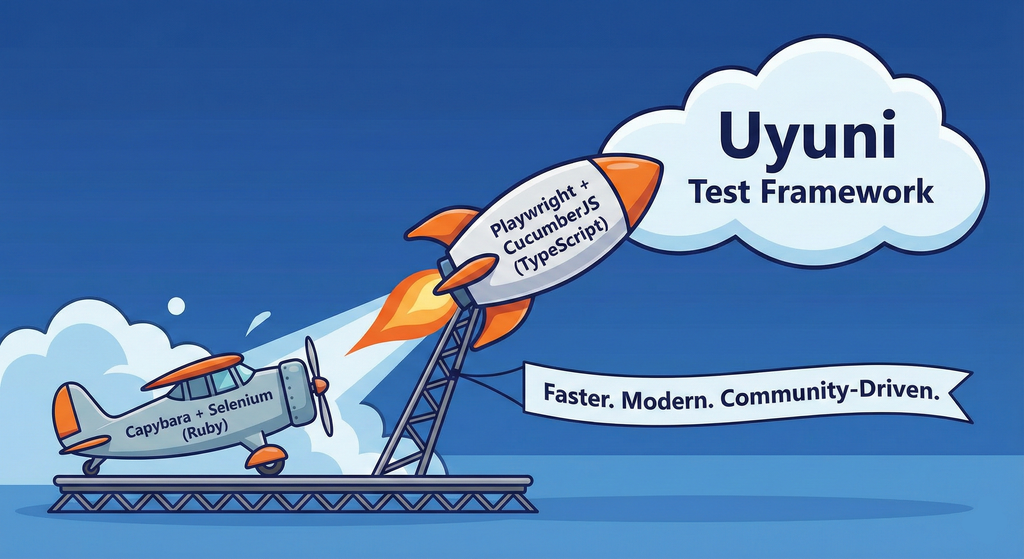

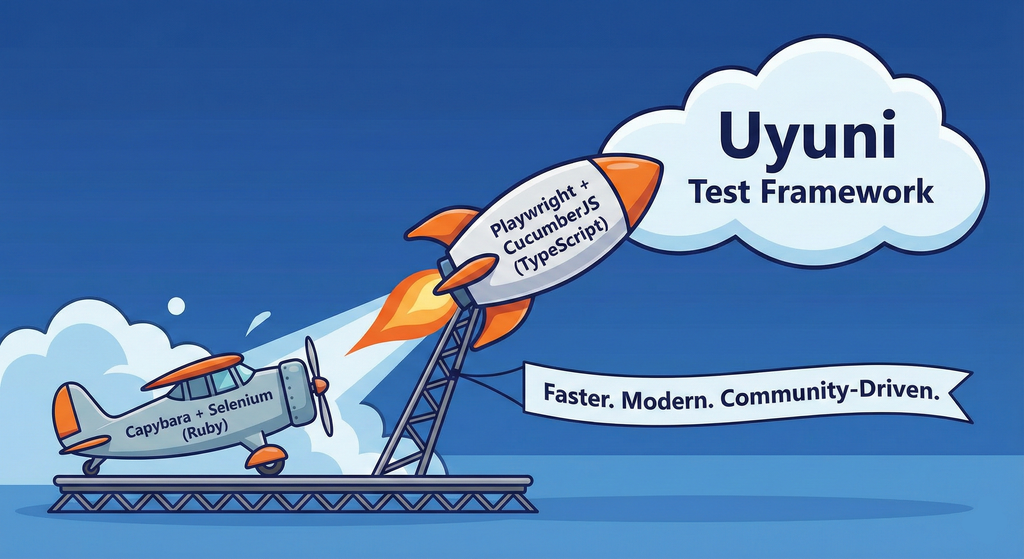

Move Uyuni Test Framework from Selenium to Playwright + AI by oscar-barrios

Description

This project aims to migrate the existing Uyuni Test Framework from Selenium to Playwright. The move will improve the stability, speed, and maintainability of our end-to-end tests by leveraging Playwright's modern features. We'll be rewriting the current Selenium code in Ruby to Playwright code in TypeScript, which includes updating the test framework runner, step definitions, and configurations. This is also necessary because we're moving from Cucumber Ruby to CucumberJS.

If you're still curious about the AI in the title, it was just a way to grab your attention. Thanks for your understanding.

Nah, let's be honest: AI helped a lot to vibe code a good part of the Ruby methods of the Test framework, moving them to TypeScript, along with the migration from Capybara to Playwright. I've been using "Cline" as a plugin for the WebStorm IDE, using the Gemini API behind it.

Goals

- Migrate Core tests including Onboarding of clients

- Improve test reliability: Measure and confirm a significant reduction of flakiness.

- Implement a robust framework: Establish a well-structured and reusable Playwright test framework using CucumberJS.

Resources

- Existing Uyuni Test Framework (Cucumber Ruby + Capybara + Selenium)

- My Template for CucumberJS + Playwright in TypeScript

- Started Hackweek Project

Uyuni Saltboot rework by oholecek

Description

When Uyuni switched over to containerized proxies, we had to abandon the Salt-based saltboot infrastructure we had before. Uyuni already had integration with a Cobbler provisioning server, and the saltboot infrastructure was re-implemented on top of this Cobbler integration.

What was not obvious from the start was that Cobbler, for all its features, is woefully slow when dealing with saltboot-sized environments. We made some performance improvements, introduced transactions, and generally tried to make this setup usable. However, the underlying slowness remained.

Goals

This project is not trying to invent anything new; it simply implements the saltboot infrastructure directly in the Uyuni server core.

Instead of having Cobbler generate grub and pxelinux configurations for all the thousands of systems and branches, we will provide a GET endpoint to retrieve the grub or pxelinux file during boot:

/saltboot/group/grub/$fqdn and similar for systems /saltboot/system/grub/$mac

Next, we adapt our tftpd translator to query these endpoints when asked for a default or MAC-based config.

Lastly, a similar thing needs to be done on our Apache server when HTTP UEFI boot is used.
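As a rough illustration of the lookup described above, the following Python sketch builds the endpoint paths that a TFTP translator or web server could query. Only the `/saltboot/group/grub/$fqdn` and `/saltboot/system/grub/$mac` paths come from the description; the function names, the `pxelinux` path segment, and the MAC normalization are assumptions made for the example.

```python
import re

# Loaders named in the description. The "pxelinux" path segment is an
# assumption modelled on the grub paths given in the text.
LOADERS = {"grub", "pxelinux"}

MAC_RE = re.compile(r"^[0-9a-f]{2}(:[0-9a-f]{2}){5}$")


def normalize_mac(mac: str) -> str:
    """Return a lower-case, colon-separated MAC address or raise ValueError."""
    candidate = mac.strip().lower().replace("-", ":")
    if not MAC_RE.match(candidate):
        raise ValueError(f"not a MAC address: {mac!r}")
    return candidate


def _check_loader(loader: str) -> None:
    if loader not in LOADERS:
        raise ValueError(f"unknown loader: {loader!r}")


def group_config_path(loader: str, fqdn: str) -> str:
    """Path for the default (group-level) boot configuration."""
    _check_loader(loader)
    return f"/saltboot/group/{loader}/{fqdn}"


def system_config_path(loader: str, mac: str) -> str:
    """Path for the boot configuration of one system, keyed by MAC address."""
    _check_loader(loader)
    return f"/saltboot/system/{loader}/{normalize_mac(mac)}"
```

A translator would call `group_config_path` when a client asks for the default config and `system_config_path` when it asks for a MAC-based one; how the real translator extracts the loader and MAC from the request is not specified here.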

Resources

Set Up an Ephemeral Uyuni Instance by mbussolotto

Description

To test, check, and verify the latest changes in the master branch, we want to easily set up an ephemeral environment.

Goals

- Create an ephemeral environment manually

- Create an ephemeral environment automatically

Resources

https://github.com/uyuni-project/uyuni

https://www.uyuni-project.org/uyuni-docs/en/uyuni/index.html

Uyuni Health-check Grafana AI Troubleshooter by ygutierrez

Description

This project explores the feasibility of using the open-source Grafana LLM plugin to enhance the Uyuni Health-check tool with LLM capabilities. The idea is to integrate a chat-based "AI Troubleshooter" directly into existing dashboards, allowing users to ask natural-language questions about errors, anomalies, or performance issues.

Goals

- Investigate if and how the grafana-llm-app plug-in can be used within the Uyuni Health-check tool.

- Investigate if this plug-in can be used to query LLMs for troubleshooting scenarios.

- Evaluate support for local LLMs and external APIs through the plugin.

- Evaluate if and how the Uyuni MCP server could be integrated as another source of information.

Resources

mgr-ansible-ssh - Intelligent, Lightweight CLI for Distributed Remote Execution by deve5h

Description

By the end of Hack Week, the target will be to deliver a minimal functional version 1 (MVP) of a custom command-line tool named mgr-ansible-ssh (a unified wrapper for BOTH ad-hoc shell & playbooks) that allows operators to:

- Execute arbitrary shell commands on thousands of remote machines simultaneously using Ansible Runner, with artifacts saved locally.

- Pass runtime options such as inventory file, remote command string or playbook, parallel forks, limits, dry-run mode, or no-ansible-output.

- Leverage existing SSH trust relationships without additional setup.

- Provide a clean, intuitive CLI interface with --help for ease of use. It should provide consistent UX & CI-friendly interface.

- Establish a foundation that can later be extended with advanced features such as logging, grouping, interactive shell mode, safe-command checks, and parallel execution tuning.

The MVP should enable day-to-day operations to efficiently target thousands of machines with a single, consistent interface.

Goals

Primary Goals (MVP):

Build a functional CLI tool (mgr-ansible-ssh) capable of executing shell commands on multiple remote hosts using Ansible Runner. Test the tool across a large distributed environment (1000+ machines) to validate its performance and reliability.

Looking forward to significantly reducing the zypper deployment time across all 351 RMT VM servers in our MLM cluster by eliminating the dependency on the taskomatic service, bringing execution down to a fraction of the current duration. The tool should also support multiple runtime flags, such as:

mgr-ansible-ssh: Remote command execution wrapper using Ansible Runner

Usage: mgr-ansible-ssh [--help] [--version] [--inventory INVENTORY]

[--run RUN] [--playbook PLAYBOOK] [--limit LIMIT]

[--forks FORKS] [--dry-run] [--no-ansible-output]

Required Arguments

--inventory, -i Path to Ansible inventory file to use

Any One of the Arguments Is Required

--run, -r Execute the specified shell command on target hosts

--playbook, -p Execute the specified Ansible playbook on target hosts

Optional Arguments

--help, -h Show the help message and exit

--version, -v Show the version and exit

--limit, -l Limit execution to specific hosts or groups

--forks, -f Number of parallel Ansible forks

--dry-run Run in Ansible check mode (requires -p or --playbook)

--no-ansible-output Suppress Ansible stdout output
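The help text above maps fairly directly onto Python's `argparse`. The sketch below is one possible way to declare it, not the project's actual code: the version string is a placeholder, and treating `--run` and `--playbook` as mutually exclusive is an assumption (the help text only says one of them is required). It parses arguments only and does not call Ansible Runner.

```python
import argparse


def build_parser() -> argparse.ArgumentParser:
    """Declare the options listed in the help text (-h/--help is built in)."""
    parser = argparse.ArgumentParser(
        prog="mgr-ansible-ssh",
        description="Remote command execution wrapper using Ansible Runner",
    )
    # The version number is a placeholder for this sketch.
    parser.add_argument("--version", "-v", action="version",
                        version="%(prog)s 0.0.0")
    parser.add_argument("--inventory", "-i", required=True,
                        help="Path to Ansible inventory file to use")

    # Assumption: exactly one of --run / --playbook must be given.
    action = parser.add_mutually_exclusive_group(required=True)
    action.add_argument("--run", "-r",
                        help="Execute the specified shell command on target hosts")
    action.add_argument("--playbook", "-p",
                        help="Execute the specified Ansible playbook on target hosts")

    parser.add_argument("--limit", "-l",
                        help="Limit execution to specific hosts or groups")
    parser.add_argument("--forks", "-f", type=int,
                        help="Number of parallel Ansible forks")
    parser.add_argument("--dry-run", action="store_true",
                        help="Run in Ansible check mode (requires -p or --playbook)")
    parser.add_argument("--no-ansible-output", action="store_true",
                        help="Suppress Ansible stdout output")
    return parser


def parse_args(argv=None) -> argparse.Namespace:
    """Parse argv and enforce the rule that --dry-run needs a playbook."""
    parser = build_parser()
    args = parser.parse_args(argv)
    if args.dry_run and not args.playbook:
        parser.error("--dry-run requires -p/--playbook")
    return args
```

For example, `parse_args(["-i", "hosts.ini", "-r", "uptime", "-f", "50"])` yields a namespace with `inventory`, `run`, and `forks` set, while combining `--dry-run` with `--run` exits with a usage error.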

Secondary/Stretch Goals (if time permits):

- Add pretty output formatting (success/failure summary per host).

- Implement basic logging of executed commands and results.

- Introduce safety checks for risky commands (shutdown, rm -rf, etc.).

- Package the tool so it can be installed with pip or stored internally.
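The safety check for risky commands could start out as a simple token-based filter. The following is a minimal sketch under stated assumptions: the function name and the deny-list are hypothetical (only `shutdown` and `rm -rf` are named above; `reboot` and `poweroff` are added for illustration), and a token match is deliberately conservative, so it can also flag harmless commands such as `echo shutdown`.

```python
import shlex

# Hypothetical deny-list: "shutdown" comes from the goals above,
# "reboot" and "poweroff" are assumed additions.
RISKY_COMMANDS = {"shutdown", "reboot", "poweroff"}


def is_risky(command: str) -> bool:
    """Return True if the command line contains an obviously risky call."""
    try:
        tokens = shlex.split(command)
    except ValueError:
        # Unbalanced quotes: refuse rather than guess.
        return True
    for index, token in enumerate(tokens):
        name = token.rsplit("/", 1)[-1]  # "/sbin/shutdown" -> "shutdown"
        if name in RISKY_COMMANDS:
            return True
        if name == "rm":
            # Collect short flags after rm, e.g. "-rf" or "-r -f".
            flags = "".join(
                t.lstrip("-") for t in tokens[index + 1:]
                if t.startswith("-") and not t.startswith("--")
            )
            if "r" in flags.lower() and "f" in flags:
                return True
    return False
```

This does not understand shell syntax beyond quoting (for instance `ls;rm -rf /` without spaces around `;` is tokenized as `ls;rm` and slips through), so it is a starting point rather than a guarantee.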

Resources

Collaboration is welcome from anyone interested in CLI tooling, automation, or distributed systems. Skills that would be particularly valuable include:

- Python especially around CLI dev (argparse, click, rich)

Set Uyuni to manage edge clusters at scale by RDiasMateus

Description

Prepare a PoC on how to use MLM to manage edge clusters. These clusters are normally identical across locations, and we have a large number of them.

The goal is to produce a set of sets/best practices/scripts to help users manage this kind of setup.

Goals

step 1: Manual set-up

Goal: Have a running application in k3s and be able to update it using System Upgrade Controller (SUC)

- Deploy Micro 6.2 machine

- Deploy k3s - single node

- https://docs.k3s.io/quick-start

- Build/find a simple web application (static page)

- Build/find a helmchart to deploy the application

- Deploy the application on the k3s cluster

- Install App updates through helm update

- Install OS updates using MLM

step 2: Automate day 1

Goal: Trigger the application deployment and update from MLM

- Salt states for application (with static data)

- Deploy the application helmchart, if not present

- Install app updates through helmchart parameters

- Link it to Git

- Define how to link the state to the machines (based on some pillar data? Using configuration channels by importing the state? Naming convention?)

- Use git update to trigger helmchart app update

- Recurrent state applying configuration channel?

step 3: Multi-node cluster

Goal: Use SUC to update a multi-node cluster.

- Create a multi-node cluster

- Deploy application

- call the helm update/install only on control plane?

- Install App updates through helm update

- Prepare a SUC for OS update (k3s also? How?)

- https://github.com/rancher/system-upgrade-controller

- https://documentation.suse.com/cloudnative/k3s/latest/en/upgrades/automated.html

- Update/deploy the SUC?

- Update/deploy the SUC CRD with the update procedure

Enhance setup wizard for Uyuni by PSuarezHernandez

Description

This project wants to enhance the initial setup of Uyuni after its installation, so it's easier for a user to start using it.

Uyuni currently uses "uyuni-tools" (mgradm) as the installation entrypoint, to trigger the installation of Uyuni in the given host, but does not really perform an initial setup, for instance:

- user creation

- adding products / channels

- generating bootstrap repos

- create activation keys

- ...

Goals

- Provide initial setup wizard as part of mgradm uyuni installation

Resources

Switch software-o-o to store repomd in a database by hennevogel

Description

The openSUSE Software portal is a web app to explore binary packages of openSUSE distributions. Kind of like a package manager / app store.

https://software.opensuse.org/

This app has been around forever (August 2007) and its architecture is a bit brittle. It acts as a frontend to the OBS distributions and published binary search APIs, calculates and caches a lot of stuff in memory and needs code changes nearly every openSUSE release to keep up.

As you can imagine, it's a heavy user of the OBS API, especially when caches are cold.

Goals

I want to change the app to cache repomd data in a (PostgreSQL) database structure:

- Distributions have many Repositories

- Repositories have many Packages

- Packages have many Patches
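The "has many" chain above can be pictured with a few nested records. The app itself is a Rails application, so the real model would be ActiveRecord classes backed by database tables; the Python dataclasses below are only an illustration of the relationships, and every field and method name is hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class Patch:
    name: str


@dataclass
class Package:
    name: str
    patches: list[Patch] = field(default_factory=list)


@dataclass
class Repository:
    name: str
    packages: list[Package] = field(default_factory=list)


@dataclass
class Distribution:
    name: str
    repositories: list[Repository] = field(default_factory=list)

    def search(self, term: str) -> list[tuple[str, Package]]:
        """Return (repository name, package) pairs whose name contains term."""
        needle = term.lower()
        return [
            (repo.name, pkg)
            for repo in self.repositories
            for pkg in repo.packages
            if needle in pkg.name.lower()
        ]
```

A search over a Distribution walks only its own Repositories, which mirrors the idea of answering the common queries from local data instead of the OBS API.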

The UI workflows will be as follows:

- As an admin I set up a Distribution and its Repositories

- As an admin I sync all Repositories' repomd files into the database

- As a user I browse a Distribution by category

- As a user I search for a Package of a Distribution in its Repositories

- As a user I extend the search to Packages built on OBS for this Distribution

This has a couple of pros:

- Less traffic on the OBS API as the usual Packages are inside the database

- Easier base to add features to this page. Like comments, ratings, openSUSE specific screenshots etc.

- Separating the Distribution package search from searching through OBS will hopefully make it clearer for newbies that enabling extra repositories is kind of dangerous.

And one con:

- You can't search for packages built for foreign distributions with this app anymore (although we could consume their repomd etc. but I doubt we have the audience on an opensuse.org domain...)

TODO

- Introduce a PG database

- Add clockworkd as scheduler and delayed_job as ActiveJob backend

- Introduce ActiveStorage

- Build initial data model

- Introduce repomd to database sync

- Adapt repomd sync to Leap 16.0 repomd layout changes (single arch, no update repo)

- Make repomd sync idempotent

- Introduce database search

- Set up foreman to run rails s and rake jobs:workoff

- Adapt UI

- Build Category Browsing

- Build Admin Distribution CRUD interface

git-fs: file system representation of a git repository by fgonzalez

Description

This project aims to create a Linux equivalent to the git/fs concept from git9. Now, I'm aware that git provides worktrees, but they are not enough for many use cases. Having a read-only representation of the whole repository simplifies scripting by quite a bit and, most importantly, reduces disk space usage. For instance, during kernel livepatching development, we need to process and analyze the source code of hundreds of kernel versions simultaneously. This is rather painful with git worktrees, as each kernel branch requires no less than 1G of disk space.

As for the technical details, I'll implement the file system using FUSE. The project itself should not take much time to complete, but let's see where it takes me.

I'll try to keep the same design as git9, so the file system will look something like:

/mnt/git
    +-- ctl
    +-- HEAD
    |    +-- tree
    |    |    +--files
    |    |    +--in
    |    |    +--head
    |    |
    |    +-- hash
    |    +-- msg
    |    +-- parent
    |
    +-- branch
    |    |
    |    +-- heads
    |    |    +-- master
    |    |         +-- [commit files, see HEAD]
    |    +-- remotes
    |         +-- origin
    |              +-- master
    |                   +-- [commit files, see HEAD]
    +-- object
         +-- 00051fd3f066e8c05ae7d3cf61ee363073b9535f # blob contents
         +-- 00051fd3f066e8c05ae7d3cf61ee363073b9535c
              +-- [tree contents, see HEAD/tree]
         +-- 3f5dbc97ae6caba9928843ec65fb3089b96c9283
              +-- [commit files, see HEAD]

So, if you wanted to look at the commit message of the current branch, you could simply do:

cat /mnt/git/HEAD/msg

No collaboration needed. This is a solo project.
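To give an idea of how scripting against such a mount could look, here is a small Python sketch that reads commit messages through plain file access. It assumes the layout sketched above (`HEAD/msg`, `branch/heads/<name>/msg`); the helper names are hypothetical, and branch names containing slashes are not handled.

```python
from pathlib import Path


def commit_message(mount: Path, ref: str = "HEAD") -> str:
    """Read the commit message exposed under <mount>/<ref>/msg."""
    return (mount / ref / "msg").read_text()


def branch_messages(mount: Path) -> dict[str, str]:
    """Map each local branch name to the message of its tip commit."""
    heads = mount / "branch" / "heads"
    return {
        entry.name: (entry / "msg").read_text()
        for entry in sorted(heads.iterdir())
        if entry.is_dir()
    }
```

With the file system mounted at `/mnt/git`, `commit_message(Path("/mnt/git"))` would be the Python counterpart of the `cat` command, with no checkout or worktree involved.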

Goals

Implement a working prototype.

Measure and improve the performance if possible. This step will be the most crucial one. User space filesystems are slower by nature.

Resources

https://docs.kernel.org/filesystems/fuse/fuse.html

pudc - A PID 1 process that barks to the internet by mssola

Description

As a fun exercise in order to dig deeper into the Linux kernel, its interfaces, the RISC-V architecture, and all the dragons in between; I'm building a blog site cooked like this:

- The backend is written in a mixture of C and RISC-V assembly.

- The backend is actually PID1 (for real, not within a container).

- We poll and parse incoming HTTP requests ourselves.

- The frontend is a mere HTML page with htmx.

The project is meant to be Linux-specific, so I'm going to use io_uring, pidfs, namespaces, and Linux-specific features in order to drive all of this.

I'm open to suggestions and so on, but this is meant to be a solo project, as this is more of a learning exercise for me than anything else.

Goals

- Have a better understanding of different Linux features from user space down to the kernel internals.

- Most importantly: have fun.

Resources

openQA tests needles elaboration using AI image recognition by mdati

Description

In the openQA test framework, the needles framework identifies the state of a system under test (SUT) from a screenshot of its GUI or CLI terminal. It scans the many pictures in its repository, each associated with a set of tags (strings) and with selected smaller areas of the image. Managing needles currently requires storing many screenshots, including variants of GUI and CLI-terminal images, each one accompanied by a dedicated set of data references (JSON).

A smarter framework, using image recognition based on AI or other image-processing tools that are now widely available, could improve the matching process and hopefully reduce time and errors during image verification and detection.

Goals

The main scope of this idea is to match a "graphical" image of the console or GUI state of a running openQA test (a shell console or application GUI screenshot) using less time and fewer resources, and with fewer errors in data preparation and use, than the current openQA needles framework. That is:

- given a SUT (system under test) GUI or CLI-terminal screenshot, with a local distribution of pixels or text commands related to the status of a running test,

- we want to identify a desired target, e.g. a screen image status or data/commands context,

- based on AI/ML-pretrained archives containing objects, or on other suitable processing tools,

- possibly able to also identify objects not present in the archive, i.e. by means of AI/ML mechanisms.

- the matching result should then be adapted so that the openQA test can continue working with it, just as it would with the result produced by the original openQA needles framework.

- We expect an improvement in matching time (less time), reliability of the expected result (fewer errors) and simpler archive maintenance when adding/removing objects (smaller DB and fewer actions).

Hackweek POC:

Main steps

- Phase 1 - Plan

- study the available tools

- prepare a plan for the process to build

- Phase 2 - Implement

- write and build a draft application

- Phase 3 - Data

- prepare the data archive from a subset of needles

- initialize/pre-train the base archive

- select a screenshot from the subset, removing/changing some part

- Phase 4 - Test

- run the POC application

- expect the image type to be identified in a good percentage of cases.

Resources

The first step of this project is precisely the identification of useful resources for this scope; some possibilities are:

- SUSE AI and other ML tools (e.g. TensorFlow)

- Tools able to manage images

- RPA test tools (e.g. Robot Framework)

- other.

Project references

- Repository: openqa-needles-AI-driven

Multimachine on-prem test with opentofu, ansible and Robot Framework by apappas

Description

A long time ago I explored using the Robot Framework for testing. A big deficiency over our openQA setup is that bringing up and configuring the connection to a test machine is out of scope.

Nowadays we have a way¹ to deploy SUTs outside openQA, but we only use it for cloud tests in conjunction with openQA. Using knowledge gained from that project, I am going to try to create a test scenario that replicates an openQA test but this time including the deployment and setup of the SUT.

Goals

Create a simple multimachine test scenario with the support server and SUT all created by the robot framework.

Resources

- https://github.com/SUSE/qe-sap-deployment

- terraform-libvirt-provider

OS self documentation, health check and troubleshooting by roseswe

Project Description

The aim of this hackweek project is to improve the utility "cfg2html" so that it is even more usable under SLES and perhaps also under Rancher.

cfg2html (see also https://github.com/cfg2html/cfg2html) itself is a very mature utility for collecting and documenting information of an operating system like Linux, AIX, HP-UX and others.

Goal for this Hackweek

The aim is to extend cfg2html

- for SLES and SLES-for-SAP apps, high availability

- Improve code for MicroOS 5.x, SUMA, Edge and k8s environments

- fix shellbeauity warnings

- possibly add more plugins

- SUMA/Salt integration to collect.

Resources

Required skills: Bash, shell script and the SUSE products mentioned.

https://github.com/cfg2html/cfg2html

https://www.cfg2html.com/

VimGolf Station by emiler

Description

VimGolf is a challenge game where the goal is to edit a given piece of text into a desired final form using as few keystrokes as possible in Vim.

Some time ago, I built a rough portable station using a Raspberry Pi and a spare monitor. It was initially used to play VimGolf at the office and later repurposed for publicity at several events. This project aims to create a more robust version of that station and provide the necessary scripts and Ansible playbooks to make configuring your own VimGolf station easy.

Goals

- Refactor old existing scripts

- Implement challenge selection

- Load external configuration files

- Create Ansible playbooks

- Publish on GitHub

Resources

- https://www.vimgolf.com/

- https://github.com/dstein64/vimgolf

- https://github.com/igrigorik/vimgolf

SUSE Health Check Tools by roseswe

SUSE HC Tools Overview

A collection of tools written in Bash or Go 1.24++ to make it easier to handle the many tar.xz archives created by supportconfig.

Background: For SUSE HC we receive a bunch of supportconfig tarballs to check them for misconfiguration, areas for improvement or future changes.

The main focus of these HCs is High Availability (pacemaker), SLES itself and SAP workloads, especially around the SUSE best practices.

Goals

- Overall improvement of the tools

- Adding new collectors

- Add support for SLES16

Resources

csv2xls* example.sh go.mod listprodids.txt sumtext* trails.go README.md csv2xls.go exceltest.go go.sum m.sh* sumtext.go vercheck.py* config.ini csvfiles/ getrpm* listprodids* rpmdate.sh* sumxls* verdriver* credtest.go example.py getrpm.go listprodids.go sccfixer.sh* sumxls.go verdriver.go

docollall.sh* extracthtml.go gethostnamectl* go.sum numastat.go cpuvul* extractcluster.go firmwarebug* gethostnamectl.go m.sh* numastattest.go cpuvul.go extracthtml* firmwarebug.go go.mod numastat* xtr_cib.sh*

$ getrpm -r pacemaker

>> Product ID: 2795 (SUSE Linux Enterprise Server for SAP Applications 15 SP7 x86_64), RPM Name:

+--------------+----------------------------+--------+--------------+--------------------+

| Package Name | Version | Arch | Release | Repository |

+--------------+----------------------------+--------+--------------+--------------------+

| pacemaker | 2.1.10+20250718.fdf796ebc8 | x86_64 | 150700.3.3.1 | sle-ha/15.7/x86_64 |

| pacemaker | 2.1.9+20250410.471584e6a2 | x86_64 | 150700.1.9 | sle-ha/15.7/x86_64 |

+--------------+----------------------------+--------+--------------+--------------------+

Total packages found: 2

Collection and organisation of information about Bulgarian schools by iivanov

Description

To achieve this it will be necessary:

- Collect/download raw data from various government and non-governmental organizations

- Clean up raw data and organise it in some kind of database.

- Create tool to make queries easy.

- Or perhaps dump all data into AI and ask questions in natural language.

Goals

Selecting a particular school will provide information such as:

- School scores on national exams.

- School scores from the external evaluations exams.

- School town, municipality and region.

- Employment rate in a town or municipality.

- Average health of the population in the region.

Resources

Some of these are available only in Bulgarian.

- https://danybon.com/klasazia

- https://nvoresults.com/index.html

- https://ri.mon.bg/active-institutions

- https://www.nsi.bg/nrnm/ekatte/archive

Results

- Information about all Bulgarian schools with their scores during recent years cleaned and organised into SQL tables

- Information about all Bulgarian villages, cities, municipalities and districts cleaned and organised into SQL tables

- Census information about all Bulgarian villages and cities since the beginning of this century, cleaned and organised into SQL tables.

- Information about religion and ethnicity in all Bulgarian municipalities, cleaned and organised into SQL tables.

- Data successfully loaded into a locally running Ollama with the help of Vanna.AI

- Seems to be usable.

TODO

- Add more statistical information about municipalities and ....

Code and data

Song Search with CLAP by gcolangiuli

Description

Contrastive Language-Audio Pretraining (CLAP) is an open-source library that enables the training of a neural network on both audio and text descriptions, making it possible to search for audio using a text input. Several pre-trained models for song search are already available on Hugging Face.

Goals

Evaluate how CLAP can be used for song searching and determine which types of queries yield the best results by developing a Minimum Viable Product (MVP) in Python. Based on the results of this MVP, future steps could include:

- Music Tagging;

- Free text search;

- Integration with an LLM (for example, with MCP or the OpenAI API) for music suggestions based on your own library.

The code for this project will be entirely written using AI to better explore and demonstrate AI capabilities.

Result

In this MVP we implemented:

- Async Song Analysis with CLAP model

- Free Text Search of the songs

- Similar song search based on vector representation

- Containerised version with web interface
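Both free text search and similar-song search come down to comparing vectors in a shared embedding space. The sketch below shows that ranking step with cosine similarity in plain Python; it is an illustration, not the MVP's code. Real embeddings would come from a CLAP model (not shown), and the choice of cosine similarity as well as the function names are assumptions.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two equal-length vectors (0.0 if either is zero)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def rank_songs(query: list[float], library: dict[str, list[float]],
               top_k: int = 3) -> list[str]:
    """Return the titles of the top_k songs most similar to the query vector."""
    scored = sorted(
        ((cosine_similarity(query, vector), title)
         for title, vector in library.items()),
        reverse=True,
    )
    return [title for _, title in scored[:top_k]]
```

For a text search the query vector would be the embedding of the text; for a similar-song search it would be the embedding of the reference song, so the same ranking function serves both features.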

We also documented what went well and what can be improved in the use of AI.

You can have a look at the result here:

Future work could focus on improving the performance and stability of the analysis.

References

- CLAP: The main model being researched;

- huggingface: Pre-trained models for CLAP;

- Free Music Archive: Creative Commons songs that can be used for testing;

Help Create A Chat Control Resistant Turnkey Chatmail/Deltachat Relay Stack - Rootless Podman Compose, OpenSUSE BCI, Hardened, & SELinux by 3nd5h1771fy

Description

The Mission: Decentralized & Sovereign Messaging

FYI: If you have never heard of "Chatmail", you can visit their site here, but simply put, it can be thought of as the underlying protocol/platform that decentralized messengers like DeltaChat use for their communications. Do not confuse it with the honeypot-looking, non-open-source, paid-for product with better SEO that directs you to chatmailsecure(dot)com

In an era of increasing centralized surveillance by unaccountable bad actors (aka BigTech), "Chat Control," and the erosion of digital privacy, the need for sovereign communication infrastructure is critical. Chatmail is a pioneering initiative that bridges the gap between classic email and modern instant messaging, offering metadata-minimized, end-to-end encrypted (E2EE) communication that is interoperable and open.

However, unless you are a seasoned sysadmin, the current recommended deployment method of a Chatmail relay is rigid, fragile, difficult to properly secure, and effectively takes over the entire host the "relay" is deployed on.

Why This Matters

A simple, host agnostic, reproducible deployment lowers the entry cost for anyone wanting to run a privacy‑preserving, decentralized messaging relay. In an era of perpetually resurrected chat‑control legislation threats, EU digital‑sovereignty drives, and many dangers of using big‑tech messaging platforms (Apple iMessage, WhatsApp, FB Messenger, Instagram, SMS, Google Messages, etc...) for any type of communication, providing an easy‑to‑use alternative empowers:

- Censorship resistance - No single entity controls the relay; operators can spin up new nodes quickly.

- Surveillance mitigation - End‑to‑end OpenPGP encryption ensures relay operators never see plaintext.

- Digital sovereignty - Communities can host their own infrastructure under local jurisdiction, aligning with national data‑policy goals.

By turning the Chatmail relay into a plug‑and‑play container stack, we enable broader adoption, foster a resilient messaging fabric, and give developers, activists, and hobbyists a concrete tool to defend privacy online.

Goals

As I indicated earlier, this project aims to drastically simplify the deployment of Chatmail relay. By converting this architecture into a portable, containerized stack using Podman and OpenSUSE base container images, we can allow anyone to deploy their own censorship-resistant, privacy-preserving communications node in minutes.

Our goal for Hack Week: package every component into containers built on openSUSE/MicroOS base images, initially orchestrated with a single container-compose.yml (podman-compose compatible). The stack will:

- Run on any host that supports Podman (including optimizations and enhancements for SELinux‑enabled systems).

- Allow network decoupling by refactoring configurations to move from file-system-constrained Unix sockets to internal TCP networking, allowing containers to achieve stricter isolation.

- Utilize enhanced security with SELinux: with purpose-built utilities such as udica we can quickly generate custom SELinux policies for the container stack, ensuring strict confinement superior to standard/typical Docker deployments.

- Allow the use of bind or remote mounted volumes for shared data (/var/vmail, DKIM keys, TLS certs, etc.).

- Replace the local DNS server requirement with a remote DNS‑provider API for DKIM/TXT record publishing.

By delivering a turnkey, host agnostic, reproducible deployment, we lower the barrier for individuals and small communities to launch their own chatmail relays, fostering a decentralized, censorship‑resistant messaging ecosystem that can serve DeltaChat users and/or future services adopting this protocol.

Resources

- The links included above

- https://chatmail.at/doc/relay/

- https://delta.chat/en/help

- Project repo -> https://codeberg.org/EndShittification/containerized-chatmail-relay

Improve chore and screen time doc generator script `wochenplaner` by gniebler

Description

I wrote a little Python script to generate PDF docs, which can be used to track daily chore completion and screen time usage for several people, with one page per person/week.

I named this script wochenplaner and have been using it for a few months now.

It needs some improvements and adjustments in how the screen time should be tracked and how chores are displayed.

Goals

- Fix chore field separation lines

- Change screen time tracking logic from "global" (week-long) to daily subtraction and weekly addition of remainders (more intuitive than the current "weekly time budget" method)

- Add logic to fill in chore fields/lines, ideally with pictures, falling back to text.
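One possible reading of the "daily subtraction and weekly addition of remainders" logic is sketched below: each day starts with a fixed budget, the time used is subtracted, and whatever is left over is summed at the end of the week. The function names, the minute unit, and the decision to clamp overuse at zero rather than carry a debt are all assumptions; the script may end up handling these differently.

```python
def daily_remainders(daily_budget: int, used_per_day: list[int]) -> list[int]:
    """Minutes left over on each day; overuse counts as 0 (an assumption)."""
    return [max(daily_budget - used, 0) for used in used_per_day]


def weekly_remainder(daily_budget: int, used_per_day: list[int]) -> int:
    """Sum of the daily leftovers, e.g. to grant as bonus time."""
    return sum(daily_remainders(daily_budget, used_per_day))


if __name__ == "__main__":
    week = [30, 60, 90, 0, 45, 60, 15]  # minutes used Monday..Sunday
    print(daily_remainders(60, week))
    print(weekly_remainder(60, week))
```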

Resources

tbd (Gitlab repo)

Improvements to osc (especially with regards to the Git workflow) by mcepl

Description

There is plenty of hacking on osc where we could spend some fun time. I would like to see a solution for https://github.com/openSUSE/osc/issues/2006 (which is sufficiently non-serious that it could be part of a Hack Week project).

Contribute to terraform-provider-libvirt by pinvernizzi

Description

The SUSE Manager (SUMA) teams' main tool for infrastructure automation, Sumaform, largely relies on terraform-provider-libvirt. That provider is also widely used by other teams, both inside and outside SUSE.

It would be good to help the maintainers of this project and give back to the community around it, after all the amazing work that has been already done.

If you're interested in any of infrastructure automation, Terraform, virtualization, tooling development, Go (...) it is also a good chance to learn a bit about them all by putting your hands on an interesting, real-use-case and complex project.

Goals

- Get more familiar with Terraform provider development and libvirt bindings in Go

- Solve some issues and/or implement some features

- Get in touch with the community around the project

Resources

- CONTRIBUTING readme

- Go libvirt library in use by the project

- Terraform plugin development

- "Good first issue" list

terraform-provider-feilong by e_bischoff

Project Description

People need to test operating systems and applications on the s390 platform. While this is straightforward with KVM, it is very difficult with z/VM.

IBM Cloud Infrastructure Center (ICIC) harnesses the Feilong API, but you can use Feilong without installing ICIC (see this schema).

What about writing a Terraform Feilong provider, just like we have the Terraform libvirt provider? That would allow you to transparently call Feilong from your main.tf files to deploy and destroy resources on your z/VM system.

Goal for Hackweek 23

I would like to be able to easily deploy and provision VMs automatically on a z/VM system, in a way that people might enjoy even outside of SUSE.

My technical preference is to write a Terraform provider plugin, as it is the approach that involves the fewest software components for our deployments, while remaining clean and compatible with our existing development infrastructure.

Goals for Hackweek 24

The Feilong provider works and is used internally by the SUSE Manager team. Let's push it forward!

Let's add support for Fibre Channel disks and multipath.

Goals for Hackweek 25

Modernization, maturity, and maintenance: support for SLES 16 and OpenTofu, new API calls, fixes...

Resources

Outcome

Rancher/k8s Trouble-Maker by tonyhansen

Project Description

When studying for my RHCSA, I found trouble-maker, which is a program that breaks a Linux OS and requires you to fix it. I want to create something similar for Rancher/k8s that can allow for troubleshooting an unknown environment.

Goals for Hackweek 25

- Update to modern Rancher and verify that existing tests still work

- Change testing logic to populate secrets instead of requiring a secondary script

- Add new tests

Goals for Hackweek 24 (Complete)

- Create a basic framework for creating Rancher/k8s cluster lab environments as needed for the Break/Fix

- Create at least 5 modules that can be applied to the cluster and require troubleshooting

Resources

- https://github.com/celidon/rancher-troublemaker

- https://github.com/rancher/terraform-provider-rancher2

- https://github.com/rancher/tf-rancher-up

- https://github.com/rancher/quickstart

Move Uyuni Test Framework from Selenium to Playwright + AI by oscar-barrios

Description

This project aims to migrate the existing Uyuni Test Framework from Selenium to Playwright. The move will improve the stability, speed, and maintainability of our end-to-end tests by leveraging Playwright's modern features. We'll be rewriting the current Selenium code in Ruby to Playwright code in TypeScript, which includes updating the test framework runner, step definitions, and configurations. This is also necessary because we're moving from Cucumber Ruby to CucumberJS.

If you're still curious about the AI in the title, it was just a way to grab your attention. Thanks for your understanding.

Nah, let's be honest: AI helped a lot to vibe code a good part of the Ruby methods of the test framework, moving them to TypeScript, along with the migration from Capybara to Playwright. I've been using "Cline" as a plugin for the WebStorm IDE, with the Gemini API behind it.

Goals

- Migrate Core tests including Onboarding of clients

- Improve test reliability: Measure and confirm a significant reduction of flakiness.

- Implement a robust framework: Establish a well-structured and reusable Playwright test framework using CucumberJS.

Resources

- Existing Uyuni Test Framework (Cucumber Ruby + Capybara + Selenium)

- My Template for CucumberJS + Playwright in TypeScript

- Started Hackweek Project

Create a page with all devel:languages:perl packages and their versions by tinita

Description

Perl projects now live in git: https://src.opensuse.org/perl

It would be useful to have an easy way to check which version of which perl module is in devel:languages:perl. We also have meta overrides and patches for various modules, and it would be good to have them in a central place, so they are easier to look up and we can share them with other vendors.

I did some initial data dump here a while ago: https://github.com/perlpunk/cpan-meta

But I never had the time to automate this.

I can also use the data to check if there are necessary updates (currently it uses data from download.opensuse.org, so there is some delay and it depends on building).

Goals

- Have a script that updates a central repository (e.g. https://src.opensuse.org/perl/_metadata) with metadata by looking at https://src.opensuse.org/perl/_ObsPrj (check if there are any changes from the last run)

- Create an HTML page with the list of packages (use JavaScript and some table library to make it easily searchable)

Resources

Results

Day 1

- First part of the code which retrieves data from https://src.opensuse.org/perl/_ObsPrj with submodules and creates a YAML and a JSON file.

- Repo: https://github.com/perlpunk/opensuse-perl-meta

- Also a first version of the HTML is live: https://perlpunk.github.io/opensuse-perl-meta/

Day 2

- The HTML page now has links to src.opensuse.org and the date of the last update, plus a short info at the top

- Code is now 100% covered by tests: https://app.codecov.io/gh/perlpunk/opensuse-perl-meta

- I used the modern perl class feature, which makes perl classes even nicer and shorter. See example

- Tests

- I tried out the mocking feature of the modern Test2::V0 library which provides call tracking. See example

- I tried out comparing data structures with the new Test2::V0 library. It lets you compare parts of the structure with the like function, which only compares the data that is mentioned in the expected data. See example

Day 3

- Added various things to the table

- Dependencies column

- Show popup with info for cpanspec, patches and dependencies

- Added last date / commit to the data export.

Plan: With the added date / commit we can now daily check _ObsPrj for changes and only fetch the data for changed packages.
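The incremental check described in this plan could be sketched roughly as follows. This is only an illustration under stated assumptions: it supposes that each data export records one commit hash per package in a plain mapping. The function name `changed_packages`, the package names and the hashes are all hypothetical and not taken from the actual repository.

```python
def changed_packages(previous: dict[str, str], current: dict[str, str]) -> list[str]:
    """Return packages that are new or whose commit differs from the last run."""
    return sorted(
        name
        for name, commit in current.items()
        if previous.get(name) != commit
    )


# Hypothetical data: package name -> commit hash recorded at export time.
last_run = {"perl-Foo": "a1b2c3", "perl-Bar": "d4e5f6"}
this_run = {"perl-Foo": "a1b2c3", "perl-Bar": "0f9e8d", "perl-Baz": "777aaa"}

to_fetch = changed_packages(last_run, this_run)
print(to_fetch)  # ['perl-Bar', 'perl-Baz']
```

Only the packages in `to_fetch` would then need their metadata fetched again. Note that this sketch does not report packages that were removed since the last run; that would need a separate comparison.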

Day 4

Switch software-o-o to store repomd in a database by hennevogel

Description

The openSUSE Software portal is a web app to explore binary packages of openSUSE distributions. Kind of like a package manager / app store.

https://software.opensuse.org/

This app has been around forever (August 2007) and its architecture is a bit brittle. It acts as a frontend to the OBS distributions and published binary search APIs, calculates and caches a lot of stuff in memory and needs code changes nearly every openSUSE release to keep up.

As you can imagine, it's a heavy user of the OBS API, especially when caches are cold.

Goals

I want to change the app to cache repomd data in a (PostgreSQL) database structure:

- Distributions have many Repositories

- Repositories have many Packages

- Packages have many Patches
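The one-to-many chain above can be illustrated with a small, language-neutral sketch. It is written in Python with dataclasses purely for illustration; it is not the app's actual model code, and the field names, the `find_packages` helper and the sample data are all assumptions.

```python
from dataclasses import dataclass, field


@dataclass
class Patch:
    name: str


@dataclass
class Package:
    name: str
    patches: list[Patch] = field(default_factory=list)


@dataclass
class Repository:
    name: str
    packages: list[Package] = field(default_factory=list)


@dataclass
class Distribution:
    name: str
    repositories: list[Repository] = field(default_factory=list)

    def find_packages(self, term: str) -> list[tuple[str, str]]:
        """Search every repository of this distribution by package name."""
        return [
            (repo.name, pkg.name)
            for repo in self.repositories
            for pkg in repo.packages
            if term in pkg.name
        ]


# Hypothetical sample data.
leap = Distribution("Leap 16.0", [
    Repository("oss", [Package("vim", [Patch("fix-build.patch")]), Package("neovim")]),
    Repository("non-oss", [Package("some-codec")]),
])
hits = leap.find_packages("vim")
print(hits)  # [('oss', 'vim'), ('oss', 'neovim')]
```

With the packages held locally like this, a search inside a distribution never has to touch the OBS API.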

The UI workflows will be as follows:

- As an admin I set up a Distribution and its Repositories

- As an admin I sync all repositories' repomd files into the database

- As a user I browse a Distribution by category

- As a user I search for a Package of a Distribution in its Repositories

- As a user I extend the search to Packages built on OBS for this Distribution

This has a couple of pros:

- Less traffic on the OBS API as the usual Packages are inside the database

- Easier base to add features to this page, like comments, ratings, openSUSE-specific screenshots etc.

- Separating the Distribution package search from searching through OBS will hopefully make it clearer for newbies that enabling extra repositories is kind of dangerous.

And one con:

- You can't search for packages built for foreign distributions with this app anymore (although we could consume their repomd etc., but I doubt we have the audience on an opensuse.org domain...)

TODO

- Introduce a PG database

- Add clockworkd as scheduler and delayed_job as ActiveJob backend

- Introduce ActiveStorage

- Build initial data model

- Introduce repomd to database sync

- Adapt repomd sync to Leap 16.0 repomd layout changes (single arch, no update repo)

- Make repomd sync idempotent

- Introduce database search

- Setup foreman to run rails s and rake jobs:workoff

- Adapt UI

- Build Category Browsing

- Build Admin Distribution CRUD interface
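One item in the TODO list above, making the repomd sync idempotent, can be illustrated with a minimal sketch. It assumes a simplified in-memory store keyed by repository and package name; the function name `sync_packages`, the store layout and the sample versions are hypothetical and say nothing about how the app actually implements its sync.

```python
def sync_packages(store: dict[tuple[str, str], str], repo: str, repomd: dict[str, str]) -> None:
    """Make the store match the repository metadata; re-running changes nothing."""
    # Upsert: insert new packages and overwrite versions of known ones.
    for name, version in repomd.items():
        store[(repo, name)] = version
    # Drop packages of this repository that vanished from the metadata.
    stale = [key for key in store if key[0] == repo and key[1] not in repomd]
    for key in stale:
        del store[key]


store: dict[tuple[str, str], str] = {}
metadata = {"vim": "9.1", "zsh": "5.9"}  # hypothetical package -> version

sync_packages(store, "oss", metadata)
first = dict(store)
sync_packages(store, "oss", metadata)  # second run with identical input
same_after_rerun = store == first
print(same_after_rerun)  # True
```

Because every write is keyed by (repository, package), a repeated or interrupted run can simply be started again without creating duplicate rows.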

Try out Neovim Plugins supporting AI Providers by enavarro_suse

Description

Experiment with several Neovim plugins that integrate AI model providers such as Gemini and Ollama.

Goals

Evaluate how these plugins enhance the development workflow, how they differ in capabilities, and how smoothly they integrate into Neovim for day-to-day coding tasks.

Resources

- Neovim 0.11.5

- AI-enabled Neovim plugins:

- avante.nvim: https://github.com/yetone/avante.nvim

- Gp.nvim: https://github.com/Robitx/gp.nvim

- parrot.nvim: https://github.com/frankroeder/parrot.nvim

- gemini.nvim: https://dotfyle.com/plugins/kiddos/gemini.nvim

- ...

- Accounts or API keys for AI model providers.

- Local model serving setup (e.g., Ollama)

- Test projects or codebases for practical evaluation:

- OBS: https://build.opensuse.org/

- OBS blog and landing page: https://openbuildservice.org/

- ...

Improvements to osc (especially with regards to the Git workflow) by mcepl

Description

There is plenty of hacking to do on osc, where we could spend some fun time. I would like to see a solution for https://github.com/openSUSE/osc/issues/2006 (which is sufficiently non-serious that it could be part of a HackWeek project).

Improve GNOME user documentation infrastructure and content by pkovar

Description

The GNOME user documentation infrastructure has been recently upgraded with a new site running at help.gnome.org. This is an ongoing project with a number of outstanding major issues to be resolved. When these issues are addressed, it will benefit both the upstream community and downstream projects and products consuming the GNOME user docs, including openSUSE.

Goals

Address primarily infrastructure-related issues filed for https://gitlab.gnome.org/Teams/Websites/help.gnome.org/, https://github.com/projectmallard and https://github.com/itstool/itstool projects. Work on contributor guides ported from https://wiki.gnome.org/DocumentationProject.html.

Resources

- https://gitlab.gnome.org/Teams/Websites/help.gnome.org/

- https://github.com/projectmallard/pintail

- https://github.com/projectmallard/projectmallard.org

- https://github.com/itstool/itstool

Docs Navigator MCP: SUSE Edition by mackenzie.techdocs

Description