Description

Uyuni currently has no high-availability (HA) setup. The idea is to explore setting up a read-only shadow instance of a Uyuni server and make it as useful as possible.

Possible things to look at:

- live sync of the database, probably using the WAL. Some of the tables may have to be skipped or some features disabled on the RO instance (taskomatic, PXT sessions…)

- Can we use a load balancer that routes read-only requests to either instance and all other requests to the RW one? For example, packages and PXE data can be served by both, as can API GET requests. Everything else would go to the RW instance.
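
The routing rule from the last bullet can be sketched as a small classifier. This is only an illustration under assumptions: the path prefixes `/packages/`, `/pxe/` and `/api/` are hypothetical placeholders, not the real Uyuni URL layout, and the backend labels `"any"` and `"rw"` are made up for the example.

```python
from dataclasses import dataclass

# Hypothetical path prefixes, used only for illustration; the real Uyuni
# URL layout would have to be checked before writing load balancer rules.
STATIC_PREFIXES = ("/packages/", "/pxe/")
API_PREFIX = "/api/"


@dataclass(frozen=True)
class Request:
    method: str
    path: str


def choose_backend(request: Request) -> str:
    """Return "any" if either instance may serve the request, else "rw"."""
    method = request.method.upper()
    # Package and PXE downloads can be served by both instances.
    if method in ("GET", "HEAD") and request.path.startswith(STATIC_PREFIXES):
        return "any"
    # API GET requests are assumed to be read-only.
    if method == "GET" and request.path.startswith(API_PREFIX):
        return "any"
    # Everything else goes to the read-write instance.
    return "rw"
```

A real setup would express the same decision in the load balancer's own configuration; the function only makes the intended split explicit so it can be discussed and tested.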

Goals

- Prepare a document explaining how to do it.

- PR with the needed code changes to support it

This project is part of:

Hack Week 25

Activity

Comments

2 months ago by epenchev

Hi, I think there are a few solutions that might help.

Since I deal a lot with HA and databases, I would like to share my thoughts.

One possible solution would be to go with pgpool-II for scaling PostgreSQL: master-replica load balancing and automatic failover.

This approach is described in detail at https://medium.com/@deysouvik700/scaling-postgresql-with-pgpool-ii-master-replica-load-balancing-and-automatic-failover-091983d4dd9a. In the example architecture, the PgPool proxy itself is a single point of failure. The example setup could be extended by adding a second proxy instance, with both proxy instances managed by keepalived and a virtual IP. Of course, there are other resources you can refer to as well.

Another possible solution, which is more automated, would be to go with cnpg. However, this requires a K8s cluster for your stateful PostgreSQL workload, so ideally you would need an HA K8s cluster of at least 3 nodes. This is the minimal setup, and all 3 nodes should have both control plane and worker roles; otherwise the standard setup goes up to 5 nodes (control planes and additional worker nodes). With cnpg you can create multiple services (rw, ro, r) within your cluster and point clients to them: https://cloudnative-pg.io/documentation/1.27/service_management/ and https://cloudnative-pg.io/documentation/1.27/architecture/.

Something more experimental that I have been working on recently, and that I hope will be much easier to operate, is https://github.com/kqlite/kqlite. It is SQLite over the PostgreSQL wire protocol, with support for replication and clustering. However, this limits the database functionality to what SQLite offers: PostgreSQL-specific features and data types will not work with kqlite.

P.S. Also there is plenty of documentation on going with the standard approach patroni + HAProxy + etcd.

2 months ago by cbosdonnat

The first issue will be the replication of the DB itself. Since we have sequences and those are not logically replicated, we will have to check the possible options there.

Similar Projects

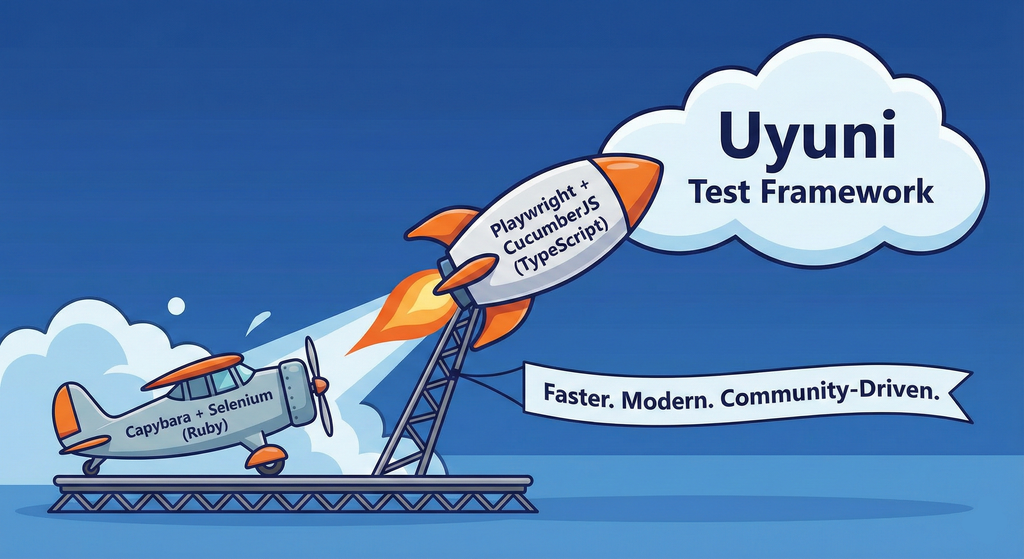

Move Uyuni Test Framework from Selenium to Playwright + AI by oscar-barrios

Description

This project aims to migrate the existing Uyuni Test Framework from Selenium to Playwright. The move will improve the stability, speed, and maintainability of our end-to-end tests by leveraging Playwright's modern features. We'll be rewriting the current Selenium code in Ruby to Playwright code in TypeScript, which includes updating the test framework runner, step definitions, and configurations. This is also necessary because we're moving from Cucumber Ruby to CucumberJS.

If you're still curious about the AI in the title, it was just a way to grab your attention. Thanks for your understanding.

Nah, let's be honest: AI helped a lot to vibe code a good part of the Ruby methods of the test framework, moving them to TypeScript, along with the migration from Capybara to Playwright. I've been using "Cline" as a plugin for the WebStorm IDE, with the Gemini API behind it.

Goals

- Migrate Core tests including Onboarding of clients

- Improve test reliability: Measure and confirm a significant reduction of flakiness.

- Implement a robust framework: Establish a well-structured and reusable Playwright test framework using CucumberJS.

Resources

- Existing Uyuni Test Framework (Cucumber Ruby + Capybara + Selenium)

- My Template for CucumberJS + Playwright in TypeScript

- Started Hackweek Project

mgr-ansible-ssh - Intelligent, Lightweight CLI for Distributed Remote Execution by deve5h

Description

By the end of Hack Week, the target will be to deliver a minimal functional version 1 (MVP) of a custom command-line tool named mgr-ansible-ssh (a unified wrapper for BOTH ad-hoc shell & playbooks) that allows operators to:

- Execute arbitrary shell commands on thousands of remote machines simultaneously using Ansible Runner, with artifacts saved locally.

- Pass runtime options such as inventory file, remote command string or playbook execution, parallel forks, limits, dry-run mode, or no-std-ansible-output.

- Leverage existing SSH trust relationships without additional setup.

- Provide a clean, intuitive CLI interface with --help for ease of use. It should provide consistent UX & CI-friendly interface.

- Establish a foundation that can later be extended with advanced features such as logging, grouping, interactive shell mode, safe-command checks, and parallel execution tuning.

The MVP should enable day-to-day operations to efficiently target thousands of machines with a single, consistent interface.

Goals

Primary Goals (MVP):

Build a functional CLI tool (mgr-ansible-ssh) capable of executing shell commands on multiple remote hosts using Ansible Runner. Test the tool across a large distributed environment (1000+ machines) to validate its performance and reliability.

We are looking forward to significantly reducing the zypper deployment time across all 351 RMT VM servers in our MLM cluster by eliminating the dependency on the taskomatic service, bringing execution down to a fraction of the current duration. The tool should also support multiple runtime flags, such as:

    mgr-ansible-ssh: Remote command execution wrapper using Ansible Runner

    Usage: mgr-ansible-ssh [--help] [--version] [--inventory INVENTORY]
                           [--run RUN] [--playbook PLAYBOOK] [--limit LIMIT]
                           [--forks FORKS] [--dry-run] [--no-ansible-output]

    Required Arguments
      --inventory, -i       Path to Ansible inventory file to use

    Any One of the Arguments Is Required
      --run, -r             Execute the specified shell command on target hosts
      --playbook, -p        Execute the specified Ansible playbook on target hosts

    Optional Arguments
      --help, -h            Show the help message and exit
      --version, -v         Show the version and exit
      --limit, -l           Limit execution to specific hosts or groups
      --forks, -f           Number of parallel Ansible forks
      --dry-run             Run in Ansible check mode (requires -p or --playbook)
      --no-ansible-output   Suppress Ansible stdout output
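
The flags listed above map fairly directly onto `argparse`. The following is a minimal sketch of the argument handling only, not the tool's actual code: the version string `0.1.0` is a placeholder, treating `--run` and `--playbook` as mutually exclusive is an assumption (the help text only says one of them is required), and nothing here calls Ansible Runner.

```python
import argparse


def build_parser() -> argparse.ArgumentParser:
    parser = argparse.ArgumentParser(
        prog="mgr-ansible-ssh",
        description="Remote command execution wrapper using Ansible Runner",
    )
    # Placeholder version string, not the tool's real version.
    parser.add_argument("--version", "-v", action="version",
                        version="%(prog)s 0.1.0")
    parser.add_argument("--inventory", "-i", required=True,
                        help="Path to Ansible inventory file to use")
    # Assumption: exactly one of --run / --playbook must be given.
    action = parser.add_mutually_exclusive_group(required=True)
    action.add_argument("--run", "-r",
                        help="Execute the specified shell command on target hosts")
    action.add_argument("--playbook", "-p",
                        help="Execute the specified Ansible playbook on target hosts")
    parser.add_argument("--limit", "-l",
                        help="Limit execution to specific hosts or groups")
    parser.add_argument("--forks", "-f", type=int,
                        help="Number of parallel Ansible forks")
    parser.add_argument("--dry-run", action="store_true",
                        help="Run in Ansible check mode (requires --playbook)")
    parser.add_argument("--no-ansible-output", action="store_true",
                        help="Suppress Ansible stdout output")
    return parser


def parse_args(argv=None) -> argparse.Namespace:
    parser = build_parser()
    args = parser.parse_args(argv)
    # --dry-run only makes sense together with a playbook.
    if args.dry_run and not args.playbook:
        parser.error("--dry-run requires --playbook")
    return args
```

For example, `parse_args(["-i", "hosts.ini", "-r", "uptime", "-f", "50"])` yields a namespace with `inventory`, `run` and `forks` set, while combining `--dry-run` with `--run` exits with a usage error.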

Secondary/Stretch Goals (if time permits):

- Add pretty output formatting (success/failure summary per host).

- Implement basic logging of executed commands and results.

- Introduce safety checks for risky commands (shutdown, rm -rf, etc.).

- Package the tool so it can be installed with pip or stored internally.

Resources

Collaboration is welcome from anyone interested in CLI tooling, automation, or distributed systems. Skills that would be particularly valuable include:

- Python, especially around CLI development (argparse, click, rich)

Uyuni Health-check Grafana AI Troubleshooter by ygutierrez

Description

This project explores the feasibility of using the open-source Grafana LLM plugin to enhance the Uyuni Health-check tool with LLM capabilities. The idea is to integrate a chat-based "AI Troubleshooter" directly into existing dashboards, allowing users to ask natural-language questions about errors, anomalies, or performance issues.

Goals

- Investigate if and how the grafana-llm-app plug-in can be used within the Uyuni Health-check tool.

- Investigate if this plug-in can be used to query LLMs for troubleshooting scenarios.

- Evaluate support for local LLMs and external APIs through the plugin.

- Evaluate if and how the Uyuni MCP server could be integrated as another source of information.

Resources

Ansible to Salt integration by vizhestkov

Description

We already have initial integration of Ansible in Salt with the possibility to run playbooks from the salt-master on the salt-minion used as an Ansible Control node.

In this project I want to check whether it is possible to make Ansible work over the Salt transport: basically, run Ansible playbooks through the existing, established Salt (ZeroMQ) transport without using ssh at all.

It could be a good solution for end users who want to reuse Ansible playbooks, or run the Ansible modules they are used to, on existing Salt (or Uyuni/SUSE Multi Linux Manager) infrastructure without complex configuration effort.

Goals

- [v] Prepare the testing environment with Salt and Ansible installed

- [v] Discover Ansible codebase to figure out possible ways of integration

- [v] Create Salt/Uyuni inventory module

- [v] Make basic modules work without a separate ssh connection, reusing the existing Salt connection

- [v] Test some most basic playbooks

Resources

Testing and adding GNU/Linux distributions on Uyuni by juliogonzalezgil

Join the Gitter channel! https://gitter.im/uyuni-project/hackweek

Uyuni is a configuration and infrastructure management tool that saves you time and headaches when you have to manage and update tens, hundreds or even thousands of machines. It also manages configuration, can run audits, build image containers, monitor and much more!

Currently there are a few distributions that are completely untested on Uyuni or SUSE Manager (AFAIK), or that have not been tested for a long time. It would be interesting to know how hard it is to work with them and, if possible, fix whatever is broken.

For newcomers, the easiest distributions are those based on DEB or RPM packages. Distributions with other package formats are doable, but will require adapting the Python and Java code to be able to sync and analyze such packages (and if salt does not support those packages, it will need changes as well). So if you want a distribution with other packages, make sure you are comfortable handling such changes.

No developer experience? No worries! We had non-developers contributors in the past, and we are ready to help as long as you are willing to learn. If you don't want to code at all, you can also help us preparing the documentation after someone else has the initial code ready, or you could also help with testing :-)

The idea is testing Salt (including bootstrapping with bootstrap script) and Salt-ssh clients

To consider that a distribution has basic support, we should cover at least (points 3-6 are to be tested for both salt minions and salt ssh minions):

- Reposync (this will require using spacewalk-common-channels and adding channels to the .ini file)

- Onboarding (salt minion from UI, salt minion from bootstrap script, and salt-ssh minion) (this will probably require adding OS to the bootstrap repository creator)

- Package management (install, remove, update...)

- Patching

- Applying any basic salt state (including a formula)

- Salt remote commands

- Bonus point: Java part for product identification, and monitoring enablement

- Bonus point: sumaform enablement (https://github.com/uyuni-project/sumaform)

- Bonus point: Documentation (https://github.com/uyuni-project/uyuni-docs)

- Bonus point: testsuite enablement (https://github.com/uyuni-project/uyuni/tree/master/testsuite)

If something is breaking: we can try to fix it, but the main idea is research how supported it is right now. Beyond that it's up to each project member how much to hack :-)

- If you don't have knowledge about some of the steps: ask the team

- If you still don't know what to do: switch to another distribution and keep testing.

This card is for EVERYONE, not just developers. Seriously! We had people from other teams helping that were not developers, and added support for Debian and new SUSE Linux Enterprise and openSUSE Leap versions :-)

In progress/done for Hack Week 25

Guide

We started writing a Guide: Adding a new client GNU Linux distribution to Uyuni at https://github.com/uyuni-project/uyuni/wiki/Guide:-Adding-a-new-client-GNU-Linux-distribution-to-Uyuni, to make things easier for everyone, especially those not too familiar with Uyuni or not technical.

openSUSE Leap 16.0

The distribution we all love!

https://en.opensuse.org/openSUSE:Roadmap#DRAFTScheduleforLeap16.0

Current Status: We started last year, and it's complete now for Hack Week 25! :-D

- [W] Reposync (this will require using spacewalk-common-channels and adding channels to the .ini file). NOTE: Done; client tools for SLMicro6 are used, as those for SLE16.0/openSUSE Leap 16.0 are not available yet.

- [W] Onboarding (salt minion from UI, salt minion from bootstrap script, and salt-ssh minion) (this will probably require adding OS to the bootstrap repository creator)

- [W] Package management (install, remove, update...). Works, even reboot requirement detection.

GRIT: GRaphs In Time by fvanlankvelt

Description

The current implementation of the Time-Travelling Topology database, StackGraph, has served SUSE Observability well over the years. But it is dependent on a number of complex components - Zookeeper, HDFS, HBase, Tephra. These lead to a large number of failure scenarios and parameters to tweak for optimal performance.

The goal of this project is to take the high-level requirements (time-travelling topology, querying over time, transactional changes to topology, scalability) and design/prototype key components, to see where they would lead us if we were to start from scratch today.

An example would be to use RocksDB to persist topology history. Its user-defined timestamps seem to match well with time-travelling, and it has transaction support with fine-grained conflict detection.
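
To make the time-travelling idea concrete, here is a toy in-memory sketch of timestamped reads: every write keeps its timestamp, and a read returns the newest version at or before the requested time. It is an illustration only and does not use or mirror the RocksDB API; the class name `VersionedStore` and the key format are invented for the example.

```python
import bisect


class VersionedStore:
    """Toy store that keeps every version of a key, ordered by timestamp."""

    def __init__(self):
        self._timestamps = {}  # key -> sorted list of write timestamps
        self._values = {}      # key -> values, aligned with _timestamps

    def put(self, key, value, ts):
        """Record that `key` had `value` starting at time `ts`."""
        timestamps = self._timestamps.setdefault(key, [])
        values = self._values.setdefault(key, [])
        idx = bisect.bisect_right(timestamps, ts)
        timestamps.insert(idx, ts)
        values.insert(idx, value)

    def get(self, key, ts):
        """Return the value visible at time `ts`, or None if none existed yet."""
        timestamps = self._timestamps.get(key)
        if not timestamps:
            return None
        idx = bisect.bisect_right(timestamps, ts)
        if idx == 0:
            return None
        return self._values[key][idx - 1]


store = VersionedStore()
store.put("edge:a-b", "up", ts=10)
store.put("edge:a-b", "down", ts=20)
state_at_15 = store.get("edge:a-b", ts=15)  # the version written at ts=10
```

A persistent engine would keep the same (key, timestamp) ordering on disk, which is what makes a "read as of time T" query a simple ordered lookup.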

Goals

Determine the feasibility of implementing the model on a whole new architecture. See how to model the graph and its history such that updates and querying are performant and transactional conflicts are minimized. Build a prototype to validate the model.

Resources

Backend developers, preferably experienced in distributed systems. Programming language: Scala 3 with some C++ for low-level work.

Progress

The project has started at github GRaphs In Time - a C++ project that

- embeds RocksDB for persistence,

- uses (nu)Raft for replication/consensus,

- supports large transactions, with

- SNAPSHOT isolation

- time-travel

- graph operations (add/remove vertex/edge, indexes)

Casky – Lightweight C Key-Value Engine with Crash Recovery by pperego

Description

Casky is a lightweight, crash-safe key-value store written in C, designed for fast storage and retrieval of data with a minimal footprint. Built using Test-Driven Development (TDD), Casky ensures reliability while keeping the codebase clean and maintainable. It is inspired by Bitcask and aims to provide a simple, embeddable storage engine that can be integrated into microservices, IoT devices, and other C-based applications.

Objectives:

- Implement a minimal key-value store with append-only file storage.

- Support crash-safe persistence and recovery.

- Expose a simple public API: store(key, value), load(key), delete(key).

- Follow TDD methodology for robust and testable code.

- Provide a foundation for future extensions, such as in-memory caching, compaction, and eventual integration with vector-based databases like PixelDB.
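
The append-only design with crash recovery can be illustrated with a short Bitcask-style sketch. It is written in Python for brevity and is not Casky's implementation: the record layout (two 32-bit lengths, then key and value), the tombstone marker and the class name `AppendOnlyStore` are assumptions made for the example; only the method names `store`, `load` and `delete` come from the objectives above.

```python
import os
import struct

HEADER = struct.Struct("<II")  # key length, value length (assumed layout)
TOMBSTONE = 0xFFFFFFFF         # value length that marks a deleted key


class AppendOnlyStore:
    def __init__(self, path):
        self.path = path
        self.keydir = {}  # key -> (value offset, value length)
        open(path, "ab").close()     # create the log file if it is missing
        self._end = self._recover()  # rebuild the index from the log
        self._file = open(path, "ab")

    def _recover(self):
        """Replay the log; drop a torn record left at the tail by a crash."""
        good_end = 0
        with open(self.path, "r+b") as f:
            while True:
                header = f.read(HEADER.size)
                if len(header) < HEADER.size:
                    break
                key_len, value_len = HEADER.unpack(header)
                key = f.read(key_len)
                if len(key) < key_len:
                    break
                if value_len == TOMBSTONE:
                    self.keydir.pop(key, None)
                else:
                    offset = f.tell()
                    if len(f.read(value_len)) < value_len:
                        break
                    self.keydir[key] = (offset, value_len)
                good_end = f.tell()
            f.truncate(good_end)
        return good_end

    def _append(self, record):
        start = self._end
        self._file.write(record)
        self._file.flush()
        os.fsync(self._file.fileno())  # make the record durable
        self._end += len(record)
        return start

    def store(self, key, value):
        start = self._append(HEADER.pack(len(key), len(value)) + key + value)
        self.keydir[key] = (start + HEADER.size + len(key), len(value))

    def load(self, key):
        entry = self.keydir.get(key)
        if entry is None:
            return None
        offset, length = entry
        with open(self.path, "rb") as f:
            f.seek(offset)
            return f.read(length)

    def delete(self, key):
        if key in self.keydir:
            self._append(HEADER.pack(len(key), TOMBSTONE) + key)
            del self.keydir[key]

    def close(self):
        self._file.close()
```

Keys and values are `bytes`. Old versions of a key stay in the file until a compaction step rewrites it, which is why compaction appears among the optional goals.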

Why This Project is Interesting:

Casky combines low-level C programming with modern database concepts, making it an ideal playground to explore storage engines, crash safety, and performance optimization. It’s small enough to complete during Hackweek, yet it provides a solid base for future experiments and more complex projects.

Goals

- Working prototype with append-only storage and memtable.

- TDD test suite covering core functionality and recovery.

- Demonstration of basic operations: insert, load, delete.

- Optional bonus: LRU caching, file compaction, performance benchmarks.

Future Directions:

After Hackweek, Casky can evolve into a backend engine for projects like PixelDB, supporting vector storage and approximate nearest neighbor search, combining low-level performance with cutting-edge AI retrieval applications.

Resources

- The Bitcask paper: https://riak.com/assets/bitcask-intro.pdf

- The Casky repository: https://github.com/thesp0nge/casky

Day 1

[0.10.0] - 2025-12-01

Added

- Core in-memory KeyDir and EntryNode structures

- API functions: casky_open, casky_close, casky_put, casky_get, casky_delete

- Hash function: casky_djb2_hash_xor

- Error handling via casky_errno

- Unit tests for all APIs using standard asserts

- Test cleanup of temporary files
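
The hash function named in the changelog suggests the XOR variant of djb2. For readers unfamiliar with it, a minimal Python sketch of that well-known variant follows. Whether Casky uses the same seed and a 32-bit width is an assumption; the function name `djb2_hash_xor` is chosen for this example only.

```python
def djb2_hash_xor(data: bytes) -> int:
    """djb2, XOR variant: hash = (hash * 33) ^ byte, truncated to 32 bits."""
    h = 5381  # conventional djb2 seed
    for byte in data:
        # The 32-bit mask is an assumption mimicking an unsigned 32-bit C integer.
        h = ((h * 33) ^ byte) & 0xFFFFFFFF
    return h


# A key directory would typically pick a bucket from the hash value:
bucket = djb2_hash_xor(b"user:42") % 1024
```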

Changed

- None (first MVP)

Fixed

- None (first MVP)

Day 2

[0.20.0] - 2025-12-02

Work on kqlite (Lightweight remote SQLite with high availability and auto failover). by epenchev

Description

Continue the work on kqlite (Lightweight remote SQLite with high availability and auto failover).

It's a solution for applications that require High Availability but don't need all the features of a complete RDBMS and can fit SQLite in their use case.

kqlite can also be considered as a lightweight storage backend for K8s (https://docs.k3s.io/datastore) and the Edge, allowing HA with only 2 nodes.

Goals

Push kqlite to a beta version.

kqlite as library for Go programs.

Resources

https://github.com/kqlite/kqlite

Collection and organisation of information about Bulgarian schools by iivanov

Description

To achieve this it will be necessary:

- Collect/download raw data from various government and non-governmental organizations

- Clean up raw data and organise it in some kind of database.

- Create a tool to make queries easy.

- Or perhaps dump all data into AI and ask questions in natural language.

Goals

When a particular school is selected, information like the following will be provided:

- School scores on national exams.

- School scores from the external evaluations exams.

- School town, municipality and region.

- Employment rate in a town or municipality.

- Average health of the population in the region.

Resources

Some of these are available only in Bulgarian.

- https://danybon.com/klasazia

- https://nvoresults.com/index.html

- https://ri.mon.bg/active-institutions

- https://www.nsi.bg/nrnm/ekatte/archive

Results

- Information about all Bulgarian schools with their scores during recent years cleaned and organised into SQL tables

- Information about all Bulgarian villages, cities, municipalities and districts cleaned and organised into SQL tables

- Census information for all Bulgarian villages and cities since the beginning of this century, cleaned and organised into SQL tables.

- Information about religion and ethnicity in all Bulgarian municipalities, cleaned and organised into SQL tables.

- Data successfully loaded into a locally running Ollama with the help of Vanna.AI

- Seems to be usable.

TODO

- Add more statistical information about municipalities and ....

Code and data

Port the classic browser game HackTheNet to PHP 8 by dgedon

Description

The classic browser game HackTheNet from 2004 still runs on PHP 4/5 and MySQL 5 and needs a port to PHP 8 and e.g. MariaDB.

Goals

- Port the game to PHP 8 and MariaDB 11

- Create a container where the game server can simply be started/stopped

Resources

- https://github.com/nodeg/hackthenet