an invention by terezacerna

Description

This project aims to explore the popularity and developer sentiment around SUSE and its technologies compared to Red Hat and their technologies. Using publicly available data sources, I will analyze search trends, developer preferences, repository activity, and media presence. The final outcome will be an interactive Power BI dashboard that provides insights into how SUSE is perceived and discussed across the web and among developers.

Goals

- Assess the popularity of SUSE products and brand compared to Red Hat using Google Trends.

- Analyze developer satisfaction and usage trends from the Stack Overflow Developer Survey.

- Use the GitHub API to compare SUSE and Red Hat repositories in terms of stars, forks, contributors, and issue activity.

- Perform sentiment analysis on GitHub issue comments to measure community tone and engagement using built-in Copilot capabilities.

- Perform sentiment analysis on Reddit comments related to SUSE technologies using built-in Copilot capabilities.

- Use Gnews.io to track and compare the volume of news articles mentioning SUSE and Red Hat technologies.

- Test the integration of Copilot (AI) within Power BI for enhanced data analysis and visualization.

- Deliver a comprehensive Power BI report summarizing findings and insights.

- Test the full potential of Power BI, including its AI features and natural language Q&A.

Resources

- Google Trends: Web scraping for search popularity data

- Stack Overflow Developer Survey: For technology popularity and satisfaction comparison

- GitHub API: For repository data (stars, forks, contributors, issues, comments).

- Gnews.io API: For article volume and mentions analysis.

- Reddit: SUSE-related topics with comments.
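The repository comparison listed in the goals could be sketched roughly as follows. This assumes the repository objects were already fetched from the GitHub REST API and uses its `stargazers_count`, `forks_count`, `open_issues_count`, and `language` fields; the function name and the sample records are made up for illustration and are not the project's data.

```python
from collections import Counter
from typing import Iterable


def summarize_repos(repos: Iterable[dict]) -> dict:
    """Aggregate headline metrics over GitHub repository objects."""
    repos = list(repos)
    # Repositories without a detected language report None; skip those.
    languages = Counter(r["language"] for r in repos if r.get("language"))
    return {
        "repo_count": len(repos),
        "stars": sum(r.get("stargazers_count", 0) for r in repos),
        "forks": sum(r.get("forks_count", 0) for r in repos),
        # GitHub counts open pull requests in open_issues_count as well.
        "open_issues": sum(r.get("open_issues_count", 0) for r in repos),
        "top_languages": languages.most_common(3),
    }


# Hand-made sample records (hypothetical, not real measurements).
sample = {
    "org_a": [
        {"stargazers_count": 120, "forks_count": 30, "open_issues_count": 12, "language": "Go"},
        {"stargazers_count": 40, "forks_count": 5, "open_issues_count": 3, "language": "Python"},
    ],
    "org_b": [
        {"stargazers_count": 300, "forks_count": 80, "open_issues_count": 45, "language": "Go"},
    ],
}

for org, repos in sample.items():
    print(org, summarize_repos(repos))
```

A flat dictionary per organization like this loads easily into Power BI as a small table. Contributor counts are omitted because they need a separate API request per repository.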

This project is part of:

Hack Week 25

Activity

Comments

2 months ago by terezacerna

This project provides a comprehensive, data-driven assessment of SUSE’s presence, perception, and alignment within the global developer and open-source landscape. By integrating insights from the Stack Overflow Developer Survey, Google Trends, GitHub activity, GitHub issue sentiment, and Reddit discussions, the analysis offers a multi-layered view of how SUSE compares with key competitors, particularly Red Hat, and how the broader technical community engages with SUSE technologies. Note that the GitHub Issues and Reddit data were limited to approximately one month, which constrains the depth of historical trend analysis, though it still provides valuable directional insights into current community sentiment and interaction patterns.

The Developer Survey analysis reveals how Linux users differ from non-Linux users in terms of platform choices, programming languages, professional roles, and technology preferences. This highlights the size and characteristics of SUSE’s core audience, while also identifying the tools and languages most relevant to SUSE’s ecosystem. Analyses of DevOps, SREs, SysAdmins, and cloud-native roles further quantify SUSE’s addressable market and assess alignment with industry trends.

The Google Trends analysis adds an external perspective on brand interest, showing how public attention toward SUSE and Red Hat evolves over time and across regions. Related search terms provide insight into how each brand is associated with specific technologies and topics, highlighting opportunities for increased visibility or repositioning.

The GitHub repository overview offers a look at SUSE’s open-source footprint relative to Red Hat, focusing on repository activity, stars, forks, issues, and programming language diversity. Trends in repository creation and updates illustrate innovation momentum and community engagement, while language usage highlights SUSE’s technical direction and ecosystem breadth.

The SUSE GitHub Issues analysis deepens understanding of community interaction by examining issue volume, resolution speed, contributor patterns, and sentiment expressed in issue titles, bodies, and comments. Although based on one month of data, this analysis provides meaningful insights into developer satisfaction, recurring challenges, and project health. Categorization of issues helps identify potential areas for product improvement or documentation enhancement.
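Resolution speed of the kind described above could be computed from the `created_at` and `closed_at` timestamps that the GitHub issues API returns. The following is a minimal sketch, assuming the issues were already downloaded as a list of dicts; the function names and sample records are illustrative and not taken from the report.

```python
from datetime import datetime
from statistics import median
from typing import List, Optional


def parse_ts(ts: str) -> datetime:
    """Parse a GitHub-style UTC timestamp such as 2025-11-03T09:15:00Z."""
    return datetime.fromisoformat(ts.replace("Z", "+00:00"))


def median_hours_to_close(issues: List[dict]) -> Optional[float]:
    """Median time from creation to close, in hours, over closed issues only."""
    durations = [
        (parse_ts(i["closed_at"]) - parse_ts(i["created_at"])).total_seconds() / 3600
        for i in issues
        if i.get("closed_at")  # open issues have closed_at set to None
    ]
    return median(durations) if durations else None


# Hypothetical sample: two closed issues and one still open.
issues = [
    {"created_at": "2025-11-01T00:00:00Z", "closed_at": "2025-11-01T12:00:00Z"},
    {"created_at": "2025-11-02T00:00:00Z", "closed_at": "2025-11-04T00:00:00Z"},
    {"created_at": "2025-11-05T00:00:00Z", "closed_at": None},
]
print(median_hours_to_close(issues))
```

With only one month of data, issues that are still open are excluded, so a figure like this likely understates the true resolution time.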

The Reddit analysis extends sentiment exploration into broader public discussions, comparing SUSE-related and Red Hat–related posts and comments. Despite the one-month limitation, sentiment trends, discussion categories, and key influencers reveal how SUSE is perceived in informal technical communities and what factors drive positive or negative sentiment.

Together, these components create a holistic view of SUSE’s position across developer preferences, market interest, community engagement, and open-source activity. The combined insights support strategic decision-making for product development, community outreach, marketing, and competitive positioning—helping SUSE understand where it stands today and where the strongest opportunities exist within the modern infrastructure and cloud-native ecosystem.

2 months ago by terezacerna

Demo View: LINK

Full Power BI Report: LINK (additional access may be required)

2 months ago by terezacerna

Obstacles and limitations I have encountered:

I was limited in the number of items I could retrieve through the GitHub and Reddit APIs, and I could only get the last month of data from both platforms.

Since I last explored Power BI's AI features, Microsoft has moved cognitive AI analysis, such as sentiment analysis and categorization, behind separate licensing, which we don't have available at SUSE. I therefore had to change my plan and use Gemini outside of Power BI for these analyses.

Analyzing the Stack Overflow survey could and should take much longer in order to build a real profile of a SUSE community user. I would, however, need help from someone who knows SUSE products well technically and ideally has some marketing knowledge too.

The next steps of this analysis could be to analyze when a community user becomes a paying customer.

Similar Projects

Backporting patches using LLM by jankara

Description

Backporting Linux kernel fixes (either for CVE issues or as part of the general git-fixes workflow) is boring and mostly mechanical work (dealing with changes in context, renamed variables, new helper functions, etc.). The idea of this project is to explore using an LLM to backport Linux kernel commits to SUSE kernels.

Goals

- Create a safe environment allowing the LLM to run and backport patches without exposing the whole filesystem to it (for privacy and security reasons).

- Write a prompt that will guide the LLM through the backporting process. Fine-tune it based on experimental results.

- Explore the success rate of LLMs when backporting various patches.
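One way to keep the agent away from the rest of the filesystem is to bind-mount only the kernel tree into a container. The sketch below builds such a `docker run` command line; the image name `backporter:latest` and the `/work` mount point are hypothetical, and the container in the project's repository may be set up differently.

```python
import os
import shlex
from typing import List


def sandbox_command(kernel_tree: str, image: str = "backporter:latest") -> List[str]:
    """Build a docker command that exposes only the kernel tree to the container."""
    tree = os.path.abspath(kernel_tree)
    return [
        "docker", "run", "--rm", "-it",
        # Only this directory is visible inside the container, at /work.
        "--volume", f"{tree}:/work",
        "--workdir", "/work",
        image,  # hypothetical image name
    ]


cmd = sandbox_command("/srv/linux")
print(shlex.join(cmd))
```

Networking is left enabled in this sketch because a CLI agent has to reach its model API. The command is returned as a list so it can be passed to `subprocess.run` without shell quoting issues.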

Resources

- Docker

- Gemini CLI

Repository

The current version of the container, with some instructions for use, is at: https://gitlab.suse.de/jankara/gemini-cli-backporter

Enable more features in mcp-server-uyuni by j_renner

Description

I would like to contribute to mcp-server-uyuni, the MCP server for Uyuni / Multi-Linux Manager, by exposing additional features as tools. There are many relevant features to be found throughout the API, for example:

- System operations and information

- System groups

- Maintenance windows

- Ansible

- Reporting

- ...

At the end of the week I managed to enable basic system group operations:

- List all system groups visible to the user

- Create new system groups

- List systems assigned to a group

- Add and remove systems from groups

Goals

- Set up test environment locally with the MCP server and client + a recent MLM server [DONE]

- Identify features and use cases offering a benefit with limited effort required for enablement [DONE]

- Create a PR to the repo [DONE]

Resources

Kubernetes-Based ML Lifecycle Automation by lmiranda

Description

This project aims to build a complete end-to-end Machine Learning pipeline running entirely on Kubernetes, using Go and containerized ML components.

The pipeline will automate the lifecycle of a machine learning model, including:

- Data ingestion/collection

- Model training as a Kubernetes Job

- Model artifact storage in an S3-compatible registry (e.g. Minio)

- A Go-based deployment controller that automatically deploys new model versions to Kubernetes using Rancher

- A lightweight inference service that loads and serves the latest model

- Monitoring of model performance and service health through Prometheus/Grafana

The outcome is a working prototype of an MLOps workflow that demonstrates how AI workloads can be trained, versioned, deployed, and monitored using the Kubernetes ecosystem.

Goals

By the end of Hack Week, the project should:

Produce a fully functional ML pipeline running on Kubernetes with:

- Data collection job

- Training job container

- Storage and versioning of trained models

- Automated deployment of new model versions

- Model inference API service

- Basic monitoring dashboards

Showcase a Go-based deployment automation component, which scans the model registry and automatically generates & applies Kubernetes manifests for new model versions.

Enable continuous improvement by making the system modular and extensible (e.g., additional models, metrics, autoscaling, or drift detection can be added later).

Prepare a short demo explaining the end-to-end process and how new models flow through the system.
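The deployment automation described in the goals (scan the model registry, then generate a manifest for the newest version) can be illustrated with a short sketch. The project plans this component in Go; Python is used here only to show the logic. The `v1.2.3` tag scheme, the `MODEL_VERSION` environment variable, and the image name are assumptions, not details from the project.

```python
import json
from typing import List


def latest_version(versions: List[str]) -> str:
    """Pick the newest tag, comparing numerically so v1.10.0 beats v1.9.0."""
    return max(versions, key=lambda v: tuple(int(p) for p in v.lstrip("v").split(".")))


def render_deployment(model: str, version: str, image: str) -> dict:
    """Build a minimal apps/v1 Deployment for the inference service."""
    name = f"{model}-inference"
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": labels},
        "spec": {
            "replicas": 1,
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [{
                        "name": "inference",
                        "image": image,
                        # Hypothetical variable telling the service which model to load.
                        "env": [{"name": "MODEL_VERSION", "value": version}],
                    }]
                },
            },
        },
    }


# Hypothetical registry listing and image name.
version = latest_version(["v1.9.0", "v1.10.0", "v1.2.3"])
manifest = render_deployment("demo-model", version, "registry.example/inference:latest")
print(json.dumps(manifest, indent=2))
```

A real controller would list object keys in the S3-compatible bucket instead of using a hard-coded list, and would apply the manifest through the Kubernetes API rather than print it.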

Resources

Updates

- Training pipeline and datasets

- Inference Service py

MCP Server for SCC by digitaltomm

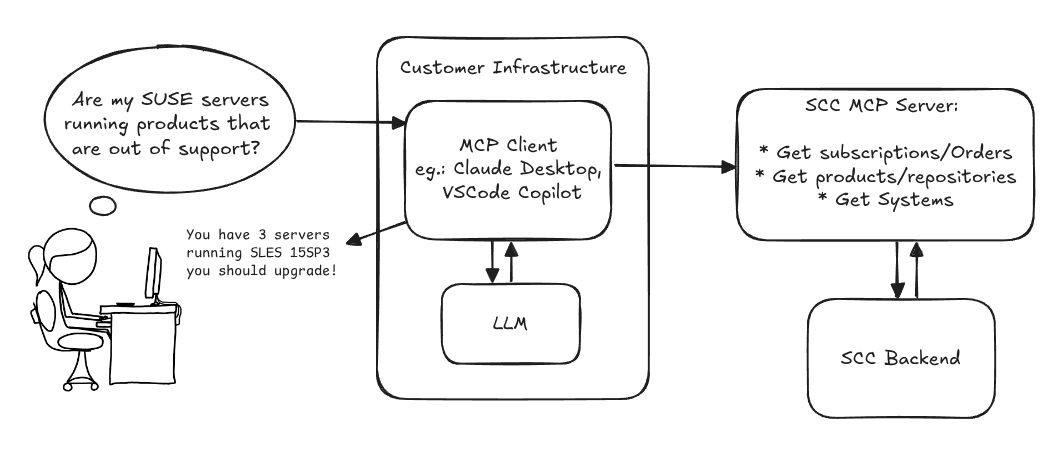

Description

Provide an MCP Server implementation for customers to access data on scc.suse.com via MCP protocol. The core benefit of this MCP interface is that it has direct (read) access to customer data in SCC, so the AI agent gets enhanced knowledge about individual customer data, like subscriptions, orders and registered systems.

Architecture

Goals

We want to demonstrate a proof of concept for connecting to the SCC MCP server with any AI agent, for example gemini-cli or codex, enabling users to ask questions about their SCC inventory.

For this Hack Week, we aim for users to get proper responses to these example questions:

- Which of my currently active systems are running products that are out of support?

- Do I have ready to use registration codes for SLES?

- What are the latest 5 released patches for SLES 15 SP6? Output as a list with release date, patch name, affected package names and fixed CVEs.

- Which versions of kernel-default are available on SLES 15 SP6?

Technical Notes

Similar to the organization APIs, this can expose customers' data about their subscriptions, orders, systems and products. Authentication should be done with organization credentials, similar to what needs to be provided to RMT/MLM. Customers can connect to the SCC MCP server from their own MCP-compatible client and Large Language Model (LLM), so no third party is involved.

Milestones

- [x] Basic MCP API setup

- MCP endpoints:

- [x] Products / Repositories

- [x] Subscriptions / Orders

- [x] Systems

- [x] Packages

- [x] Document usage with Gemini CLI, Codex

Resources

Gemini CLI setup:

~/.gemini/settings.json:

Try out Neovim Plugins supporting AI Providers by enavarro_suse

Description

Experiment with several Neovim plugins that integrate AI model providers such as Gemini and Ollama.

Goals

Evaluate how these plugins enhance the development workflow, how they differ in capabilities, and how smoothly they integrate into Neovim for day-to-day coding tasks.

Resources

- Neovim 0.11.5

- AI-enabled Neovim plugins:

- avante.nvim: https://github.com/yetone/avante.nvim

- Gp.nvim: https://github.com/Robitx/gp.nvim

- parrot.nvim: https://github.com/frankroeder/parrot.nvim

- gemini.nvim: https://dotfyle.com/plugins/kiddos/gemini.nvim

- ...

- Accounts or API keys for AI model providers.

- Local model serving setup (e.g., Ollama)

- Test projects or codebases for practical evaluation:

- OBS: https://build.opensuse.org/

- OBS blog and landing page: https://openbuildservice.org/

- ...

The Agentic Rancher Experiment: Do Androids Dream of Electric Cattle? by moio

Rancher is a beast of a codebase. Let's investigate if the new 2025 generation of GitHub Autonomous Coding Agents and Copilot Workspaces can actually tame it.

The Plan

Create a sandbox GitHub Organization, clone in key Rancher repositories, and let the AI loose to see if it can handle real-world enterprise OSS maintenance - or if it just hallucinates new breeds of Kubernetes resources!

Specifically, throw "Agentic Coders" some typical tasks in a complex, long-lived open-source project, such as:

❥ The Grunt Work: generate missing GoDocs, unit tests, and refactorings. Rebase PRs.

❥ The Complex Stuff: fix actual (historical) bugs and feature requests to see if they can traverse the complexity without (too much) human hand-holding.

❥ Hunting Down Gaps: find areas lacking in docs, areas of improvement in code, dependency bumps, and so on.

If time allows, also experiment with Model Context Protocol (MCP) to give agents context on our specific build pipelines and CI/CD logs.

Why?

We know AI can write "Hello World." and also moderately complex programs from a green field. But can it rebase a 3-month-old PR with conflicts in rancher/rancher? I want to find the breaking point of current AI agents to determine if and how they can help us to reduce our technical debt, work faster and better. At the same time, find out about pitfalls and shortcomings.

The CONCLUSION!!!

A State of the Union document was compiled to summarize lessons learned this week. For more gory details, just read on the diary below!

issuefs: FUSE filesystem representing issues (e.g. JIRA) for the use with AI agents code-assistants by llansky3

Description

Creating a FUSE filesystem (issuefs) that mounts issues from various ticketing systems (GitHub, Jira, Bugzilla, Redmine) as files on your local file system.

And why is this a good idea?

- Users can use their favorite command-line tools to view and search tickets from various sources.

- Users can use the AI agent capabilities of their favorite IDE or CLI to ask questions about the issues, project, or functionality, with relevant tickets provided as context without extra work.

- Users can use it while developing new features, letting the AI agent jump-start the solution. issuefs gives the AI agent the context about the bug or requested feature (the agent just reads a few more files). There is no need to copy and paste issues into the prompt or to use extra MCP tools to access them. Those options remain available, but this approach is different on purpose.
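To make the idea of issues as files concrete, here is a small sketch of how a ticket might be mapped to a path and rendered as plain text. The directory layout, the file format, and the field names are hypothetical and not taken from the issuefs prototype; the FUSE layer itself is left out.

```python
def issue_path(source: str, key: str) -> str:
    """Map a ticket to a relative path such as jira/PROJ-123.txt."""
    safe = key.replace("/", "_")  # keys like owner/repo#12 must not create subdirectories
    return f"{source}/{safe}.txt"


def render_issue(issue: dict) -> str:
    """Render a ticket as plain text that command-line tools and agents can read."""
    lines = [
        f"Title: {issue['title']}",
        f"Status: {issue['status']}",
        "",
        issue.get("body", ""),
    ]
    for comment in issue.get("comments", []):
        lines += ["", f"--- comment by {comment['author']} ---", comment["text"]]
    return "\n".join(lines) + "\n"


# Hypothetical ticket used only to show the output format.
ticket = {
    "title": "Crash on mount",
    "status": "open",
    "body": "Mounting fails when the token is missing.",
    "comments": [{"author": "alice", "text": "Can reproduce."}],
}
print(issue_path("jira", "PROJ-123"))
print(render_issue(ticket))
```

Plain text with a predictable path keeps `grep`, `cat`, and an agent's file-reading tools all working on the same data without any ticket-system client.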

Goals

- Add GitHub issue support

- Prove the concept by applying the approach to itself, using GitHub issues to track and develop new features

- Add support for Bugzilla and Redmine, using this approach while doing so. Record a video of it.

- Clean up and test the implementation and create some documentation

- Create a blog post about this approach

Resources

There is a prototype implementation here. This currently sort of works with JIRA only.