Description

Large language models like ChatGPT have demonstrated remarkable capabilities across a variety of applications. However, their potential for enhancing the Linux development and user ecosystem remains largely unexplored. This project seeks to bridge that gap by researching practical applications of LLMs to improve workflows in areas such as backporting, packaging, log analysis, system migration, and more. By identifying patterns that LLMs can leverage, we aim to uncover new efficiencies and automation strategies that can benefit developers, maintainers, and end users alike.

Goals

- Evaluate Existing LLM Capabilities: Research and document the current state of LLM usage in open-source and Linux development projects, noting successes and limitations.

- Prototype Tools and Scripts: Develop proof-of-concept scripts or tools that leverage LLMs to perform specific tasks like automated log analysis, assisting with backporting patches, or generating packaging metadata.

- Assess Performance and Reliability: Test the tools' effectiveness on real-world Linux data and analyze their accuracy, speed, and reliability.

- Identify Best Use Cases: Pinpoint which tasks are most suitable for LLM support, distinguishing between high-impact and impractical applications.

- Document Findings and Recommendations: Summarize results with clear documentation and suggest next steps for potential integration or further development.

Resources

- Local LLM Implementations: Access to locally hosted LLMs such as LLaMA, GPT-J, or similar open-source models that can be run and fine-tuned on local hardware.

- Computing Resources: Workstations or servers capable of running LLMs locally, equipped with sufficient GPU power for training and inference.

- Sample Data: Logs, source code, patches, and packaging data from openSUSE or SUSE repositories for model training and testing.

- Public LLMs for Benchmarking: Access to APIs from platforms like OpenAI or Hugging Face for comparative testing and performance assessment.

- Existing NLP Tools: Libraries such as spaCy, Hugging Face Transformers, and PyTorch for building and interacting with local LLMs.

- Technical Documentation: Tutorials and resources focused on setting up and optimizing local LLMs for tasks relevant to Linux development.

- Collaboration: Engagement with community experts and teams experienced in AI and Linux for feedback and joint exploration.

Looking for hackers with the skills:

This project is part of:

Hack Week 24

Activity

Comments

-

-

about 1 year ago by jiriwiesner | Reply

I would like to ask an LLM instance about the inner workings on the Linux kernel code. It is a common task of mine to look for a bug in a subsystem or a layer that can easily have tens of thousands of lines of code (e.g. bsc 1216813). I know having an understanding of the Linux code is what we do as developers but my understanding and knowledge is always limited because I simply do not have the time to read all of the code possibly involved in an issue. If the LLM was trained to process the source code of a specific version of Linux a developer could then ask involved questions about the code using the terms found in the code base. It should basically be something that allows a developer find the interesting parts of the code better than when using just grep.

-

about 1 year ago by anicka | Reply

Actually, it looks like that off-the-shelf ChatGPT 4 can be already quite helpful in such tasks.

But training something like code llama on our kernels is something I indeed want to look into next time because if there is any way how to leverage LLMs in our bugfixing or backporting, this is it.

-

Similar Projects

Liz - Prompt autocomplete by ftorchia

Description

Liz is the Rancher AI assistant for cluster operations.

Goals

We want to help users when sending new messages to Liz, by adding an autocomplete feature to complete their requests based on the context.

Example:

- User prompt: "Can you show me the list of p"

- Autocomplete suggestion: "Can you show me the list of p...od in local cluster?"

Example:

- User prompt: "Show me the logs of #rancher-"

- Chat console: It shows a drop-down widget, next to the # character, with the list of available pod names starting with "rancher-".

Technical Overview

- The AI agent should expose a new ws/autocomplete endpoint to proxy autocomplete messages to the LLM.

- The UI extension should be able to display prompt suggestions and allow users to apply the autocomplete to the Prompt via keyboard shortcuts.

Resources

MCP Server for SCC by digitaltomm

Description

Provide an MCP Server implementation for customers to access data on scc.suse.com via MCP protocol. The core benefit of this MCP interface is that it has direct (read) access to customer data in SCC, so the AI agent gets enhanced knowledge about individual customer data, like subscriptions, orders and registered systems.

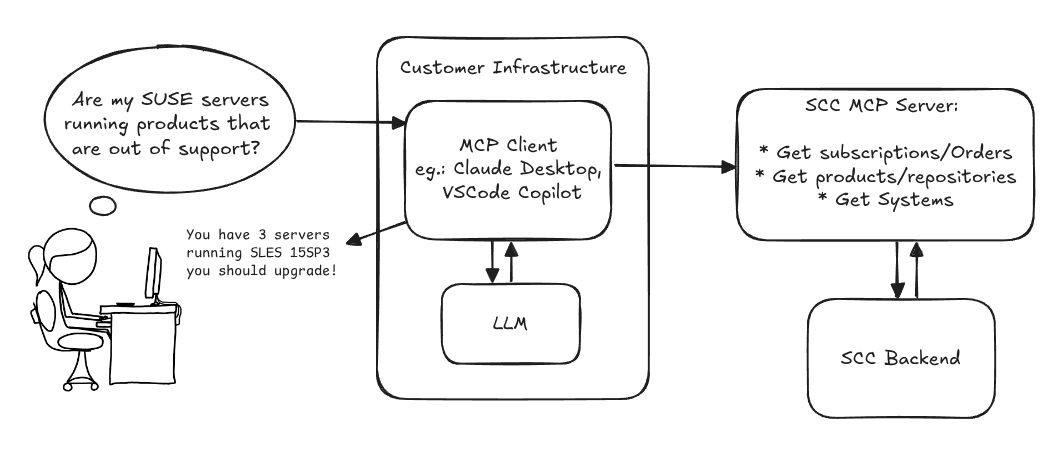

Architecture

Goals

We want to demonstrate a proof of concept to connect to the SCC MCP server with any AI agent, for example gemini-cli or codex. Enabling the user to ask questions regarding their SCC inventory.

For this Hackweek, we target that users get proper responses to these example questions:

- Which of my currently active systems are running products that are out of support?

- Do I have ready to use registration codes for SLES?

- What are the latest 5 released patches for SLES 15 SP6? Output as a list with release date, patch name, affected package names and fixed CVEs.

- Which versions of kernel-default are available on SLES 15 SP6?

Technical Notes

Similar to the organization APIs, this can expose to customers data about their subscriptions, orders, systems and products. Authentication should be done by organization credentials, similar to what needs to be provided to RMT/MLM. Customers can connect to the SCC MCP server from their own MCP-compatible client and Large Language Model (LLM), so no third party is involved.

Milestones

[x] Basic MCP API setup MCP endpoints [x] Products / Repositories [x] Subscriptions / Orders [x] Systems [x] Packages [x] Document usage with Gemini CLI, Codex

Resources

Gemini CLI setup:

~/.gemini/settings.json:

Flaky Tests AI Finder for Uyuni and MLM Test Suites by oscar-barrios

Description

Our current Grafana dashboards provide a great overview of test suite health, including a panel for "Top failed tests." However, identifying which of these failures are due to legitimate bugs versus intermittent "flaky tests" is a manual, time-consuming process. These flaky tests erode trust in our test suites and slow down development.

This project aims to build a simple but powerful Python script that automates flaky test detection. The script will directly query our Prometheus instance for the historical data of each failed test, using the jenkins_build_test_case_failure_age metric. It will then format this data and send it to the Gemini API with a carefully crafted prompt, asking it to identify which tests show a flaky pattern.

The final output will be a clean JSON list of the most probable flaky tests, which can then be used to populate a new "Top Flaky Tests" panel in our existing Grafana test suite dashboard.

Goals

By the end of Hack Week, we aim to have a single, working Python script that:

- Connects to Prometheus and executes a query to fetch detailed test failure history.

- Processes the raw data into a format suitable for the Gemini API.

- Successfully calls the Gemini API with the data and a clear prompt.

- Parses the AI's response to extract a simple list of flaky tests.

- Saves the list to a JSON file that can be displayed in Grafana.

- New panel in our Dashboard listing the Flaky tests

Resources

- Jenkins Prometheus Exporter: https://github.com/uyuni-project/jenkins-exporter/

- Data Source: Our internal Prometheus server.

- Key Metric:

jenkins_build_test_case_failure_age{jobname, buildid, suite, case, status, failedsince}. - Existing Query for Reference:

count by (suite) (max_over_time(jenkins_build_test_case_failure_age{status=~"FAILED|REGRESSION", jobname="$jobname"}[$__range])). - AI Model: The Google Gemini API.

- Example about how to interact with Gemini API: https://github.com/srbarrios/FailTale/

- Visualization: Our internal Grafana Dashboard.

- Internal IaC: https://gitlab.suse.de/galaxy/infrastructure/-/tree/master/srv/salt/monitoring

Outcome

- Jenkins Flaky Test Detector: https://github.com/srbarrios/jenkins-flaky-tests-detector and its container

- IaC on MLM Team: https://gitlab.suse.de/galaxy/infrastructure/-/tree/master/srv/salt/monitoring/jenkinsflakytestsdetector?reftype=heads, https://gitlab.suse.de/galaxy/infrastructure/-/blob/master/srv/salt/monitoring/grafana/dashboards/flaky-tests.json?ref_type=heads, and others.

- Grafana Dashboard: https://grafana.mgr.suse.de/d/flaky-tests/flaky-tests-detection @ @ text

Exploring Modern AI Trends and Kubernetes-Based AI Infrastructure by jluo

Description

Build a solid understanding of the current landscape of Artificial Intelligence and how modern cloud-native technologies—especially Kubernetes—support AI workloads.

Goals

Use Gemini Learning Mode to guide the exploration, surface relevant concepts, and structure the learning journey:

- Gain insight into the latest AI trends, tools, and architectural concepts.

- Understand how Kubernetes and related cloud-native technologies are used in the AI ecosystem (model training, deployment, orchestration, MLOps).

Resources

Red Hat AI Topic Articles

- https://www.redhat.com/en/topics/ai

Kubeflow Documentation

- https://www.kubeflow.org/docs/

Q4 2025 CNCF Technology Landscape Radar report:

- https://www.cncf.io/announcements/2025/11/11/cncf-and-slashdata-report-finds-leading-ai-tools-gaining-adoption-in-cloud-native-ecosystems/

- https://www.cncf.io/wp-content/uploads/2025/11/cncfreporttechradar_111025a.pdf

Agent-to-Agent (A2A) Protocol

- https://developers.googleblog.com/en/a2a-a-new-era-of-agent-interoperability/

MCP Trace Suite by r1chard-lyu

Description

This project plans to create an MCP Trace Suite, a system that consolidates commonly used Linux debugging tools such as bpftrace, perf, and ftrace.

The suite is implemented as an MCP Server. This architecture allows an AI agent to leverage the server to diagnose Linux issues and perform targeted system debugging by remotely executing and retrieving tracing data from these powerful tools.

- Repo: https://github.com/r1chard-lyu/systracesuite

- Demo: Slides

Goals

Build an MCP Server that can integrate various Linux debugging and tracing tools, including bpftrace, perf, ftrace, strace, and others, with support for future expansion of additional tools.

Perform testing by intentionally creating bugs or issues that impact system performance, allowing an AI agent to analyze the root cause and identify the underlying problem.

Resources

- Gemini CLI: https://geminicli.com/

- eBPF: https://ebpf.io/

- bpftrace: https://github.com/bpftrace/bpftrace/

- perf: https://perfwiki.github.io/main/

- ftrace: https://github.com/r1chard-lyu/tracium/