a project by PSuarezHernandez

Description

Using Ollama you can easily run different LLMs on your local computer. This project is about exploring Ollama, testing different LLMs, and trying to fine-tune them. It also explores potential ways of integrating them with Uyuni.

Goals

- Explore Ollama

- Test different models

- Fine tuning

- Explore possible integration in Uyuni

Resources

- https://ollama.com/

- https://huggingface.co/

- https://apeatling.com/articles/part-2-building-your-training-data-for-fine-tuning/

This project is part of:

Hack Week 24

Activity

Comments

about 1 year ago by PSuarezHernandez

Some conclusions after Hackweek 24:

- ollama + open-webui is a nice combo for running LLMs locally (also tried Local AI).

- open-webui allows you to add custom knowledge bases (collections) to feed models.

- Uyuni documentation and Salt documentation can be used in these collections to give models that knowledge.

- Tailored documentation works better for feeding models.

- Tried different models: llama3.1, mistral, mistral-nemo, gemma2, phi3, ...

- Getting promising results, particularly with mistral-nemo, but also getting model hallucinations; model parameters can be adjusted to reduce them.
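As a rough illustration of adjusting model parameters, here is a minimal sketch that builds a request for Ollama's local REST endpoint (`/api/generate`) with a lower sampling temperature. The values for `temperature` and `top_p` are assumptions chosen for illustration, not the settings used during Hack Week, and the right values depend on the model.

```python
import json
import urllib.request

# Default address of a locally running Ollama server.
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_request(prompt, model="mistral-nemo", temperature=0.2, top_p=0.9):
    """Build the JSON body for a non-streaming Ollama generate call.

    The temperature and top_p defaults are illustrative assumptions;
    lower values generally make answers more deterministic.
    """
    return {
        "model": model,
        "prompt": prompt,
        "stream": False,
        "options": {"temperature": temperature, "top_p": top_p},
    }


def ask(prompt, **kwargs):
    """Send the prompt to the local Ollama server and return the answer text."""
    body = json.dumps(build_request(prompt, **kwargs)).encode("utf-8")
    req = urllib.request.Request(
        OLLAMA_URL, data=body, headers={"Content-Type": "application/json"}
    )
    with urllib.request.urlopen(req) as resp:
        return json.loads(resp.read())["response"]
```

With Ollama running and the model pulled, `ask("How do I onboard a Salt minion in Uyuni?")` would return the generated answer as a string.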

Takeaways

- Small models run fairly well with CPU only.

- Building an expert assistant on Uyuni, with extensive knowledge based on the documentation, might be something to keep exploring.

Next steps

- Make the model understand the Uyuni API, so it is able to translate user requests into actual calls to the Uyuni API.

6 months ago by rudrakshkarpe

Hi @PSuarezHernandez ,

will this project be part of Hackweek 2025?

Similar Projects

Set Uyuni to manage edge clusters at scale by RDiasMateus

Description

Prepare a PoC on how to use MLM to manage edge clusters. Those clusters are normally identical across locations, and we have a large number of them.

The goal is to produce a set of sets/best practices/scripts to help users manage this kind of setup.

Goals

step 1: Manual set-up

Goal: Have a running application in k3s and be able to update it using System Update Controller (SUC)

- Deploy Micro 6.2 machine

- Deploy k3s - single node (https://docs.k3s.io/quick-start)

- Build/find a simple web application (static page)

- Build/find a helmchart to deploy the application

- Deploy the application on the k3s cluster

- Install App updates through helm update

- Install OS updates using MLM

step 2: Automate day 1

Goal: Trigger the application deployment and update from MLM

- Salt states For application (with static data)

- Deploy the application helmchart, if not present

- install app updates through helmchart parameters

- Link it to GIT

- Define how to link the state to the machines (based in some pillar data? Using configuration channels by importing the state? Naming convention?)

- Use git update to trigger helmchart app update

- Recurrent state applying configuration channel?

step 3: Multi-node cluster

Goal: Use SUC to update a multi-node cluster.

- Create a multi-node cluster

- Deploy application

- call the helm update/install only on control plane?

- Install App updates through helm update

- Prepare a SUC for OS update (k3s also? How?)

- https://github.com/rancher/system-upgrade-controller

- https://documentation.suse.com/cloudnative/k3s/latest/en/upgrades/automated.html

- Update/deploy the SUC?

- Update/deploy the SUC CRD with the update procedure

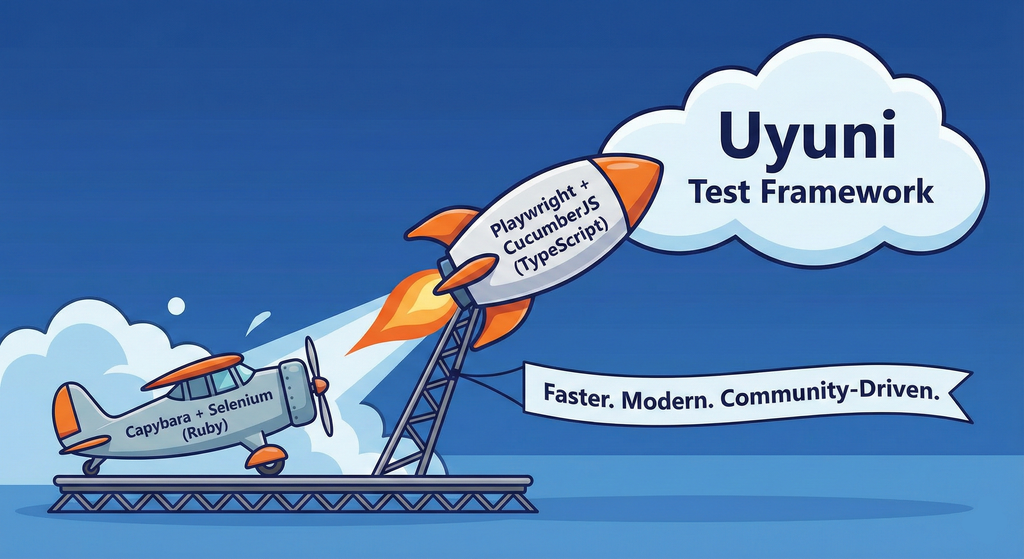

Move Uyuni Test Framework from Selenium to Playwright + AI by oscar-barrios

Description

This project aims to migrate the existing Uyuni Test Framework from Selenium to Playwright. The move will improve the stability, speed, and maintainability of our end-to-end tests by leveraging Playwright's modern features. We'll be rewriting the current Selenium code in Ruby to Playwright code in TypeScript, which includes updating the test framework runner, step definitions, and configurations. This is also necessary because we're moving from Cucumber Ruby to CucumberJS.

If you're still curious about the AI in the title, it was just a way to grab your attention. Thanks for your understanding.

Nah, let's be honest: AI helped a lot to vibe code a good part of the Ruby methods of the test framework, moving them to TypeScript, along with the migration from Capybara to Playwright. I've been using "Cline" as a plugin for the WebStorm IDE, with the Gemini API behind it.

Goals

- Migrate Core tests including Onboarding of clients

- Improve test reliability: Measure and confirm a significant reduction of flakiness.

- Implement a robust framework: Establish a well-structured and reusable Playwright test framework using CucumberJS

Resources

- Existing Uyuni Test Framework (Cucumber Ruby + Capybara + Selenium)

- My Template for CucumberJS + Playwright in TypeScript

- Started Hackweek Project

Testing and adding GNU/Linux distributions on Uyuni by juliogonzalezgil

Join the Gitter channel! https://gitter.im/uyuni-project/hackweek

Uyuni is a configuration and infrastructure management tool that saves you time and headaches when you have to manage and update tens, hundreds or even thousands of machines. It also manages configuration, can run audits, build image containers, monitor and much more!

Currently there are a few distributions that are completely untested on Uyuni or SUSE Manager (AFAIK), or that have not been tested for a long time. It would be interesting to know how hard it is to work with them and, if possible, to fix whatever is broken.

For newcomers, the easiest distributions are those based on DEB or RPM packages. Distributions with other package formats are doable, but will require adapting the Python and Java code to be able to sync and analyze such packages (and if salt does not support those packages, it will need changes as well). So if you want a distribution with other packages, make sure you are comfortable handling such changes.

No developer experience? No worries! We have had non-developer contributors in the past, and we are ready to help as long as you are willing to learn. If you don't want to code at all, you can also help us prepare the documentation after someone else has the initial code ready, or you could help with testing :-)

The idea is to test Salt (including bootstrapping with the bootstrap script) and Salt-ssh clients.

To consider that a distribution has basic support, we should cover at least (points 3-6 are to be tested for both salt minions and salt ssh minions):

- Reposync (this will require using spacewalk-common-channels and adding channels to the .ini file)

- Onboarding (salt minion from UI, salt minion from bootstrap script, and salt-ssh minion) (this will probably require adding OS to the bootstrap repository creator)

- Package management (install, remove, update...)

- Patching

- Applying any basic salt state (including a formula)

- Salt remote commands

- Bonus point: Java part for product identification, and monitoring enablement

- Bonus point: sumaform enablement (https://github.com/uyuni-project/sumaform)

- Bonus point: Documentation (https://github.com/uyuni-project/uyuni-docs)

- Bonus point: testsuite enablement (https://github.com/uyuni-project/uyuni/tree/master/testsuite)

If something is breaking: we can try to fix it, but the main idea is to research how well supported it is right now. Beyond that it's up to each project member how much to hack :-)

- If you don't have knowledge about some of the steps: ask the team

- If you still don't know what to do: switch to another distribution and keep testing.

This card is for EVERYONE, not just developers. Seriously! We had people from other teams helping that were not developers, and added support for Debian and new SUSE Linux Enterprise and openSUSE Leap versions :-)

In progress/done for Hack Week 25

Guide

We started writing a Guide: Adding a new client GNU Linux distribution to Uyuni at https://github.com/uyuni-project/uyuni/wiki/Guide:-Adding-a-new-client-GNU-Linux-distribution-to-Uyuni, to make things easier for everyone, especially those not too familiar with Uyuni or not technical.

openSUSE Leap 16.0

The distribution we all love!

https://en.opensuse.org/openSUSE:Roadmap#DRAFTScheduleforLeap16.0

Current Status: We started last year, and it's complete now for Hack Week 25! :-D

- [W] Reposync (this will require using spacewalk-common-channels and adding channels to the .ini file) NOTE: Done, client tools for SLMicro6 are used, as those for SLE16.0/openSUSE Leap 16.0 are not available yet

- [W] Onboarding (salt minion from UI, salt minion from bootstrap script, and salt-ssh minion) (this will probably require adding OS to the bootstrap repository creator)

- [W] Package management (install, remove, update...). Works, even reboot requirement detection

Enhance setup wizard for Uyuni by PSuarezHernandez

Description

This project aims to enhance the initial setup of Uyuni after its installation, so it's easier for a user to start using it.

Uyuni currently uses "uyuni-tools" (mgradm) as the installation entrypoint, to trigger the installation of Uyuni in the given host, but does not really perform an initial setup, for instance:

- user creation

- adding products / channels

- generating bootstrap repos

- create activation keys

- ...

Goals

- Provide initial setup wizard as part of mgradm uyuni installation

Resources

Enable more features in mcp-server-uyuni by j_renner

Description

I would like to contribute to mcp-server-uyuni (the MCP server for Uyuni / Multi-Linux Manager) by exposing additional features as tools. There are lots of relevant features to be found throughout the API, for example:

- System operations and infos

- System groups

- Maintenance windows

- Ansible

- Reporting

- ...

At the end of the week I managed to enable basic system group operations:

- List all system groups visible to the user

- Create new system groups

- List systems assigned to a group

- Add and remove systems from groups

Goals

- Set up test environment locally with the MCP server and client + a recent MLM server [DONE]

- Identify features and use cases offering a benefit with limited effort required for enablement [DONE]

- Create a PR to the repo [DONE]

Resources

issuefs: FUSE filesystem representing issues (e.g. JIRA) for the use with AI agents code-assistants by llansky3

Description

Creating a FUSE filesystem (issuefs) that mounts issues from various ticketing systems (Github, Jira, Bugzilla, Redmine) as files to your local file system.

Why is this a good idea?

- Users can use their favorite command line tools to view and search tickets from various sources.

- Users can use the AI agent capabilities of their favorite IDE or CLI to ask questions about the issues, project or functionality while providing relevant tickets as context without extra work.

- Users can use it during development of new features, letting the AI agent jump-start the solution. issuefs gives the AI agent the context (the agent just reads a few more files) about the bug or requested feature. There is no need to copy and paste issues into the user prompt or to use extra MCP tools to access them. You can still do that, but this approach is different on purpose.
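To make the "issues as files" idea concrete, here is a minimal sketch of how a ticket could be mapped to a path and to plain-text file content. The directory layout, the field names (`key`, `title`, `status`, `description`) and the `.txt` extension are all hypothetical and chosen only for illustration; the actual issuefs prototype may organise things differently, and the FUSE layer itself is left out.

```python
def issue_path(source, issue):
    """Map an issue to a relative path such as 'jira/PROJ-12.txt' (hypothetical layout)."""
    safe_key = issue["key"].replace("/", "_")  # keep the key usable as a file name
    return f"{source}/{safe_key}.txt"


def render_issue(issue):
    """Render an issue as plain text that grep or an AI agent can read."""
    lines = [
        f"Key: {issue['key']}",
        f"Title: {issue['title']}",
        f"Status: {issue['status']}",
        "",
        issue["description"],
    ]
    return "\n".join(lines) + "\n"


# Made-up example ticket, not taken from any real tracker.
example = {
    "key": "PROJ-12",
    "title": "Login page times out",
    "status": "Open",
    "description": "The login request hangs when the session store is down.",
}
```

A filesystem layer would then serve `render_issue(example)` as the content of `issue_path("jira", example)`, so tools like `grep` or an editor's AI agent see the ticket as an ordinary file.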

Goals

- Add Github issue support

- Prove the concept by applying the approach to itself, using Github issues for tracking and developing new features

- Add support for Bugzilla and Redmine, using this approach in the process. Record a video of it.

- Clean-up and test the implementation and create some documentation

- Create a blog post about this approach

Resources

There is a prototype implementation here. This currently sort of works with JIRA only.

Song Search with CLAP by gcolangiuli

Description

Contrastive Language-Audio Pretraining (CLAP) is an open-source library that enables the training of a neural network on both audio and text descriptions, making it possible to search for audio using a text input. Several pre-trained models for song search are already available on Hugging Face.

Goals

Evaluate how CLAP can be used for song searching and determine which types of queries yield the best results by developing a Minimum Viable Product (MVP) in Python. Based on the results of this MVP, future steps could include:

- Music Tagging;

- Free text search;

- Integration with an LLM (for example, with MCP or the OpenAI API) for music suggestions based on your own library.

The code for this project will be entirely written using AI to better explore and demonstrate AI capabilities.

Result

In this MVP we implemented:

- Async Song Analysis with Clap model

- Free Text Search of the songs

- Similar song search based on vector representation

- Containerised version with web interface
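The similar-song search over vector representations can be sketched as a nearest-neighbour lookup by cosine similarity. This is only an illustration of the idea, assuming each song already has an embedding vector; the three-dimensional vectors and song names below are made up, and the MVP's actual storage and search code may differ.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def most_similar(query, library, top_k=3):
    """Return the top_k (title, score) pairs, best match first."""
    scored = [(title, cosine_similarity(query, vec)) for title, vec in library.items()]
    scored.sort(key=lambda item: item[1], reverse=True)
    return scored[:top_k]


# Toy embeddings; real CLAP embeddings have far more dimensions.
library = {
    "song_a": [1.0, 0.0, 0.0],
    "song_b": [0.9, 0.1, 0.0],
    "song_c": [0.0, 1.0, 0.0],
}
```

Because a model of this kind places text and audio in the same embedding space, the same lookup would serve free-text search: the query vector would simply come from the text encoder instead of from another song.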

We also documented what went well and what can be improved in the use of AI.

You can have a look at the result here:

Future implementation can be related to performance improvement and stability of the analysis.

References

- CLAP: The main model being researched;

- huggingface: Pre-trained models for CLAP;

- Free Music Archive: Creative Commons songs that can be used for testing;

Creating test suite using LLM on existing codebase of a solar router by fcrozat

Description

Two years ago, I evaluated solar routers as part of hackweek24. I have assembled one and it is running almost smoothly.

However, its code quality is not perfect and the codebase doesn't have any test cases (which is tricky, since it is embedded code and relies on getting external data to react).

Before improving the code itself, a test suite should be created to ensure code additions don't cause regressions.

Goals

Create a test suite that allows testing the solar router code in a virtual environment, using an LLM to help create it.

If successful, try to improve the codebase itself by having it reviewed by an LLM.

Resources

Self-Scaling LLM Infrastructure Powered by Rancher by ademicev0

Description

The Problem

Running LLMs can get expensive and complex pretty quickly.

Today there are typically two choices:

- Use cloud APIs like OpenAI or Anthropic. Easy to start with, but costs add up at scale.

- Self-host everything - set up Kubernetes, figure out GPU scheduling, handle scaling, manage model serving... it's a lot of work.

What if there was a middle ground?

What if infrastructure scaled itself instead of making you scale it?

Can we use existing Rancher capabilities like CAPI, autoscaling, and GitOps to make this simpler instead of building everything from scratch?

Project Repository: github.com/alexander-demicev/llmserverless

What This Project Does

A key feature is hybrid deployment: requests can be routed based on complexity or privacy needs. Simple or low-sensitivity queries can use public APIs (like OpenAI), while complex or private requests are handled in-house on local infrastructure. This flexibility allows balancing cost, privacy, and performance - using cloud for routine tasks and on-premises resources for sensitive or demanding workloads.

A complete, self-scaling LLM infrastructure that:

- Scales to zero when idle (no idle costs)

- Scales up automatically when requests come in

- Adds more nodes when needed, removes them when demand drops

- Runs on any infrastructure - laptop, bare metal, or cloud

Think of it as "serverless for LLMs" - focus on building, the infrastructure handles itself.

How It Works

A combination of open source tools working together:

Flow:

- Users interact with OpenWebUI (chat interface)

- Requests go to LiteLLM Gateway

- LiteLLM routes requests to:

- Ollama (Knative) for local model inference (auto-scales pods)

- Or cloud APIs for fallback
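The hybrid routing decision described above can be sketched as a small function. This is a simplified illustration, not the project's LiteLLM configuration: the backend names, the `sensitive` flag and the use of prompt length as a stand-in for "complexity" are all assumptions.

```python
from dataclasses import dataclass

LOCAL_BACKEND = "ollama-local"   # hypothetical name for the in-house Ollama route
CLOUD_BACKEND = "cloud-api"      # hypothetical name for a public API route
MAX_CLOUD_PROMPT_CHARS = 500     # assumed threshold separating simple from complex requests


@dataclass
class ChatRequest:
    prompt: str
    sensitive: bool = False  # set by the caller when the data must stay on-premises


def choose_backend(request):
    """Keep private or complex requests local; send simple, low-sensitivity ones to the cloud."""
    if request.sensitive:
        return LOCAL_BACKEND
    if len(request.prompt) > MAX_CLOUD_PROMPT_CHARS:
        return LOCAL_BACKEND
    return CLOUD_BACKEND
```

For example, `choose_backend(ChatRequest("Summarise this contract", sensitive=True))` stays on the local route regardless of length, while a short non-sensitive question goes to the cloud route.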

SUSE Observability MCP server by drutigliano

Description

The idea is to implement the SUSE Observability Model Context Protocol (MCP) Server as a specialized, middle-tier API designed to translate the complex, high-cardinality observability data from StackState (topology, metrics, and events) into highly structured, contextually rich, and LLM-ready snippets.

This MCP Server abstracts the StackState APIs. Its primary function is to serve as a Tool/Function Calling target for AI agents. When an AI receives an alert or a user query (e.g., "What caused the outage?"), the AI calls an MCP Server endpoint. The server then fetches the relevant operational facts, summarizes them, normalizes technical identifiers (like URNs and raw metric names) into natural language concepts, and returns a concise JSON or YAML payload. This payload is then injected directly into the LLM's prompt, ensuring the final diagnosis or action is grounded in real-time, accurate SUSE Observability data, effectively minimizing hallucinations.

Goals

- Grounding AI Responses: Ensure that all AI diagnoses, root cause analyses, and action recommendations are strictly based on verifiable, real-time data retrieved from the SUSE Observability StackState platform.

- Simplifying Data Access: Abstract the complexity of StackState's native APIs (e.g., Time Travel, 4T Data Model) into simple, semantic functions that can be easily invoked by LLM tool-calling mechanisms.

- Data Normalization: Convert complex, technical identifiers (like component URNs, raw metric names, and proprietary health states) into standardized, natural language terms that an LLM can easily reason over.

- Enabling Automated Remediation: Define clear, action-oriented MCP endpoints (e.g., execute_runbook) that allow the AI agent to initiate automated operational workflows (e.g., restarts, scaling) after a diagnosis, closing the loop on observability.

Hackweek STEP

- Create a functional MCP endpoint exposing one (or more) tool(s) to answer queries like "What is the health of service X?" by fetching, normalizing, and returning live StackState data in an LLM-ready format.

Scope

- Implement read-only MCP server that can:

- Connect to a live SUSE Observability instance and authenticate (with API token)

- Use tools to fetch data for a specific component URN (e.g., current health state, metrics, possibly topology neighbors, ...).

- Normalize response fields (e.g., URN to "Service Name," health state DEVIATING to "Unhealthy", raw metrics).

- Return the data as a structured JSON payload compliant with the MCP specification.
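The normalization step in this scope can be illustrated with a short sketch. It is written in Python for brevity, whereas the deliverable is a Golang server, so this is not the project's code. Only the DEVIATING to "Unhealthy" mapping comes from the description above; the other health labels, the example URN and the rule for deriving a service name from it are assumptions.

```python
import json

# DEVIATING -> "Unhealthy" is taken from the scope above; the other entries are assumed.
HEALTH_LABELS = {
    "CLEAR": "Healthy",
    "DEVIATING": "Unhealthy",
    "CRITICAL": "Critical",
}


def service_name_from_urn(urn):
    """Use the last ':'- or '/'-separated segment of the URN as a readable name (assumed rule)."""
    return urn.replace("/", ":").rstrip(":").split(":")[-1]


def normalize_component(raw):
    """Turn a raw component record into a small, LLM-friendly dictionary."""
    return {
        "service_name": service_name_from_urn(raw["urn"]),
        "health": HEALTH_LABELS.get(raw.get("health", ""), "Unknown"),
    }


# Hypothetical raw record; real API responses will have a different shape.
raw_component = {"urn": "urn:service:/shop:checkout", "health": "DEVIATING"}
payload = json.dumps(normalize_component(raw_component), indent=2)
```

Here `payload` is the JSON text a tool would hand back to the agent; unknown health states fall back to "Unknown" rather than raising, so a new state on the server side does not break the tool.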

Deliverables

- MCP Server v0.1: A running Golang MCP server with at least one tool.

- A README.md and a test script (e.g., curl commands or a simple notebook) showing how an AI agent would call the endpoint and the resulting JSON payload.

Outcome: A functional and testable API endpoint that proves the core concept: translating complex StackState data into a simple, LLM-ready format. This provides the foundation for developing AI-driven diagnostics and automated remediation.

Resources

- https://www.honeycomb.io/blog/its-the-end-of-observability-as-we-know-it-and-i-feel-fine

- https://www.datadoghq.com/blog/datadog-remote-mcp-server

- https://modelcontextprotocol.io/specification/2025-06-18/index

- https://modelcontextprotocol.io/docs/develop/build-server

Basic implementation

- https://github.com/drutigliano19/suse-observability-mcp-server

Results

Successfully developed and delivered a fully functional SUSE Observability MCP Server that bridges language models with SUSE Observability's operational data. This project demonstrates how AI agents can perform intelligent troubleshooting and root cause analysis using structured access to real-time infrastructure data.

Example execution

Try out Neovim Plugins supporting AI Providers by enavarro_suse

Description

Experiment with several Neovim plugins that integrate AI model providers such as Gemini and Ollama.

Goals

Evaluate how these plugins enhance the development workflow, how they differ in capabilities, and how smoothly they integrate into Neovim for day-to-day coding tasks.

Resources

- Neovim 0.11.5

- AI-enabled Neovim plugins:

- avante.nvim: https://github.com/yetone/avante.nvim

- Gp.nvim: https://github.com/Robitx/gp.nvim

- parrot.nvim: https://github.com/frankroeder/parrot.nvim

- gemini.nvim: https://dotfyle.com/plugins/kiddos/gemini.nvim

- ...

- Accounts or API keys for AI model providers.

- Local model serving setup (e.g., Ollama)

- Test projects or codebases for practical evaluation:

- OBS: https://build.opensuse.org/

- OBS blog and landing page: https://openbuildservice.org/

- ...

Help Create A Chat Control Resistant Turnkey Chatmail/Deltachat Relay Stack - Rootless Podman Compose, OpenSUSE BCI, Hardened, & SELinux by 3nd5h1771fy

Description

The Mission: Decentralized & Sovereign Messaging

FYI: If you have never heard of "Chatmail", you can visit their site here, but simply put, it can be thought of as the underlying protocol/platform that decentralized messengers like DeltaChat use for their communications. Do not confuse it with the honeypot-looking, non-open-source, paid product with better SEO that directs you to chatmailsecure(dot)com.

In an era of increasing centralized surveillance by unaccountable bad actors (aka BigTech), "Chat Control," and the erosion of digital privacy, the need for sovereign communication infrastructure is critical. Chatmail is a pioneering initiative that bridges the gap between classic email and modern instant messaging, offering metadata-minimized, end-to-end encrypted (E2EE) communication that is interoperable and open.

However, unless you are a seasoned sysadmin, the current recommended deployment method of a Chatmail relay is rigid, fragile, difficult to properly secure, and effectively takes over the entire host the "relay" is deployed on.

Why This Matters

A simple, host agnostic, reproducible deployment lowers the entry cost for anyone wanting to run a privacy‑preserving, decentralized messaging relay. In an era of perpetually resurrected chat‑control legislation threats, EU digital‑sovereignty drives, and many dangers of using big‑tech messaging platforms (Apple iMessage, WhatsApp, FB Messenger, Instagram, SMS, Google Messages, etc...) for any type of communication, providing an easy‑to‑use alternative empowers:

- Censorship resistance - No single entity controls the relay; operators can spin up new nodes quickly.

- Surveillance mitigation - End‑to‑end OpenPGP encryption ensures relay operators never see plaintext.

- Digital sovereignty - Communities can host their own infrastructure under local jurisdiction, aligning with national data‑policy goals.

By turning the Chatmail relay into a plug‑and‑play container stack, we enable broader adoption, foster a resilient messaging fabric, and give developers, activists, and hobbyists a concrete tool to defend privacy online.

Goals

As I indicated earlier, this project aims to drastically simplify the deployment of Chatmail relay. By converting this architecture into a portable, containerized stack using Podman and OpenSUSE base container images, we can allow anyone to deploy their own censorship-resistant, privacy-preserving communications node in minutes.

Our goal for Hack Week: package every component into containers built on openSUSE/MicroOS base images, initially orchestrated with a single container-compose.yml (podman-compose compatible). The stack will:

- Run on any host that supports Podman (including optimizations and enhancements for SELinux‑enabled systems).

- Allow network decoupling by refactoring configurations to move from file-system constrained Unix sockets to internal TCP networking, allowing containers to achieve stricter isolation.

- Utilize enhanced security with SELinux: by using purpose-built utilities such as udica, we can quickly generate custom SELinux policies for the container stack, ensuring strict confinement superior to standard/typical Docker deployments.

- Allow the use of bind or remote mounted volumes for shared data (/var/vmail, DKIM keys, TLS certs, etc.).

- Replace the local DNS server requirement with a remote DNS‑provider API for DKIM/TXT record publishing.

By delivering a turnkey, host agnostic, reproducible deployment, we lower the barrier for individuals and small communities to launch their own chatmail relays, fostering a decentralized, censorship‑resistant messaging ecosystem that can serve DeltaChat users and/or future services adopting this protocol.

Resources

- The links included above

- https://chatmail.at/doc/relay/

- https://delta.chat/en/help

- Project repo -> https://codeberg.org/EndShittification/containerized-chatmail-relay

Update M2Crypto by mcepl

There are a couple of projects I work on that need my attention to get them into shape:

Goal for this Hackweek

- Put M2Crypto into better shape (most issues closed, all pull requests processed)

- More fun to learn jujutsu

- Play more with Gemini, to see how much it helps (or not).

- Perhaps, also (just slightly related), help to fix vis to work with LuaJIT, particularly to make vis-lspc working.

Collection and organisation of information about Bulgarian schools by iivanov

Description

To achieve this, it will be necessary to:

- Collect/download raw data from various government and non-governmental organizations

- Clean up raw data and organise it in some kind of database.

- Create a tool to make queries easy.

- Or perhaps dump all data into AI and ask questions in natural language.

Goals

When a particular school is selected, information like this will be provided:

- School scores on national exams.

- School scores from the external evaluations exams.

- School town, municipality and region.

- Employment rate in a town or municipality.

- Average health of the population in the region.

Resources

Some of these are available only in Bulgarian.

- https://danybon.com/klasazia

- https://nvoresults.com/index.html

- https://ri.mon.bg/active-institutions

- https://www.nsi.bg/nrnm/ekatte/archive

Results

- Information about all Bulgarian schools with their scores during recent years cleaned and organised into SQL tables

- Information about all Bulgarian villages, cities, municipalities and districts cleaned and organised into SQL tables

- Census information for all Bulgarian villages and cities since the beginning of this century, cleaned and organised into SQL tables.

- Information about religion and ethnicity for all Bulgarian municipalities, cleaned and organised into SQL tables.

- Data successfully loaded into a locally running Ollama with the help of Vanna.AI

- Seems to be usable.

TODO

- Add more statistical information about municipalities and ....

Code and data

Bring to Cockpit + System Roles capabilities from YAST by miguelpc

Bring to Cockpit + System Roles features from YAST

Cockpit and System Roles have been added to SLES 16. There are several capabilities in YAST that are not yet present in Cockpit and System Roles. We will follow the principle of "automate first, UI later", with System Roles being the automation component and Cockpit the UI one.

Goals

The idea is to implement service configuration in System Roles and then add a UI to manage these in Cockpit. Some capabilities will require a specific Cockpit module, as they will interact with a resource that is already configured.

Resources

A plan on capabilities missing and suggested implementation is available here: https://docs.google.com/spreadsheets/d/1ZhX-Ip9MKJNeKSYV3bSZG4Qc5giuY7XSV0U61Ecu9lo/edit

Linux System Roles:

- https://linux-system-roles.github.io/

- https://build.opensuse.org/package/show/openSUSE:Factory/ansible-linux-system-roles (package on SLE 16: ansible-linux-system-roles)

First meeting Hackweek catchup

- Monday, December 1 · 11:00 – 12:00

- Time zone: Europe/Madrid

- Google Meet link: https://meet.google.com/rrc-kqch-hca

Docs Navigator MCP: SUSE Edition by mackenzie.techdocs

Description

Docs Navigator MCP: SUSE Edition is an AI-powered documentation navigator that makes finding information across SUSE, Rancher, K3s, and RKE2 documentation effortless. Built as a Model Context Protocol (MCP) server, it enables semantic search, intelligent Q&A, and documentation summarization using 100% open-source AI models (no API keys required!). The project also allows you to bring your own keys from Anthropic and OpenAI for parallel processing.

Goals

- [ X ] Build functional MCP server with documentation tools

- [ X ] Implement semantic search with vector embeddings

- [ X ] Create user-friendly web interface

- [ X ] Optimize indexing performance (parallel processing)

- [ X ] Add SUSE branding and polish UX

- [ X ] Stretch Goal: Add more documentation sources

- [ X ] Stretch Goal: Implement document change detection for auto-updates

Coming Soon!

- Community Feedback: Test with real users and gather improvement suggestions

Resources

- Repository: Docs Navigator MCP: SUSE Edition GitHub

- UI Demo: Live UI Demo of Docs Navigator MCP: SUSE Edition

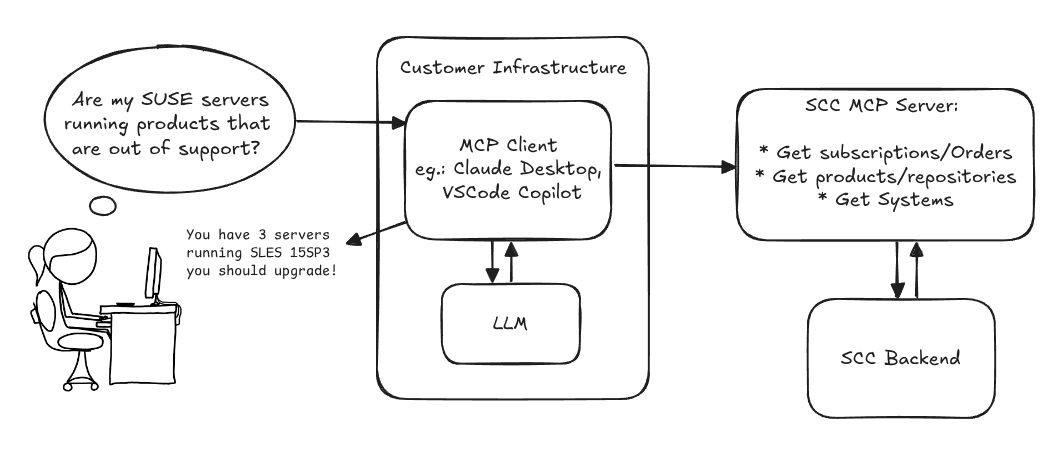

MCP Server for SCC by digitaltomm

Description

Provide an MCP Server implementation for customers to access data on scc.suse.com via MCP protocol. The core benefit of this MCP interface is that it has direct (read) access to customer data in SCC, so the AI agent gets enhanced knowledge about individual customer data, like subscriptions, orders and registered systems.

Architecture

Goals

We want to demonstrate a proof of concept to connect to the SCC MCP server with any AI agent, for example gemini-cli or codex. Enabling the user to ask questions regarding their SCC inventory.

For this Hackweek, we target that users get proper responses to these example questions:

- Which of my currently active systems are running products that are out of support?

- Do I have ready to use registration codes for SLES?

- What are the latest 5 released patches for SLES 15 SP6? Output as a list with release date, patch name, affected package names and fixed CVEs.

- Which versions of kernel-default are available on SLES 15 SP6?

Technical Notes

Similar to the organization APIs, this can expose to customers data about their subscriptions, orders, systems and products. Authentication should be done by organization credentials, similar to what needs to be provided to RMT/MLM. Customers can connect to the SCC MCP server from their own MCP-compatible client and Large Language Model (LLM), so no third party is involved.
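As a rough sketch of what a client sends to such a server: MCP messages are JSON-RPC 2.0, and a tool is invoked with the `tools/call` method. The tool name `list_systems`, its arguments, and the use of HTTP Basic authentication for the organization credentials are assumptions made for illustration only; the actual SCC MCP server may name its tools and handle authentication differently.

```python
import base64
import json


def basic_auth_header(username, password):
    """Build an HTTP Basic Authorization header value (assumed auth scheme)."""
    raw = f"{username}:{password}".encode("utf-8")
    return "Basic " + base64.b64encode(raw).decode("ascii")


def tool_call_request(request_id, tool_name, arguments):
    """Build a JSON-RPC 2.0 'tools/call' message as defined by MCP."""
    return {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "tools/call",
        "params": {"name": tool_name, "arguments": arguments},
    }


# Hypothetical tool name and arguments, not confirmed by the project.
message = tool_call_request(1, "list_systems", {"status": "active"})
body = json.dumps(message)
```

In normal use an MCP-compatible client such as Gemini CLI or Codex builds these messages itself, so the user only needs to configure the server URL and credentials.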

Milestones

- [x] Basic MCP API setup

- MCP endpoints

  - [x] Products / Repositories

  - [x] Subscriptions / Orders

  - [x] Systems

  - [x] Packages

- [x] Document usage with Gemini CLI, Codex

Resources

Gemini CLI setup:

~/.gemini/settings.json:

Try AI training with ROCm and LoRA by bmwiedemann

Description

I want to set up a Radeon RX 9060 XT 16 GB at home with ROCm on Slowroll.

Goals

I want to test how fast AI inference can get with the GPU and if I can use LoRA to re-train an existing free model for some task.

Resources

- https://rocm.docs.amd.com/en/latest/compatibility/compatibility-matrix.html

- https://build.opensuse.org/project/show/science:GPU:ROCm

- https://src.opensuse.org/ROCm/

- https://www.suse.com/c/lora-fine-tuning-llms-for-text-classification/

Results

got inference working with llama.cpp:

export LLAMACPP_ROCM_ARCH=gfx1200
HIPCXX="$(hipconfig -l)/clang" HIP_PATH="$(hipconfig -R)" \
  cmake -S . -B build -DGGML_HIP=ON -DAMDGPU_TARGETS=$LLAMACPP_ROCM_ARCH \
  -DCMAKE_BUILD_TYPE=Release -DLLAMA_CURL=ON \
  -Dhipblas_DIR=/usr/lib64/cmake/hipblaslt/ \
  && cmake --build build --config Release -j8

m=models/gpt-oss-20b-mxfp4.gguf
cd $P/llama.cpp && build/bin/llama-server --model $m --threads 8 --port 8005 --host 0.0.0.0 --device ROCm0 --n-gpu-layers 999

Without the --device option it faulted. Maybe because my APU also appears there?
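Once `llama-server` is listening on port 8005, it can be queried through its OpenAI-compatible `/v1/chat/completions` endpoint. The following is a minimal sketch using only the Python standard library. The host `127.0.0.1`, the `max_tokens` value and the function names are assumptions for illustration.

```python
import json
import urllib.request


def build_chat_request(prompt, host="127.0.0.1", port=8005):
    """Build a POST request for llama-server's OpenAI-compatible chat endpoint."""
    body = json.dumps({
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": 128,  # illustrative limit, adjust as needed
    }).encode("utf-8")
    return urllib.request.Request(
        f"http://{host}:{port}/v1/chat/completions",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask(prompt):
    """Send the prompt to the running server and return the reply text."""
    with urllib.request.urlopen(build_chat_request(prompt), timeout=120) as resp:
        reply = json.load(resp)
    return reply["choices"][0]["message"]["content"]
```

With the server from the command above running, `print(ask("Say hello"))` should print the model's answer.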

I updated/fixed various related packages: https://src.opensuse.org/ROCm/rocm-examples/pulls/1 https://src.opensuse.org/ROCm/hipblaslt/pulls/1 SR 1320959

benchmark

I benchmarked inference with llama.cpp + gpt-oss-20b-mxfp4.gguf and ROCm offloading to a Radeon RX 9060 XT 16GB. I varied the number of layers that went to the GPU:

- 0 layers 14.49 tokens/s (8 CPU cores)

- 9 layers 17.79 tokens/s 34% VRAM

- 15 layers 22.39 tokens/s 51% VRAM

- 20 layers 27.49 tokens/s 64% VRAM

- 24 layers 41.18 tokens/s 74% VRAM

- 25+ layers 86.63 tokens/s 75% VRAM (only 200% CPU load)

So there is a significant performance boost once the whole model fits into the GPU's VRAM: throughput roughly doubles between 24 and 25+ layers (41.18 to 86.63 tokens/s).
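The relative gains can be worked out from the figures above with a few lines of Python. The numbers are the ones from the benchmark list; the "25+" row is entered as 25, and the helper name is just for illustration.

```python
# (GPU layers, tokens per second) taken from the benchmark list above.
BENCHMARK = [
    (0, 14.49),
    (9, 17.79),
    (15, 22.39),
    (20, 27.49),
    (24, 41.18),
    (25, 86.63),  # stands for "25+ layers", i.e. the whole model on the GPU
]


def speedups(rows):
    """Return (layers, speedup over the first row) for every row."""
    baseline = rows[0][1]
    return [(layers, tps / baseline) for layers, tps in rows]


for layers, factor in speedups(BENCHMARK):
    print(f"{layers:>2} layers: {factor:.2f}x the CPU-only rate")
```

Full offloading comes out at about 6x the CPU-only rate, while offloading 24 layers only reaches about 2.8x.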

Local AI assistant with optional integrations and mobile companion by livdywan

Description

Set up a local AI assistant for research, brainstorming and proofreading. Look into SurfSense, Open WebUI and possibly alternatives. Explore integration with services like openQA. There should be no cloud dependencies. Mobile phone support or an additional companion app would be a bonus. The goal is not to develop everything from scratch.

User Story

- Allison Average wants a one-click local AI assistant on their openSUSE laptop.

- Ash Awesome wants AI on their phone without an expensive subscription.

Goals

- Evaluate a local SurfSense setup for day to day productivity

- Test opencode for vibe coding and tool calling

Timeline

Day 1

- Took a look at SurfSense and started setting up a local instance.

- Unfortunately the container setup did not work well. Though this was a great opportunity to learn some new podman commands and refresh my memory on how to recover a corrupted btrfs filesystem.

Day 2

- Due to its sheer size and complexity SurfSense seems to have triggered btrfs fragmentation. Naturally this was not visible in any podman-related errors or in the journal. So this took up much of my second day.

Day 3

- Trying out opencode with Qwen3-Coder and Qwen2.5-Coder.

Day 4

- Context size is a thing, and models are not equally usable for vibe coding.

- Through arduous browsing for ollama models I did find some like myaniu/qwen2.5-1m:7b with 1m, but even then it is not obvious if they are meant for tool calls.
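For reference, the context window can also be requested per call through the `num_ctx` option of Ollama's `/api/chat` endpoint. The sketch below only builds the request payload. The value 32768 and the function name are assumptions, and whether a given model copes well with a larger window, or with tool calls, still has to be tested per model.

```python
import json


def build_chat_payload(prompt, model="myaniu/qwen2.5-1m:7b", num_ctx=32768):
    """Build a payload for Ollama's /api/chat with an explicit context size."""
    if num_ctx <= 0:
        raise ValueError("num_ctx must be a positive number of tokens")
    return {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "stream": False,                  # ask for a single JSON response
        "options": {"num_ctx": num_ctx},  # context window in tokens
    }


payload = build_chat_payload("Summarize this repository layout.")
body = json.dumps(payload)
```

The resulting JSON would be sent as a POST to `http://localhost:11434/api/chat`, assuming Ollama runs on its default port.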

Day 5

- Whilst trying to make opencode usable I discovered ramalama which worked instantly and very well.

Outcomes

surfsense

I could not easily set this up completely. Maybe in part due to my filesystem issues. Was expecting this to be less of an effort.

opencode

Installing opencode and ollama in my distrobox container along with the following configs worked for me.

When preparing a new project from scratch it is a good idea to start out with a template.

opencode.json

``` {

SUSE Edge Image Builder MCP by eminguez

Description

Based on my other hackweek project, SUSE Edge Image Builder's JSON Schema, I would also like to build an MCP to be able to generate EIB config files the AI way.

Realistically I don't think I'll be able to have something consumable at the end of this hackweek but at least I would like to start exploring MCPs, the difference between an API and MCP, etc.

Goals

- Familiarize myself with MCPs

- Unrealistic: Have an MCP that can generate an EIB config file

Resources

Result

https://github.com/e-minguez/eib-mcp

I've extensively used antigravity and its agent mode to code this. This heavily uses https://hackweek.opensuse.org/25/projects/suse-edge-image-builder-json-schema for the MCP to be built.

I've ended up learning a lot of things about "prompting", json schemas in general, some golang, MCPs and AI in general :)

Example:

Generate an Edge Image Builder configuration for an ISO image based on slmicro-6.2.iso, targeting x86_64 architecture. The output name should be 'my-edge-image' and it should install to /dev/sda. It should deploy a 3 nodes kubernetes cluster with nodes names "node1", "node2" and "node3" as:

* hostname: node1, IP: 1.1.1.1, role: initializer

* hostname: node2, IP: 1.1.1.2, role: agent

* hostname: node3, IP: 1.1.1.3, role: agent

The kubernetes version should be k3s 1.33.4-k3s1 and it should deploy a cert-manager helm chart (the latest one available according to https://cert-manager.io/docs/installation/helm/). It should create a user called "suse" with password "suse" and set ntp to "foo.ntp.org". The VIP address for the API should be 1.2.3.4

Generates:

``` apiVersion: "1.0" image: arch: x86_64 baseImage: slmicro-6.2.iso imageType: iso outputImageName: my-edge-image kubernetes: helm: charts: - name: cert-manager repositoryName: jetstack