Project Description

I keep all my photos on a private NAS running Nextcloud.

This NAS has an ARM CPU and 1 GB of RAM, so I cannot run the face recognition plugin: it requires a GPU and 2 GB of RAM, and PDLib is not available for this architecture (I know I could build and package it ... but that doesn't sound fun ;) ).

However, I have a Coral TPU connected to a USB port (thanks to my super friend Marc!):

https://coral.ai/products/accelerator

I could run TensorFlow Lite on it... you see where this is going, don't you?

Goal for this Hackweek

The goal is to run face recognition on the Coral TPU using TensorFlow Lite and then use the Nextcloud API to tag the images.
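Between the TPU inference and the tagging call there is a matching step: deciding which known person a detected face belongs to. A minimal sketch of that step, assuming the model yields one embedding vector per face and that matching is done by cosine similarity (the function names, the 0.6 threshold, and the tiny example vectors are all hypothetical, not part of the project):

```python
import math


def cosine_similarity(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


def best_match(embedding, known_faces, threshold=0.6):
    """Return the most similar known name, or None if nothing reaches the threshold."""
    best_name, best_score = None, threshold
    for name, reference in known_faces.items():
        score = cosine_similarity(embedding, reference)
        if score >= best_score:
            best_name, best_score = name, score
    return best_name


# Hypothetical 3-dimensional embeddings, chosen only to keep the example short.
known_faces = {
    "alice": [1.0, 0.0, 0.0],
    "bob": [0.0, 1.0, 0.0],
}
tag = best_match([0.9, 0.1, 0.0], known_faces)
```

The returned name (or `None` for an unknown face) is what would then be handed to the Nextcloud tagging step.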

Resources

This project is part of:

Hack Week 20


Similar Projects

Kubernetes-Based ML Lifecycle Automation by lmiranda

Description

This project aims to build a complete end-to-end Machine Learning pipeline running entirely on Kubernetes, using Go and containerized ML components.

The pipeline will automate the lifecycle of a machine learning model, including:

- Data ingestion/collection

- Model training as a Kubernetes Job

- Model artifact storage in an S3-compatible registry (e.g. Minio)

- A Go-based deployment controller that automatically deploys new model versions to Kubernetes using Rancher

- A lightweight inference service that loads and serves the latest model

- Monitoring of model performance and service health through Prometheus/Grafana

The outcome is a working prototype of an MLOps workflow that demonstrates how AI workloads can be trained, versioned, deployed, and monitored using the Kubernetes ecosystem.

Goals

By the end of Hack Week, the project should:

Produce a fully functional ML pipeline running on Kubernetes with:

- Data collection job

- Training job container

- Storage and versioning of trained models

- Automated deployment of new model versions

- Model inference API service

- Basic monitoring dashboards

Showcase a Go-based deployment automation component, which scans the model registry and automatically generates & applies Kubernetes manifests for new model versions.
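The scan-and-generate idea can be sketched as follows. The project's component is written in Go; this sketch is in Python purely for illustration, and the registry key layout (`models/<name>/v<N>/model.bin`), the `MODEL_KEY` variable, and the container image are assumptions, not the project's actual conventions:

```python
import re

# Assumed key layout in the S3-compatible registry (hypothetical).
VERSION_PATTERN = re.compile(
    r"^models/(?P<name>[a-z0-9-]+)/v(?P<version>\d+)/model\.bin$"
)


def latest_versions(object_keys):
    """Map each model name to the highest version number found in the keys."""
    latest = {}
    for key in object_keys:
        match = VERSION_PATTERN.match(key)
        if match is None:
            continue  # ignore objects that are not model artifacts
        name = match.group("name")
        version = int(match.group("version"))
        if version > latest.get(name, 0):
            latest[name] = version
    return latest


def deployment_manifest(name, version, image="example.invalid/inference:latest"):
    """Build an apps/v1 Deployment (as a dict) serving one model version."""
    labels = {"app": name, "model-version": f"v{version}"}
    model_key = f"models/{name}/v{version}/model.bin"
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": f"{name}-inference", "labels": labels},
        "spec": {
            "replicas": 1,
            "selector": {"matchLabels": {"app": name}},
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [
                        {
                            "name": "inference",
                            "image": image,
                            # Hypothetical variable read by the inference service.
                            "env": [{"name": "MODEL_KEY", "value": model_key}],
                        }
                    ]
                },
            },
        },
    }


keys = [
    "models/churn/v1/model.bin",
    "models/churn/v3/model.bin",
    "models/churn/v2/model.bin",
    "README.md",
]
manifests = [deployment_manifest(n, v) for n, v in latest_versions(keys).items()]
```

A real controller would list the bucket, compare the result with what is already deployed, and apply only the manifests that changed.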

Enable continuous improvement by making the system modular and extensible (e.g., additional models, metrics, autoscaling, or drift detection can be added later).

Prepare a short demo explaining the end-to-end process and how new models flow through the system.

Resources

Updates

- Training pipeline and datasets

- Inference Service py

Exploring Modern AI Trends and Kubernetes-Based AI Infrastructure by jluo

Description

Build a solid understanding of the current landscape of Artificial Intelligence and how modern cloud-native technologies—especially Kubernetes—support AI workloads.

Goals

Use Gemini Learning Mode to guide the exploration, surface relevant concepts, and structure the learning journey:

- Gain insight into the latest AI trends, tools, and architectural concepts.

- Understand how Kubernetes and related cloud-native technologies are used in the AI ecosystem (model training, deployment, orchestration, MLOps).

Resources

Red Hat AI Topic Articles

- https://www.redhat.com/en/topics/ai

Kubeflow Documentation

- https://www.kubeflow.org/docs/

Q4 2025 CNCF Technology Landscape Radar report:

- https://www.cncf.io/announcements/2025/11/11/cncf-and-slashdata-report-finds-leading-ai-tools-gaining-adoption-in-cloud-native-ecosystems/

- https://www.cncf.io/wp-content/uploads/2025/11/cncfreporttechradar_111025a.pdf

Agent-to-Agent (A2A) Protocol

- https://developers.googleblog.com/en/a2a-a-new-era-of-agent-interoperability/

MCP Trace Suite by r1chard-lyu

Description

This project plans to create an MCP Trace Suite, a system that consolidates commonly used Linux debugging tools such as bpftrace, perf, and ftrace.

The suite is implemented as an MCP Server. This architecture allows an AI agent to diagnose Linux issues and perform targeted system debugging by remotely executing these tools through the server and retrieving their tracing data.

- Repo: https://github.com/r1chard-lyu/systracesuite

- Demo: Slides

Goals

Build an MCP Server that can integrate various Linux debugging and tracing tools, including bpftrace, perf, ftrace, strace, and others, with support for future expansion of additional tools.
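One piece any such server needs is a guard between the agent's request and the command that actually runs. A minimal sketch, assuming tools are invoked as subprocesses from an allowlist (the names `ALLOWED_TOOLS`, `build_command`, and `run_tool` are hypothetical and not taken from the project's repository; the MCP wiring itself is left out):

```python
import subprocess

# Tools the server is willing to launch; anything else is rejected.
ALLOWED_TOOLS = ("bpftrace", "perf", "strace")


def build_command(tool, args):
    """Validate a tool request and return an argv list (no shell involved)."""
    if tool not in ALLOWED_TOOLS:
        raise ValueError(f"tool not allowed: {tool!r}")
    if not all(isinstance(arg, str) for arg in args):
        raise ValueError("all arguments must be strings")
    return [tool, *args]


def run_tool(tool, args, timeout_seconds=30):
    """Run an allowed tool and return its exit code, stdout, and stderr."""
    completed = subprocess.run(
        build_command(tool, args),
        capture_output=True,
        text=True,
        timeout=timeout_seconds,
        check=False,
    )
    return completed.returncode, completed.stdout, completed.stderr


argv = build_command("perf", ["stat", "--", "sleep", "1"])
```

Passing an argv list rather than a shell string means the agent's arguments are never interpreted by a shell. The timeout keeps a runaway trace from blocking the server.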

Perform testing by intentionally creating bugs or issues that impact system performance, allowing an AI agent to analyze the root cause and identify the underlying problem.

Resources

- Gemini CLI: https://geminicli.com/

- eBPF: https://ebpf.io/

- bpftrace: https://github.com/bpftrace/bpftrace/

- perf: https://perfwiki.github.io/main/

- ftrace: https://github.com/r1chard-lyu/tracium/

MCP Server for SCC by digitaltomm

Description

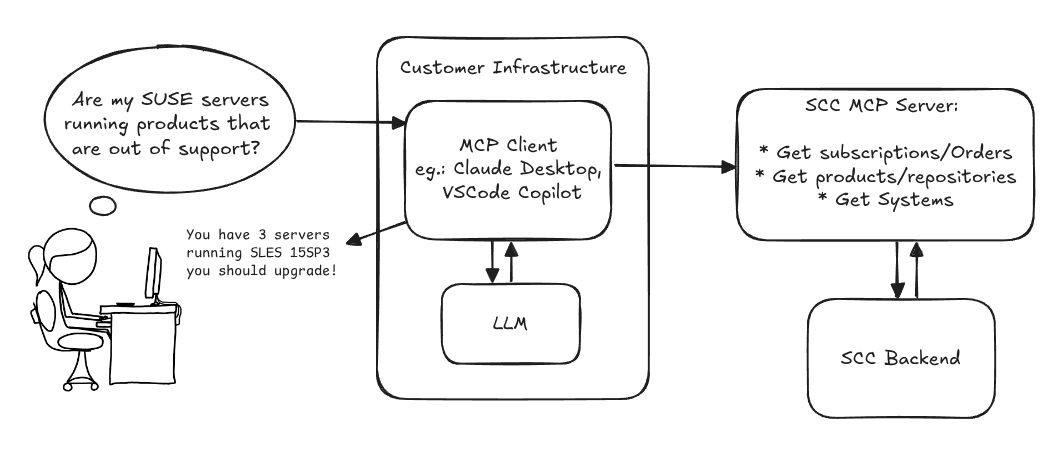

Provide an MCP Server implementation that lets customers access data on scc.suse.com via MCP. The core benefit of this MCP interface is that it has direct (read) access to customer data in SCC, so the AI agent gets enhanced knowledge about individual customer data, like subscriptions, orders and registered systems.

Architecture

Goals

We want to demonstrate a proof of concept that connects any AI agent, for example gemini-cli or codex, to the SCC MCP server, enabling users to ask questions about their SCC inventory.

For this Hackweek, the target is for users to get proper responses to these example questions:

- Which of my currently active systems are running products that are out of support?

- Do I have ready-to-use registration codes for SLES?

- What are the latest 5 released patches for SLES 15 SP6? Output as a list with release date, patch name, affected package names and fixed CVEs.

- Which versions of kernel-default are available on SLES 15 SP6?

Technical Notes

Similar to the organization APIs, this can expose to customers the data about their subscriptions, orders, systems and products. Authentication should use organization credentials, similar to what needs to be provided to RMT/MLM. Customers can connect to the SCC MCP server from their own MCP-compatible client and Large Language Model (LLM), so no third party is involved.

Milestones

- [x] Basic MCP API setup

- MCP endpoints

  - [x] Products / Repositories

  - [x] Subscriptions / Orders

  - [x] Systems

  - [x] Packages

- [x] Document usage with Gemini CLI, Codex

Resources

Gemini CLI setup:

~/.gemini/settings.json:

Self-Scaling LLM Infrastructure Powered by Rancher by ademicev0

Description

The Problem

Running LLMs can get expensive and complex pretty quickly.

Today there are typically two choices:

- Use cloud APIs like OpenAI or Anthropic. Easy to start with, but costs add up at scale.

- Self-host everything - set up Kubernetes, figure out GPU scheduling, handle scaling, manage model serving... it's a lot of work.

What if there was a middle ground?

What if infrastructure scaled itself instead of making you scale it?

Can we use existing Rancher capabilities like CAPI, autoscaling, and GitOps to make this simpler instead of building everything from scratch?

Project Repository: github.com/alexander-demicev/llmserverless

What This Project Does

A key feature is hybrid deployment: requests can be routed based on complexity or privacy needs. Simple or low-sensitivity queries can use public APIs (like OpenAI), while complex or private requests are handled in-house on local infrastructure. This flexibility allows balancing cost, privacy, and performance - using cloud for routine tasks and on-premises resources for sensitive or demanding workloads.

A complete, self-scaling LLM infrastructure that:

- Scales to zero when idle (no idle costs)

- Scales up automatically when requests come in

- Adds more nodes when needed, removes them when demand drops

- Runs on any infrastructure - laptop, bare metal, or cloud

Think of it as "serverless for LLMs" - focus on building, the infrastructure handles itself.

How It Works

A combination of open source tools working together:

Flow:

- Users interact with OpenWebUI (chat interface)

- Requests go to LiteLLM Gateway

- LiteLLM routes requests to:

  - Ollama (Knative) for local model inference (auto-scales pods)

  - Or cloud APIs for fallback
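The hybrid routing described above (private or complex requests stay local, simple ones may go to a public API) can be sketched as a small decision function. This is only an illustration: the `Request` type, the `sensitive` flag, and the use of prompt length as a stand-in for "complexity" are assumptions, not how the project's LiteLLM configuration works.

```python
from dataclasses import dataclass


@dataclass
class Request:
    prompt: str
    sensitive: bool = False  # hypothetical flag set by the caller


def choose_backend(request, max_cloud_prompt_chars=500):
    """Return "local" or "cloud" for a request.

    Sensitive requests never leave local infrastructure. Prompt length is
    used here as a crude, assumed proxy for complexity.
    """
    if request.sensitive:
        return "local"
    if len(request.prompt) > max_cloud_prompt_chars:
        return "local"
    return "cloud"


routes = [
    choose_backend(Request("What is the capital of France?")),
    choose_backend(Request("Summarize this contract", sensitive=True)),
    choose_backend(Request("x" * 2000)),
]
```

Checking the privacy flag first means a short but sensitive prompt is never sent to a public API, whatever the complexity heuristic says.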

Uyuni Health-check Grafana AI Troubleshooter by ygutierrez

Description

This project explores the feasibility of using the open-source Grafana LLM plugin to enhance the Uyuni Health-check tool with LLM capabilities. The idea is to integrate a chat-based "AI Troubleshooter" directly into existing dashboards, allowing users to ask natural-language questions about errors, anomalies, or performance issues.

Goals

- Investigate if and how the grafana-llm-app plug-in can be used within the Uyuni Health-check tool.

- Investigate if this plug-in can be used to query LLMs for troubleshooting scenarios.

- Evaluate support for local LLMs and external APIs through the plugin.

- Evaluate if and how the Uyuni MCP server could be integrated as another source of information.

Resources