Project Description

This Hack Week I want to figure out how to best use Playwright to test Mojolicious applications like openQA in unit tests. Playwright is a (mostly better) alternative to Selenium for browser automation. I'd like to find a way to write entire unit tests in JavaScript and have them run right next to the existing Perl tests, with the same test runner, using Node Tap and the Test Anything Protocol (TAP).
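
The shared test runner only reads TAP from standard output. So a JavaScript or TypeScript test file can run next to the Perl tests as long as it prints valid TAP. The TypeScript sketch below writes that format by hand to show what it looks like. Node Tap produces this output itself, and the TapWriter class is hypothetical:

```typescript
// Minimal Test Anything Protocol (TAP) producer. Node Tap normally generates
// this output itself; the sketch only shows what a TAP consumer reads from a
// test file's standard output.
class TapWriter {
  private lines: string[] = ["TAP version 13"];
  private count = 0;
  private failures = 0;

  // Record one assertion as an "ok" or "not ok" test point, numbered from 1.
  ok(passed: boolean, description: string): void {
    this.count += 1;
    if (!passed) this.failures += 1;
    this.lines.push(`${passed ? "ok" : "not ok"} ${this.count} - ${description}`);
  }

  // Lines starting with "#" are diagnostics: shown to humans, not counted as tests.
  diag(message: string): void {
    this.lines.push(`# ${message}`);
  }

  // TAP allows the plan ("1..N") at the end when the number of tests is only
  // known after running them, e.g. after a browser session has finished.
  done(): string {
    return this.lines.concat([`1..${this.count}`]).join("\n") + "\n";
  }

  // Number of failed test points, e.g. to derive a process exit code.
  get failed(): number {
    return this.failures;
  }
}

// Usage: two test points, one of them failing, plus a diagnostic line.
const tap = new TapWriter();
tap.ok(true, "server started");
tap.ok(false, "login page has title");
tap.diag("expected title to contain 'openQA'");
const output = tap.done();
```

In a real test file, the output would be printed, and the process would exit with a non-zero status when failed is above zero. How prove would invoke such a file (for example through its --exec option) is an assumption. Check it against the actual test setup.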

Goals for this Hack Week

- Get to know Playwright and its features

- Write a Node.js library to start and manage a Mojolicious web server

- Combine TAP and Playwright into unit tests

- Find a way to manage database fixtures for the Mojolicious application to be tested

- Have fun along the way
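
The second goal calls for a library that starts and manages the server. Here is a minimal TypeScript sketch of two building blocks it might need. The sketch rests on two assumptions. First, the application is started with the standard Mojolicious daemon command and its -l (listen) option. Second, the daemon prints a "Web application available at ..." line once it is ready. The function names and the script/openqa path are illustrative only:

```typescript
// Hypothetical helpers for a Node library that starts a Mojolicious app for
// browser tests. Assumption: the app is launched with Mojolicious' built-in
// "daemon" command, e.g. "perl script/openqa daemon -l http://127.0.0.1:9526".
interface ServerCommand {
  command: string;
  args: string[];
  url: string;
}

// Build the command line for a daemon listening on a single local port.
function daemonCommand(script: string, port: number, host: string = "127.0.0.1"): ServerCommand {
  const url = `http://${host}:${port}`;
  return { command: "perl", args: [script, "daemon", "-l", url], url };
}

// Assumption: once listening, the daemon prints a line like
// "Web application available at http://127.0.0.1:9526". Returns null until then.
function listenUrlFromOutput(output: string): string | null {
  const match = /Web application available at (\S+)/.exec(output);
  return match ? match[1] : null;
}

const cmd = daemonCommand("script/openqa", 9526);
const ready = listenUrlFromOutput("Web application available at http://127.0.0.1:9526\n");
```

The library could proceed in four steps:

1. Pass command and args to Node's child_process.spawn.
2. Read the child's output until listenUrlFromOutput returns a URL, or simply poll that URL.
3. Hand the URL to Playwright's page.goto.
4. Kill the process when the test is done.

Choosing a free port and loading database fixtures are not covered here.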

This project is part of:

Hack Week 20

Similar Projects

Kudos aka openSUSE Recognition Platform by lkocman

Description

Relevant blog post at news-o-o

I started the Kudos application shortly after Leap 16.0 to create a simple, friendly way to recognize people for their work and contributions to openSUSE. There's so much more to our community than just submitting requests in OBS or Gitea: we have translations (not only in Weblate), wiki edits, forum and social media moderation, infrastructure maintenance, booth participation, talks, manual testing, openQA test suites, and more!

Goals

Kudos under github.com/openSUSE/kudos with build previews (aka Netlify)

Have a kudos.opensuse.org instance running in production

Build an easy-to-contribute recognition platform for the openSUSE community: a place where everyone can send and receive appreciation for their work, across all areas of contribution.

In the future, we could even explore reward options such as vouchers for t-shirts or other community swag, small tokens of appreciation to make recognition more tangible.

Resources

(Do not create new badge requests during hackweek, unless you'll make the badge during hackweek)

- Source code: openSUSE/kudos

- Badges: openSUSE/kudos-badges

- Issue tracker: kudos/issues

MCP Perl SDK by kraih

Description

We've been using the MCP Perl SDK to connect openQA with AI. And while the basics are working pretty well, the SDK is not fully spec compliant yet. So let's change that!

Goals

- Support for Resources

- All response types (Audio, Resource Links, Embedded Resources...)

- Tool/Prompt/Resource update notifications

- Dynamic Tool/Prompt/Resource lists

- New authentication mechanisms
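
The SDK itself is Perl. This TypeScript sketch only illustrates the wire format some of these goals involve: a few of the JSON-RPC messages. The method names and content types come from recent revisions of the MCP specification and should be checked against it. The helper functions are hypothetical and not part of the MCP Perl SDK:

```typescript
// Shapes of a few Model Context Protocol messages related to the goals above,
// as JSON-RPC 2.0 objects. Method and content type names follow recent MCP
// spec revisions (assumed); the helper names are hypothetical.
type ListKind = "tools" | "prompts" | "resources";

interface McpNotification {
  jsonrpc: "2.0";
  method: string;
  params?: { [key: string]: unknown };
}

// Sent by a server whose tool/prompt/resource list changed ("dynamic lists").
function listChanged(kind: ListKind): McpNotification {
  return { jsonrpc: "2.0", method: `notifications/${kind}/list_changed` };
}

// Sent to clients subscribed to a resource when its content changed.
function resourceUpdated(uri: string): McpNotification {
  return { jsonrpc: "2.0", method: "notifications/resources/updated", params: { uri } };
}

// Tool result content items for some of the response types listed above.
function audioContent(base64Data: string, mimeType: string) {
  return { type: "audio", data: base64Data, mimeType };
}

function resourceLink(uri: string, name: string) {
  return { type: "resource_link", uri, name };
}

function embeddedTextResource(uri: string, text: string, mimeType: string = "text/plain") {
  return { type: "resource", resource: { uri, mimeType, text } };
}
```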

Resources

Create a page with all devel:languages:perl packages and their versions by tinita

Description

Perl projects now live in git: https://src.opensuse.org/perl

It would be useful to have an easy way to check which version of which Perl module is in devel:languages:perl. We also have meta overrides and patches for various modules, and it would be good to have them in a central place, so they are easier to look up and we can share them with other vendors.

I did an initial data dump a while ago: https://github.com/perlpunk/cpan-meta

But I never had the time to automate this.

I can also use the data to check whether updates are needed. Currently this uses data from download.opensuse.org, so there is some delay, and it depends on packages having been built.

Goals

- Have a script that updates a central repository (e.g. https://src.opensuse.org/perl/_metadata) with metadata by looking at https://src.opensuse.org/perl/_ObsPrj (checking whether there are any changes since the last run)

- Create an HTML page with the list of packages (using JavaScript and a table library to make it easily searchable)

Resources

Results

Day 1

- First part of the code which retrieves data from https://src.opensuse.org/perl/_ObsPrj with submodules and creates a YAML and a JSON file.

- Repo: https://github.com/perlpunk/opensuse-perl-meta

- Also a first version of the HTML is live: https://perlpunk.github.io/opensuse-perl-meta/
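
Day 1 reads the package list from the _ObsPrj repository via its git submodules. The actual code is Perl (see the repository above). As an illustration only, here is a TypeScript sketch that parses the standard .gitmodules file format into records ready for JSON export. Two assumptions apply: submodule names match package names, and the sample URLs are made up:

```typescript
interface Submodule {
  name: string;
  path?: string;
  url?: string;
  branch?: string;
}

// Parse the git config format of a .gitmodules file: [submodule "name"]
// sections followed by key = value lines. Comments (# or ;) and keys other
// than path, url and branch are skipped.
function parseGitmodules(text: string): Submodule[] {
  const modules: Submodule[] = [];
  let current: Submodule | null = null;
  for (const raw of text.split(/\r?\n/)) {
    const line = raw.trim();
    if (line === "" || line.startsWith("#") || line.startsWith(";")) continue;
    const header = /^\[submodule\s+"([^"]+)"\]$/.exec(line);
    if (header) {
      current = { name: header[1] };
      modules.push(current);
      continue;
    }
    const kv = /^(\w+)\s*=\s*(.*)$/.exec(line);
    if (kv && current) {
      const key = kv[1];
      const value = kv[2];
      if (key === "path" || key === "url" || key === "branch") current[key] = value;
    }
  }
  return modules;
}

// Hypothetical sample; real _ObsPrj entries may use different URLs.
const sample = [
  '[submodule "perl-Mojolicious"]',
  "\tpath = perl-Mojolicious",
  "\turl = ../perl-Mojolicious.git",
  '[submodule "perl-YAML-PP"]',
  "\tpath = perl-YAML-PP",
  "\turl = ../perl-YAML-PP.git",
].join("\n");
const packages = parseGitmodules(sample);
const json = JSON.stringify(packages.map((m) => m.name));
```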

Day 2

- The HTML page now has links to src.opensuse.org and shows the date of the last update, plus some short info at the top

- Code is now 100% covered by tests: https://app.codecov.io/gh/perlpunk/opensuse-perl-meta

- I used the modern Perl class feature, which makes Perl classes even nicer and shorter. See example and tests.

- I tried out the mocking feature of the modern Test2::V0 library, which provides call tracking. See example

- I tried out comparing data structures with the new Test2::V0 library. It lets you compare parts of the structure with the like function, which only compares the data that is mentioned in the expected data. See example
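
To make the idea of partial comparison concrete, here is a TypeScript sketch in the spirit of like. It is not Test2's implementation, which is Perl and handles many more cases. The simplifications are assumptions: extra keys in the actual data are ignored, arrays are checked only for the expected elements, and a regular expression in the expected data matches string values:

```typescript
// Partial structure comparison, loosely modelled on Test2::V0's `like`:
// only what appears in `expected` is checked.
function partialMatch(got: unknown, expected: unknown): boolean {
  // A RegExp in the expected data matches string values.
  if (expected instanceof RegExp) return typeof got === "string" && expected.test(got);

  // Arrays: compare element by element for the expected elements only.
  if (Array.isArray(expected)) {
    if (!Array.isArray(got) || got.length < expected.length) return false;
    const list = got as unknown[];
    return expected.every((item: unknown, i: number) => partialMatch(list[i], item));
  }

  // Objects: every expected key must exist and match; extra keys are ignored.
  if (expected !== null && typeof expected === "object") {
    if (got === null || typeof got !== "object" || Array.isArray(got)) return false;
    const actual = got as Record<string, unknown>;
    const wanted = expected as Record<string, unknown>;
    return Object.keys(wanted).every((key) => key in actual && partialMatch(actual[key], wanted[key]));
  }

  // Scalars: strict equality.
  return got === expected;
}

const record = { name: "perl-Foo", version: "1.23", deps: ["perl-Bar", "perl-Baz"], extra: true };
const matchesName = partialMatch(record, { name: "perl-Foo" });
const matchesParts = partialMatch(record, { version: /^1\./, deps: ["perl-Bar"] });
```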

Day 3

- Added various things to the table

- Dependencies column

- Show popup with info for cpanspec, patches and dependencies

- Added last date / commit to the data export.

Plan: With the added date / commit we can now daily check _ObsPrj for changes and only fetch the data for changed packages.
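
In practice, this plan comes down to comparing the per-package commit ids of two runs. A TypeScript sketch (the project itself is Perl) assumes the previous export can be loaded as a map from package name to commit id. All names are hypothetical:

```typescript
// Assumed shape of the exported data: package name -> last commit id.
type CommitMap = Record<string, string>;

interface ChangeSet {
  added: string[]; // new packages: fetch their data
  changed: string[]; // different commit: fetch their data again
  removed: string[]; // gone from _ObsPrj: drop them from the export
}

const has = (map: CommitMap, key: string): boolean =>
  Object.prototype.hasOwnProperty.call(map, key);

function diffCommits(previous: CommitMap, current: CommitMap): ChangeSet {
  const result: ChangeSet = { added: [], changed: [], removed: [] };
  for (const name of Object.keys(current).sort()) {
    if (!has(previous, name)) result.added.push(name);
    else if (previous[name] !== current[name]) result.changed.push(name);
  }
  for (const name of Object.keys(previous).sort()) {
    if (!has(current, name)) result.removed.push(name);
  }
  return result;
}

const changes = diffCommits(
  { "perl-A": "c1", "perl-B": "c2", "perl-C": "c3" },
  { "perl-A": "c1", "perl-B": "c9", "perl-D": "c4" },
);
// Only these packages need to be fetched again; unchanged ones keep their data.
const toFetch = changes.added.concat(changes.changed);
```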

Day 4

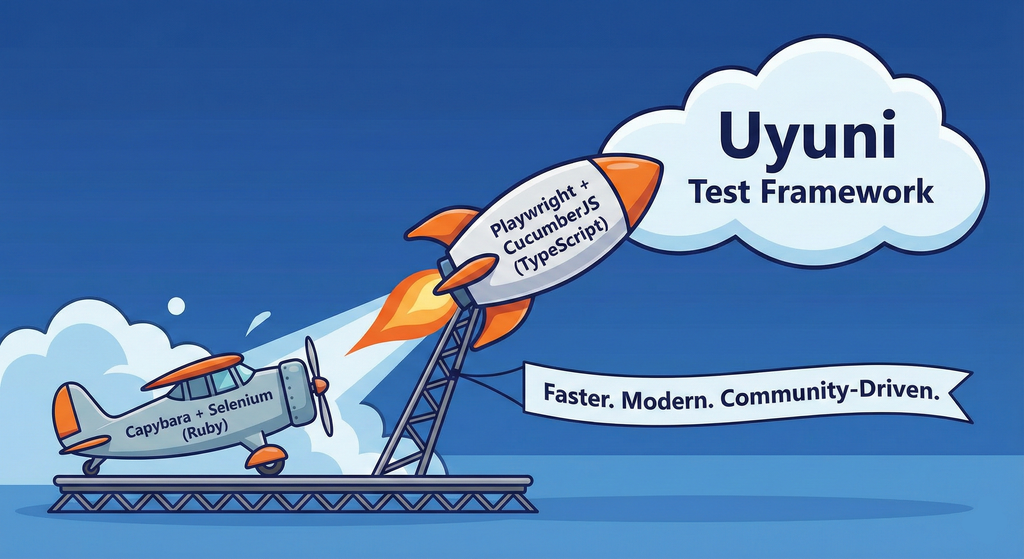

Move Uyuni Test Framework from Selenium to Playwright + AI by oscar-barrios

Description

This project aims to migrate the existing Uyuni Test Framework from Selenium to Playwright. The move will improve the stability, speed, and maintainability of our end-to-end tests by leveraging Playwright's modern features. We'll be rewriting the current Selenium code in Ruby to Playwright code in TypeScript, which includes updating the test framework runner, step definitions, and configurations. This is also necessary because we're moving from Cucumber Ruby to CucumberJS.

If you're still curious about the AI in the title, it was just a way to grab your attention. Thanks for your understanding.

Nah, let's be honest: AI helped a lot to vibe code a good part of the Ruby methods of the test framework, moving them to TypeScript, along with the migration from Capybara to Playwright. I've been using "Cline" as a plugin for the WebStorm IDE, with the Gemini API behind it.

Goals

- Migrate Core tests including Onboarding of clients

- Improve test reliability: Measure and confirm a significant reduction of flakiness.

- Implement a robust framework: Establish a well-structured and reusable Playwright test framework using CucumberJS.
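
One way to make a "significant reduction of flakiness" measurable is to rerun the same scenarios several times, first with the old Selenium stack and then with Playwright. Then compare the share of scenarios with mixed results. Here is a hypothetical TypeScript sketch of that metric. The scenario names and this definition of flaky are assumptions, not part of the project:

```typescript
// Hypothetical flakiness measure: run the same scenarios several times and
// call a scenario flaky when it both passed and failed across those runs.
type Outcome = "passed" | "failed";
type Run = Record<string, Outcome>; // scenario name -> outcome in one run

interface FlakinessReport {
  flaky: string[];
  total: number;
  rate: number; // flaky scenarios / all scenarios seen
}

function measureFlakiness(runs: Run[]): FlakinessReport {
  const seen: Record<string, { passed: boolean; failed: boolean }> = {};
  for (const run of runs) {
    for (const scenario of Object.keys(run)) {
      if (!seen[scenario]) seen[scenario] = { passed: false, failed: false };
      if (run[scenario] === "passed") seen[scenario].passed = true;
      else seen[scenario].failed = true;
    }
  }
  const scenarios = Object.keys(seen);
  const flaky = scenarios.filter((s) => seen[s].passed && seen[s].failed).sort();
  const rate = scenarios.length === 0 ? 0 : flaky.length / scenarios.length;
  return { flaky, total: scenarios.length, rate };
}

const report = measureFlakiness([
  { onboarding: "passed", login: "passed", patches: "failed" },
  { onboarding: "failed", login: "passed", patches: "failed" },
  { onboarding: "passed", login: "passed", patches: "failed" },
]);
```

A consistently failing scenario such as patches above indicates a real failure, not flakiness, so it does not count towards the rate.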

Resources

- Existing Uyuni Test Framework (Cucumber Ruby + Capybara + Selenium)

- My Template for CucumberJS + Playwright in TypeScript

- Started Hackweek Project

openQA tests needles elaboration using AI image recognition by mdati

Description

In the openQA test framework, the status of a target SUT is identified from a screenshot of its GUI or CLI terminal. The needles framework scans the many pictures in its repository, each associated with a given set of tags (strings), and compares specific smaller areas of each available image. To manage the needles, we currently need to store many screenshots and variants of GUI and CLI-terminal images, each one accompanied by a dedicated set of data references (JSON).
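
As a reference point, consider the classic approach the project wants to improve on. A needle's JSON lists tags and rectangular areas, and a screenshot is compared with the stored image inside those areas. The TypeScript sketch below mirrors that structure with a deliberately naive pixel comparison. openQA's real matching is implemented in os-autoinst and works differently. The field names (tags, and area with xpos, ypos, width, height, type) are assumed from the needle JSON, and the grayscale image type is hypothetical:

```typescript
// Reduced shape of an openQA needle JSON file (assumption: only "tags" and
// "area" are used here; real needles carry more fields).
interface NeedleArea { xpos: number; ypos: number; width: number; height: number; type: string }
interface Needle { name: string; tags: string[]; area: NeedleArea[] }

// Hypothetical grayscale screenshot: row-major, one value 0..255 per pixel.
interface GrayImage { width: number; height: number; pixels: number[] }

// Needles carrying the wanted tag, keeping only their "match" areas.
function candidates(needles: Needle[], tag: string): Needle[] {
  return needles
    .filter((needle) => needle.tags.indexOf(tag) !== -1)
    .map((needle) => ({ ...needle, area: needle.area.filter((a) => a.type === "match") }));
}

// Naive similarity of one area: 1 - mean absolute pixel difference / 255.
// Assumes both images have the same size and the area lies inside them.
function areaSimilarity(screen: GrayImage, reference: GrayImage, area: NeedleArea): number {
  let diff = 0;
  for (let y = area.ypos; y < area.ypos + area.height; y++) {
    for (let x = area.xpos; x < area.xpos + area.width; x++) {
      const i = y * screen.width + x;
      diff += Math.abs(screen.pixels[i] - reference.pixels[i]);
    }
  }
  return 1 - diff / (area.width * area.height * 255);
}

const blank: GrayImage = { width: 4, height: 4, pixels: new Array(16).fill(0) };
// Same image with one bright pixel at row 1, column 1 (index 5).
const shifted: GrayImage = { width: 4, height: 4, pixels: blank.pixels.map((v, i) => (i === 5 ? 255 : v)) };
const needles: Needle[] = [
  { name: "grub-menu", tags: ["grub2"], area: [{ xpos: 0, ypos: 0, width: 2, height: 2, type: "match" }] },
  { name: "desktop", tags: ["generic-desktop"], area: [] },
];
const [grub] = candidates(needles, "grub2");
const score = areaSimilarity(shifted, blank, grub.area[0]);
```

This style of matching is sensitive to every pixel inside the area, which is part of why many near-identical needle variants have to be stored.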

A smarter framework, using image recognition based on AI or other image processing tools, nowadays widely available, could improve the matching process and hopefully reduce time and errors during image verification and detection.

Goals

The main scope of this idea is to match a "graphical" image of the console or GUI status of a running openQA test (a screenshot of a shell console or an application GUI). The matching should use less time and fewer resources, and produce fewer errors in data preparation and use, than the current openQA needles framework. That is:

- having a given SUT (system under test) GUI or CLI-terminal screenshot, with a local distribution of pixels or text commands related to a running test status,

- we want to identify a desired target, e.g. a screen image status or data/commands context,

- based on AI/ML-pretrained archives containing objects, or other suitable processing tools,

- possibly also able to identify objects not present in the archive, e.g. by means of AI/ML mechanisms.

- the matching result should then be usable to continue the openQA test, in the same way as, and in place of, the result that the original openQA needles framework would have produced.

- We expect an improvement in matching time (less time), reliability of the expected result (fewer errors) and simpler archive maintenance when adding/removing objects (smaller DB and fewer actions).

Hackweek POC:

Main steps

- Phase 1 - Plan

- study the available tools

- prepare a plan for the process to build

- Phase 2 - Implement

- write and build a draft application

- Phase 3 - Data

- prepare the data archive from a subset of needles

- initialize/pre-train the base archive

- select a screenshot from the subset, removing/changing some part

- Phase 4 - Test

- run the POC application

- expect the image type to be identified in a good percentage of cases.

Resources

The first step of this project is identifying useful resources for the scope; some possibilities are:

- SUSE AI and other ML tools (e.g. TensorFlow)

- Tools able to manage images

- RPA test tools (e.g. Robot Framework)

- other.

Project references

- Repository: openqa-needles-AI-driven

openQA log viewer by mpagot

Description

*** Warning: Are You at Risk for VOMIT? ***

Do you find yourself staring at a screen, your eyes glossing over as thousands of lines of text scroll by? Do you feel a wave of text-based nausea when someone asks you to "just check the logs"?

You may be suffering from VOMIT (Verbose Output Mental Irritation Toxicity).

This dangerous, work-induced ailment is triggered by exposure to an overwhelming quantity of log data, especially from parallel systems. The human brain, not designed to mentally process 12 simultaneous autoinst-log.txt files, enters a state of toxic shock. It rejects the "Verbose Output," making it impossible to find the one critical error line buried in a 50,000-line sea of "INFO: doing a thing."

Before you're forced to rm -rf /var/log in a fit of desperation, we present the digital antacid.

Don't panic: we have the openQA Log Visualizer

This is the UI antidote for handling toxic log environments. It bravely dives into the chaotic, multi-machine mess of your openQA test runs, finds all the related, verbose logs, and force-feeds them into a parser.

Goals

Work on the existing POC openqa-log-visualizer, focusing on a few specific tasks:

- add support for more types of logs

- extend the configuration file syntax beyond the current one

- work on log parsing performance

Find some beta testers and collect feedback and ideas about features.

If time allows, evaluate other UI frameworks and solutions (something simpler to distribute and run, maybe lower level to gain performance).
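
The core of such a visualizer is merging the logs of all machines of a multi-machine job into one timeline. Below is a hypothetical TypeScript sketch. It assumes every log line starts with a bracketed ISO 8601 timestamp followed by a bracketed level, as in lines like "[2024-05-01T10:00:01.000000Z] [info] ...". It also assumes all files use the same timestamp format, so the strings sort chronologically. Lines without that prefix are treated as continuations of the previous entry:

```typescript
interface LogEntry { machine: string; time: string; level: string; text: string }

// Assumed line format: "[<timestamp>] [<level>] <message>".
const LINE = /^\[(\S+?)\]\s+\[(\w+)\]\s?(.*)$/;

function parseLog(machine: string, content: string): LogEntry[] {
  const entries: LogEntry[] = [];
  for (const line of content.split("\n")) {
    const m = LINE.exec(line);
    if (m) entries.push({ machine, time: m[1], level: m[2], text: m[3] });
    // Continuation lines (e.g. stack traces) belong to the previous entry.
    else if (entries.length > 0 && line !== "") entries[entries.length - 1].text += "\n" + line;
  }
  return entries;
}

// Merge the logs of all machines (machine name -> file content) into one
// timeline, optionally keeping only some levels. Equal timestamps keep
// their original relative order.
function mergeLogs(logs: Record<string, string>, levels?: string[]): LogEntry[] {
  const all: LogEntry[] = [];
  for (const machine of Object.keys(logs)) all.push(...parseLog(machine, logs[machine]));
  const filtered = levels ? all.filter((e) => levels.indexOf(e.level) !== -1) : all;
  return filtered
    .map((entry, index) => ({ entry, index }))
    .sort((a, b) => (a.entry.time < b.entry.time ? -1 : a.entry.time > b.entry.time ? 1 : a.index - b.index))
    .map((x) => x.entry);
}

const logs = {
  worker1: "[2024-05-01T10:00:02.000000Z] [debug] b\n[2024-05-01T10:00:04.000000Z] [error] d",
  worker2: "[2024-05-01T10:00:01.000000Z] [info] a\n[2024-05-01T10:00:03.000000Z] [error] c\n  continued",
};
const timeline = mergeLogs(logs);
const errors = mergeLogs(logs, ["error"]);
```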

Resources

Bring up Agama based tests for openSUSE Tumbleweed by szarate

Description

Agama has been around for some time already, and we have some tests for it on Tumbleweed. However, they are only in the development job group and are too few to be helpful in assessing the quality of a build.

This project aims at enabling and creating new test suites for the Agama flavor, using the already existing DVD and NET flavors as starting points.

Goals

- Introduce tests based on the Agama flavor in the main Tumbleweed job group

- Create Tumbleweed YAML schedules for the Agama installer and its own Jsonnet profile (the ones being used now are reused from Leap)

- Fan out tests that have long runtimes (i.e. tackle this ticket)

- Reduce redundancy in tests

Resources
