Make it faster!

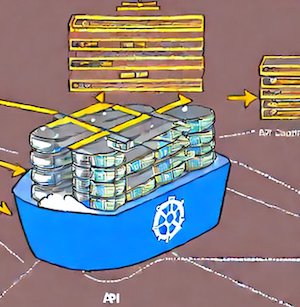

There are use cases that put the Kubernetes API under heavy load - using Rancher at scale can be one of them.

There are also use cases in which the connection to the Kubernetes API is not always available, or has limited bandwidth - using Rancher for edge use cases can be one of them.

This project aims to create a local cache that serves data from the Kubernetes API with good performance, and that keeps displaying the last good results when the connection is flaky.
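The "last good results" behaviour can be illustrated with a small sketch. This is not the project's code: it keeps results in memory rather than in SQLite, and `Fetcher`, `LastGoodCache` and `ErrOffline` are hypothetical names used only for this example.

```go
package main

import (
	"errors"
	"fmt"
	"sync"
)

// ErrOffline is a hypothetical error standing in for a lost API connection.
var ErrOffline = errors.New("upstream unavailable")

// Fetcher loads the current value for a key from the upstream API.
// It is a stand-in for a real Kubernetes API call (assumption).
type Fetcher func(key string) (string, error)

// LastGoodCache remembers the most recent successful result per key.
type LastGoodCache struct {
	mu    sync.Mutex
	data  map[string]string
	fetch Fetcher
}

// NewLastGoodCache wraps fetch with an in-memory last-good-result store.
func NewLastGoodCache(fetch Fetcher) *LastGoodCache {
	return &LastGoodCache{data: make(map[string]string), fetch: fetch}
}

// Get returns the fresh value when upstream answers. When upstream fails it
// falls back to the last good value and reports stale=true. It returns an
// error only if upstream fails and nothing was cached for the key.
func (c *LastGoodCache) Get(key string) (value string, stale bool, err error) {
	fresh, fetchErr := c.fetch(key)

	c.mu.Lock()
	defer c.mu.Unlock()

	if fetchErr == nil {
		c.data[key] = fresh
		return fresh, false, nil
	}
	if cached, ok := c.data[key]; ok {
		return cached, true, nil
	}
	return "", false, fmt.Errorf("no cached value for %q: %w", key, fetchErr)
}

func main() {
	online := true
	cache := NewLastGoodCache(func(key string) (string, error) {
		if !online {
			return "", ErrOffline
		}
		return "pod-list-v1", nil
	})

	v, stale, _ := cache.Get("pods")
	fmt.Println(v, stale)

	online = false // simulate a flaky connection
	v, stale, _ = cache.Get("pods")
	fmt.Println(v, stale)
}
```

Returning a `stale` flag lets a UI mark data as possibly outdated instead of showing an error.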

Goal for this Hackweek

Implement Proof-Of-Concept client-go components backed by SQLite.

https://github.com/moio/vai

Resources

Golang and ideally Kubernetes hackers are more than welcome!

Looking for hackers with the skills:

kubernetes k8s api golang go performance testautomation scalability

This project is part of:

Hack Week 22

Similar Projects

Exploring Modern AI Trends and Kubernetes-Based AI Infrastructure by jluo

Description

Build a solid understanding of the current landscape of Artificial Intelligence and how modern cloud-native technologies—especially Kubernetes—support AI workloads.

Goals

Use Gemini Learning Mode to guide the exploration, surface relevant concepts, and structure the learning journey:

- Gain insight into the latest AI trends, tools, and architectural concepts.

- Understand how Kubernetes and related cloud-native technologies are used in the AI ecosystem (model training, deployment, orchestration, MLOps).

Resources

Red Hat AI Topic Articles

- https://www.redhat.com/en/topics/ai

Kubeflow Documentation

- https://www.kubeflow.org/docs/

Q4 2025 CNCF Technology Landscape Radar report:

- https://www.cncf.io/announcements/2025/11/11/cncf-and-slashdata-report-finds-leading-ai-tools-gaining-adoption-in-cloud-native-ecosystems/

- https://www.cncf.io/wp-content/uploads/2025/11/cncfreporttechradar_111025a.pdf

Agent-to-Agent (A2A) Protocol

- https://developers.googleblog.com/en/a2a-a-new-era-of-agent-interoperability/

Self-Scaling LLM Infrastructure Powered by Rancher by ademicev0

Description

The Problem

Running LLMs can get expensive and complex pretty quickly.

Today there are typically two choices:

- Use cloud APIs like OpenAI or Anthropic. Easy to start with, but costs add up at scale.

- Self-host everything - set up Kubernetes, figure out GPU scheduling, handle scaling, manage model serving... it's a lot of work.

What if there was a middle ground?

What if infrastructure scaled itself instead of making you scale it?

Can we use existing Rancher capabilities like CAPI, autoscaling, and GitOps to make this simpler instead of building everything from scratch?

Project Repository: github.com/alexander-demicev/llmserverless

What This Project Does

A key feature is hybrid deployment: requests can be routed based on complexity or privacy needs. Simple or low-sensitivity queries can use public APIs (like OpenAI), while complex or private requests are handled in-house on local infrastructure. This flexibility allows balancing cost, privacy, and performance - using cloud for routine tasks and on-premises resources for sensitive or demanding workloads.

A complete, self-scaling LLM infrastructure that:

- Scales to zero when idle (no idle costs)

- Scales up automatically when requests come in

- Adds more nodes when needed, removes them when demand drops

- Runs on any infrastructure - laptop, bare metal, or cloud

Think of it as "serverless for LLMs" - focus on building, the infrastructure handles itself.

How It Works

A combination of open source tools working together:

Flow:

- Users interact with OpenWebUI (chat interface)

- Requests go to LiteLLM Gateway

- LiteLLM routes requests to:

  - Ollama (Knative) for local model inference (auto-scales pods)

  - Or cloud APIs for fallback

Technical talks at universities by agamez

Description

This project aims to empower the next generation of tech professionals by offering hands-on workshops on containerization and Kubernetes, with a strong focus on open-source technologies. By providing practical experience with these cutting-edge tools and fostering a deep understanding of open-source principles, we aim to bridge the gap between academia and industry.

For now, the scope is limited to Spanish universities, since we already have the contacts and have started some conversations.

Goals

- Technical Skill Development: equip students with the fundamental knowledge and skills to build, deploy, and manage containerized applications using open-source tools like Kubernetes.

- Open-Source Mindset: foster a passion for open-source software, encouraging students to contribute to open-source projects and collaborate with the global developer community.

- Career Readiness: prepare students for industry-relevant roles by exposing them to real-world use cases, best practices, and open-source in companies.

Resources

- Instructors: experienced open-source professionals with deep knowledge of containerization and Kubernetes.

- SUSE Expertise: leverage SUSE's expertise in open-source technologies to provide insights into industry trends and best practices.

A CLI for Harvester by mohamed.belgaied

Harvester does not officially come with a CLI tool; the user is supposed to interact with Harvester mostly through the UI. Though it is theoretically possible to use kubectl to interact with Harvester, manipulating Kubevirt YAML objects is not user friendly at all. Inspired by tools like multipass from Canonical, which make it easy to rapidly create one or multiple VMs, I began the development of Harvester CLI. It currently works, but it needs some love to be up-to-date with Harvester v1.0.2, and it needs some bug fixes and improvements as well.

Project Description

Harvester CLI is a command line interface tool written in Go, designed to simplify interfacing with a Harvester cluster as a user. It is especially useful for testing purposes as you can easily and rapidly create VMs in Harvester by providing a simple command such as:

harvester vm create my-vm --count 5

to create 5 VMs named my-vm-01 to my-vm-05.
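The naming scheme in this example can be sketched in a few lines of Go. This is only an illustration, not Harvester CLI's actual code: the `vmNames` helper is hypothetical, and the two-digit zero padding is inferred from the names my-vm-01 to my-vm-05.

```go
package main

import "fmt"

// vmNames returns count names of the form <base>-NN, numbered from 01.
// The two-digit padding is an assumption based on the example names.
func vmNames(base string, count int) []string {
	if count < 0 {
		count = 0
	}
	names := make([]string, 0, count)
	for i := 1; i <= count; i++ {
		names = append(names, fmt.Sprintf("%s-%02d", base, i))
	}
	return names
}

func main() {
	for _, name := range vmNames("my-vm", 5) {
		fmt.Println(name)
	}
}
```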

Harvester CLI is functional but needs a number of improvements: up-to-date functionality with Harvester v1.0.2 (some minor issues right now), changing the default behaviour to create an openSUSE VM instead of an Ubuntu VM, fixing some bugs, etc.

GitHub repo for Harvester CLI: https://github.com/belgaied2/harvester-cli

Done in previous Hackweeks

- Create a GitHub Actions pipeline to automatically integrate Harvester CLI to Homebrew repositories: DONE

- Automatically package Harvester CLI for openSUSE / Red Hat RPMs or DEBs: DONE

Goal for this Hackweek

The goal for this Hackweek is to bring Harvester CLI up-to-speed with latest Harvester versions (v1.3.X and v1.4.X), and improve the code quality as well as implement some simple features and bug fixes.

Some nice additions might be:

- Improve handling of namespaced objects

- Add features, such as network management or Load Balancer creation?

- Add more unit tests and, why not, e2e tests

- Improve CI

- Improve the overall code quality

- Test the program and create issues for it

Issue list is here: https://github.com/belgaied2/harvester-cli/issues

Resources

The project is written in Go and uses client-go, the Kubernetes Go client library, to communicate with the Harvester API (which is in fact a Kubernetes API).

Welcome contributions are:

- Testing it and creating issues

- Documentation

- Go code improvement

What you might learn

Harvester CLI might be interesting to you if you want to learn more about:

- GitHub Actions

- Harvester as a SUSE Product

- Go programming language

- Kubernetes API

- Kubevirt API objects (Manipulating VMs and VM Configuration in Kubernetes using Kubevirt)

The Agentic Rancher Experiment: Do Androids Dream of Electric Cattle? by moio

Rancher is a beast of a codebase. Let's investigate if the new 2025 generation of GitHub Autonomous Coding Agents and Copilot Workspaces can actually tame it.

The Plan

Create a sandbox GitHub Organization, clone in key Rancher repositories, and let the AI loose to see if it can handle real-world enterprise OSS maintenance - or if it just hallucinates new breeds of Kubernetes resources!

Specifically, throw "Agentic Coders" some typical tasks in a complex, long-lived open-source project, such as:

❥ The Grunt Work: generate missing GoDocs, unit tests, and refactorings. Rebase PRs.

❥ The Complex Stuff: fix actual (historical) bugs and feature requests to see if they can traverse the complexity without (too much) human hand-holding.

❥ Hunting Down Gaps: find areas lacking in docs, areas of improvement in code, dependency bumps, and so on.

If time allows, also experiment with Model Context Protocol (MCP) to give agents context on our specific build pipelines and CI/CD logs.

Why?

We know AI can write "Hello World." and also moderately complex programs from a green field. But can it rebase a 3-month-old PR with conflicts in rancher/rancher? I want to find the breaking point of current AI agents to determine if and how they can help us to reduce our technical debt, work faster and better. At the same time, find out about pitfalls and shortcomings.

The CONCLUSION!!!

A State of the Union document was compiled to summarize lessons learned this week. For more gory details, just read on the diary below!

Bugzilla goes AI - Phase 1 by nwalter

Description

This project, Bugzilla goes AI, aims to boost developer productivity by creating an autonomous AI bug agent during Hackweek. The primary goal is to reduce the time employees spend triaging bugs by integrating Ollama to summarize issues, recommend next steps, and push focused daily reports to a Web Interface.

Goals

To reduce employee time spent on Bugzilla by implementing an AI tool that triages and summarizes bug reports, providing actionable recommendations to the team via Web Interface.

Project Charter

Description

Project Achievements during Hackweek

In this file you can read about what we achieved during Hackweek.

HTTP API for nftables by crameleon

Background

The idea originated in https://progress.opensuse.org/issues/164060 and is about building a RESTful API which translates authorized HTTP requests to operations in nftables, possibly utilizing libnftables-json(5).

Originally, I started developing such an interface in Go, utilizing https://github.com/google/nftables. The conversion of string networks to nftables set elements was problematic (unfortunately no record of details), and I started a second attempt in Python, which made interaction much simpler thanks to native nftables Python bindings.

Goals

- Find and track the issue with google/nftables

- Revisit and polish the Go or Python code (prefer Go, but possibly depends on implementing missing functionality), primarily the server component

- Finish functionality to interact with nftables sets (retrieving and updating elements), which are of interest for the originating issue

- Align test suite

- Packaging

Resources

- https://git.netfilter.org/nftables/tree/py/src/nftables.py

- https://git.com.de/Georg/nftables-http-api (to be moved to GitHub)

- https://build.opensuse.org/package/show/home:crameleon:containers/pytest-nftables-container

Results

- Started new https://github.com/tacerus/nftables-http-api.

- First Go nftables issue was related to set elements needing to be added with different start and end addresses - coincidentally, this was recently discovered by someone else, who added a useful helper function for this: https://github.com/google/nftables/pull/342.

- Further improvements submitted: https://github.com/google/nftables/pull/347.
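To illustrate the set element issue mentioned in the results, here is a minimal sketch of turning a network string into a start and an end address. It does not reproduce the helper from the linked pull request and does not use google/nftables at all. The `intervalBounds` function is hypothetical, and it assumes the end element is exclusive (the first address after the network).

```go
package main

import (
	"fmt"
	"net/netip"
)

// intervalBounds returns the first address of the network and the first
// address after it. The exclusive end is an assumption about how interval
// set elements are expressed; adjust if an inclusive end is needed.
func intervalBounds(cidr string) (start, end netip.Addr, err error) {
	prefix, err := netip.ParsePrefix(cidr)
	if err != nil {
		return netip.Addr{}, netip.Addr{}, err
	}
	prefix = prefix.Masked() // clear host bits, e.g. 192.168.1.77/30 -> 192.168.1.76/30
	start = prefix.Addr()

	// Set every host bit to obtain the last address of the network.
	b := start.AsSlice()
	hostBits := len(b)*8 - prefix.Bits()
	for i := len(b) - 1; hostBits > 0; i-- {
		if hostBits >= 8 {
			b[i] = 0xff
			hostBits -= 8
		} else {
			b[i] |= byte(0xff) >> uint(8-hostBits)
			hostBits = 0
		}
	}
	last, _ := netip.AddrFromSlice(b)

	end = last.Next() // zero Addr when the network reaches the top of the address space
	if !end.IsValid() {
		return netip.Addr{}, netip.Addr{}, fmt.Errorf("%s has no address after its last one", cidr)
	}
	return start, end, nil
}

func main() {
	start, end, err := intervalBounds("10.0.0.0/24")
	if err != nil {
		fmt.Println("error:", err)
		return
	}
	fmt.Println(start, end)
}
```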

Side results

Upon starting to unify the structure and implementing more functionality, missing JSON output support was noticed for some subcommands in libnftables. Submitted patches here as well:

- https://lore.kernel.org/netfilter-devel/20251203131736.4036382-2-georg@syscid.com/T/#u

Play with the userfaultfd(2) system call and download on demand using HTTP Range Requests with Golang by rbranco

Description

userfaultfd(2) is a cool system call for handling page faults in user space. This should allow me to list the contents of an ISO or similar archive without downloading the whole thing. In theory, the userfaultfd(2) part can also be done with the PROT_NONE mprotect + SIGSEGV trick, for complete Unix portability, though it is reportedly slower.
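The download-on-demand half of the idea can be sketched with the Go standard library alone. This is only an illustration under assumptions: `fetchRange` and `newTestServer` are hypothetical helpers, the local test server stands in for a real mirror, and the userfaultfd(2) part is not shown.

```go
package main

import (
	"fmt"
	"io"
	"net/http"
	"net/http/httptest"
	"strings"
	"time"
)

// fetchRange downloads bytes [offset, offset+length) of url with a Range header.
func fetchRange(client *http.Client, url string, offset, length int64) ([]byte, error) {
	req, err := http.NewRequest(http.MethodGet, url, nil)
	if err != nil {
		return nil, err
	}
	// Range ends are inclusive, hence the -1.
	req.Header.Set("Range", fmt.Sprintf("bytes=%d-%d", offset, offset+length-1))

	resp, err := client.Do(req)
	if err != nil {
		return nil, err
	}
	defer resp.Body.Close()

	// 206 Partial Content means the server honoured the range.
	if resp.StatusCode != http.StatusPartialContent {
		return nil, fmt.Errorf("server did not honour the Range header: %s", resp.Status)
	}
	return io.ReadAll(resp.Body)
}

// newTestServer serves data from memory; http.ServeContent handles Range requests.
func newTestServer(data string) *httptest.Server {
	return httptest.NewServer(http.HandlerFunc(func(w http.ResponseWriter, r *http.Request) {
		http.ServeContent(w, r, "image.iso", time.Time{}, strings.NewReader(data))
	}))
}

func main() {
	srv := newTestServer("0123456789abcdef")
	defer srv.Close()

	chunk, err := fetchRange(srv.Client(), srv.URL, 4, 4)
	if err != nil {
		fmt.Println("error:", err)
		return
	}
	fmt.Printf("%s\n", chunk)
}
```

A page-fault handler could call something like `fetchRange` with the faulting page's offset and the page size, so that only the touched parts of the archive are downloaded.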

Goals

- Create my own library for userfaultfd(2) in Golang.

- Create my own library for HTTP Range Requests.

- Complete portability with Unix.

- Benchmarks.

- Contribute some tests to LTP.

Resources

- https://docs.kernel.org/admin-guide/mm/userfaultfd.html

- https://www.cons.org/cracauer/cracauer-userfaultfd.html

Create a go module to wrap happy-compta.fr by cbosdonnat

Description

https://happy-compta.fr is a simple bookkeeping tool for French works councils. While it does the job, it has no API to work with, and entering loads of operations is tedious.

Goals

Write a Go client module to be used as an API to programmatically manipulate the tool.

Writing an example tool to load data from a CSV file would be good too.

Q2Boot - A handy QEMU VM launcher by amanzini

Description

Q2Boot (Qemu Quick Boot) is a command-line tool that wraps QEMU to provide a streamlined experience for launching virtual machines. It automatically configures common settings like KVM acceleration, virtio drivers, and networking while allowing customization through both configuration files and command-line options.

The project was originally a personal utility written in D and has recently been rewritten in idiomatic Go. It lives in the repository https://github.com/ilmanzo/q2boot

Goals

Improve the project, test it in different scenarios, address issues and propose new features. It will benefit from some basic integration testing with small sample disk images.

Updates

- Dec 1, 2025 : refactor command line options, added structured logging. Released v0.0.2

- Dec 2, 2025 : added external monitor via telnet option

- Dec 4, 2025 : released v0.0.3 with architecture auto-detection

- Dec 5, 2025 : filing new issues and general polish. Designing E2E testing

Resources

Rewrite Distrobox in go (POC) by fabriziosestito

Description

Rewriting Distrobox in Go.

Main benefits:

- Easier to maintain and to test

- Adapter pattern for different container backends (LXC, systemd-nspawn, etc.)

Goals

- Build a minimal starting point with core commands

- Keep the CLI interface compatible: existing users shouldn't notice any difference

- Use a clean Go architecture with adapters for different container backends

- Keep dependencies minimal and binary size small

- Benchmark against the original shell script

Resources

- Upstream project: https://github.com/89luca89/distrobox/

- Distrobox site: https://distrobox.it/

- ArchWiki: https://wiki.archlinux.org/title/Distrobox

go-git: unlocking SHA256-based repository cloning ahead of git v3 by pgomes

Description

The go-git library implements the git internals in pure Go, so that any Go application can handle not only Git repositories, but also lower-level primitives (e.g. packfiles, idxfiles, etc) without needing to shell out to the git binary.

The focus for this Hackweek is to fast track key improvements for the project ahead of the upstream release of Git V3, which may take place at some point next year.

Goals

- Add support for cloning SHA256 repositories.

- Decrease memory churn for very large repositories (e.g. Linux Kernel repository).

- Cut the first alpha version for go-git/v6.

Stretch goals

- Review and update the official documentation.

- Optimise use of go-git in Fleet.

- Create RFC/example for go-git plugins to improve extensibility.

- Investigate performance bottlenecks for Blame and Status.

Resources

- https://github.com/go-git/go-git/

- https://go-git.github.io/docs/

Add support for todo.sr.ht to git-bug by mcepl

Description

I am a big fan of distributed issue tracking, and the best (and possibly the only credible) such issue tracker is now git-bug. It has bridges to other, centralized issue trackers, so users can download (and modify) issues on GitHub, GitLab, Launchpad and Jira. I am also a fan of SourceHut, which has its own issue tracker, so I would like to bridge the two. Alas, I don’t know much about the Go programming language (in which git-bug is written) and absolutely nothing about GraphQL (which todo.sr.ht uses for communication). AI to the rescue. I would like to vibe code (and eventually debug and make functional) a bridge to the SourceHut issue tracker.

Goals

Functional fix for https://github.com/git-bug/git-bug/issues/1024

Resources

- anybody who actually understands how GraphQL and authentication (OAuth2) on SourceHut work

Contribute to terraform-provider-libvirt by pinvernizzi

Description

The SUSE Manager (SUMA) teams' main tool for infrastructure automation, Sumaform, largely relies on terraform-provider-libvirt. That provider is also widely used by other teams, both inside and outside SUSE.

It would be good to help the maintainers of this project and give back to the community around it, after all the amazing work that has been already done.

If you're interested in any of infrastructure automation, Terraform, virtualization, tooling development, Go (...), it is also a good chance to learn a bit about them all by getting your hands on an interesting, complex project with real use cases.

Goals

- Get more familiar with Terraform provider development and libvirt bindings in Go

- Solve some issues and/or implement some features

- Get in touch with the community around the project

Resources

- CONTRIBUTING readme

- Go libvirt library in use by the project

- Terraform plugin development

- "Good first issue" list

Cluster API Provider for Harvester by rcase

Project Description

The Cluster API "infrastructure provider" for Harvester, also named CAPHV, makes it possible to use Harvester with Cluster API. This enables people and organisations to create Kubernetes clusters running on VMs created by Harvester using a declarative spec.

The project has been bootstrapped in HackWeek 23, and its code is available here.

Work done in HackWeek 2023

- Have an early working version of the provider available on Rancher Sandbox: DONE

- Demonstrated the created cluster can be imported using Rancher Turtles: DONE

- Stretch goal - demonstrate using the new provider with CAPRKE2: DONE and the templates are available on the repo

DONE in HackWeek 24:

- Add more Unit Tests

- Improve Status Conditions for some phases

- Add cloud provider config generation

- Testing with Harvester v1.3.2

- Template improvements

- Issues creation

DONE in 2025 (out of Hackweek)

- Support of ClusterClass

- Add to clusterctl community providers, you can add it directly with clusterctl

- Testing on newer versions of Harvester v1.4.X and v1.5.X

- Support for clusterctl generate cluster ...

- Improve Status Conditions to reflect current state of Infrastructure

- Improve CI (some bugs for release creation)

Goals for HackWeek 2025

- FIRST and FOREMOST, any topic that is important to you

- Add e2e testing

- Certify the provider for Rancher Turtles

- Add Machine pool labeling

- Add PCI-e passthrough capabilities.

- Other improvement suggestions are welcome!

Thanks to @isim and Dominic Giebert for their contributions!

Resources

Looking for help from anyone interested in Cluster API (CAPI) or who wants to learn more about Harvester.

This will be an infrastructure provider for Cluster API. Some background reading for the CAPI aspect:

RMT.rs: High-Performance Registration Path for RMT using Rust by gbasso

Description

The SUSE Repository Mirroring Tool (RMT) is a critical component for managing software updates and subscriptions, especially for our Public Cloud Team (PCT). In a cloud environment, hundreds or even thousands of new SUSE instances (VPS/EC2) can be provisioned simultaneously. Each new instance attempts to register against an RMT server, creating a "thundering herd" scenario.

We have observed that the current RMT server, written in Ruby, faces performance issues under this high-concurrency registration load. This can lead to request overhead, slow registration times, and outright registration failures, delaying the readiness of new cloud instances.

This Hackweek project aims to explore a solution by re-implementing the performance-critical registration path in Rust. The goal is to leverage Rust's high performance, memory safety, and first-class concurrency handling to create an alternative registration endpoint that is fast, reliable, and can gracefully manage massive, simultaneous request spikes.

The new Rust module will be integrated into the existing RMT Ruby application, allowing us to directly compare the performance of both implementations.

Goals

The primary objective is to build and benchmark a high-performance Rust-based alternative for the RMT server registration endpoint.

Key goals for the week:

- Analyze & Identify: Dive into the SUSE/rmt Ruby codebase to identify and map out the exact critical path for server registration (e.g., controllers, services, database interactions).

- Develop in Rust: Implement a functionally equivalent version of this registration logic in Rust.

- Integrate: Explore and implement a method for Ruby/Rust integration to "hot-wire" the new Rust module into the RMT application. This may involve using FFI, or libraries like rb-sys or magnus.

- Benchmark: Create a benchmarking script (e.g., using k6, ab, or a custom tool) that simulates the high-concurrency registration load from thousands of clients.

- Compare & Present: Conduct a comparative performance analysis (requests per second, latency, success/error rates, CPU/memory usage) between the original Ruby path and the new Rust path. The deliverable will be this data and a summary of the findings.

Resources

- RMT Source Code (Ruby): https://github.com/SUSE/rmt

- RMT Documentation: https://documentation.suse.com/sles/15-SP7/html/SLES-all/book-rmt.html

- Tooling & Stacks:

  - RMT/Ruby development environment (for running the base RMT)

  - Rust development environment (rustup, cargo)

- Potential Integration Libraries:

  - rb-sys: https://github.com/oxidize-rb/rb-sys

  - Magnus: https://github.com/matsadler/magnus

- Benchmarking Tools:

  - k6 (https://k6.io/)

  - ab (ApacheBench)

dynticks-testing: analyse perf / trace-cmd output and aggregate data by m.crivellari

Description

dynticks-testing is a project started years ago by Frederic Weisbecker. One of its features is to check the actual configuration (isolcpus, irqaffinity, etc.) and give feedback on it.

An important goal of this tool is to parse the output of trace-cmd / perf and provide more readable data, showing the duration of every event grouped by PID (also showing the CPU number, whether the task has been migrated, etc.).

An example of data captured on my laptop (incomplete!!):

-0 [005] dN.2. 20310.270699: sched_wakeup: WaylandProxy:46380 [120] CPU:005

-0 [005] d..2. 20310.270702: sched_switch: swapper/5:0 [120] R ==> WaylandProxy:46380 [120]

...

WaylandProxy-46380 [004] d..2. 20310.295397: sched_switch: WaylandProxy:46380 [120] S ==> swapper/4:0 [120]

-0 [006] d..2. 20310.295397: sched_switch: swapper/6:0 [120] R ==> firefox:46373 [120]

firefox-46373 [006] d..2. 20310.295408: sched_switch: firefox:46373 [120] S ==> swapper/6:0 [120]

-0 [004] dN.2. 20310.295466: sched_wakeup: WaylandProxy:46380 [120] CPU:004

Output of noise_parse.py:

Task: WaylandProxy Pid: 46380 cpus: {4, 5} (Migrated!!!)

Wakeup Latency Nr: 24 Duration: 89

Sched switch: kworker/12:2 Nr: 1 Duration: 6
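The migration detection shown in this output can be approximated with a short sketch. This is not how noise_parse.py is implemented (that tool is written in Python); it is a hypothetical Go illustration that only collects the CPUs seen per PID from the sample lines above and does not compute durations. The regular expressions assume the line layout of the excerpt.

```go
package main

import (
	"fmt"
	"regexp"
	"sort"
	"strconv"
)

// sampleLines are copied from the trace excerpt above.
var sampleLines = []string{
	"-0 [005] dN.2. 20310.270699: sched_wakeup: WaylandProxy:46380 [120] CPU:005",
	"-0 [005] d..2. 20310.270702: sched_switch: swapper/5:0 [120] R ==> WaylandProxy:46380 [120]",
	"WaylandProxy-46380 [004] d..2. 20310.295397: sched_switch: WaylandProxy:46380 [120] S ==> swapper/4:0 [120]",
	"-0 [006] d..2. 20310.295397: sched_switch: swapper/6:0 [120] R ==> firefox:46373 [120]",
	"firefox-46373 [006] d..2. 20310.295408: sched_switch: firefox:46373 [120] S ==> swapper/6:0 [120]",
	"-0 [004] dN.2. 20310.295466: sched_wakeup: WaylandProxy:46380 [120] CPU:004",
}

var (
	// Captures: task name, pid, target CPU.
	wakeupRe = regexp.MustCompile(`sched_wakeup: (.+):(\d+) \[\d+\] CPU:(\d+)`)
	// Captures: CPU of the event, next task name, next task pid.
	switchRe = regexp.MustCompile(`\[(\d+)\].*sched_switch: .* ==> (.+):(\d+) \[\d+\]`)
)

// TaskInfo records the CPUs on which a task was woken up or switched in.
type TaskInfo struct {
	Name string
	cpus map[int]bool
}

// CPUs returns the observed CPU numbers in ascending order.
func (t *TaskInfo) CPUs() []int {
	out := make([]int, 0, len(t.cpus))
	for c := range t.cpus {
		out = append(out, c)
	}
	sort.Ints(out)
	return out
}

// Migrated reports whether the task was seen on more than one CPU.
func (t *TaskInfo) Migrated() bool { return len(t.cpus) > 1 }

// parseTrace groups wakeup and switch-in events by PID.
func parseTrace(lines []string) map[int]*TaskInfo {
	tasks := make(map[int]*TaskInfo)
	record := func(name, pidStr, cpuStr string) {
		pid, err1 := strconv.Atoi(pidStr)
		cpu, err2 := strconv.Atoi(cpuStr)
		if err1 != nil || err2 != nil || pid == 0 {
			return // skip malformed fields and the per-CPU idle task (pid 0)
		}
		t, ok := tasks[pid]
		if !ok {
			t = &TaskInfo{Name: name, cpus: make(map[int]bool)}
			tasks[pid] = t
		}
		t.cpus[cpu] = true
	}
	for _, line := range lines {
		if m := wakeupRe.FindStringSubmatch(line); m != nil {
			record(m[1], m[2], m[3])
		} else if m := switchRe.FindStringSubmatch(line); m != nil {
			record(m[2], m[3], m[1])
		}
	}
	return tasks
}

func main() {
	tasks := parseTrace(sampleLines)
	pids := make([]int, 0, len(tasks))
	for pid := range tasks {
		pids = append(pids, pid)
	}
	sort.Ints(pids)
	for _, pid := range pids {
		t := tasks[pid]
		fmt.Printf("Task: %s Pid: %d cpus: %v migrated: %v\n", t.Name, pid, t.CPUs(), t.Migrated())
	}
}
```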

My first contribution was around Nov. 2024!

Goals

- add more features (eg cpuset)

- test / bugfix

Resources

- Frederic's public repository: https://git.kernel.org/pub/scm/linux/kernel/git/frederic/dynticks-testing.git/

- https://docs.kernel.org/timers/no_hz.html#testing

Progress

isolcpus and cpusets implemented and merged in master: dynticks-testing.git commit
