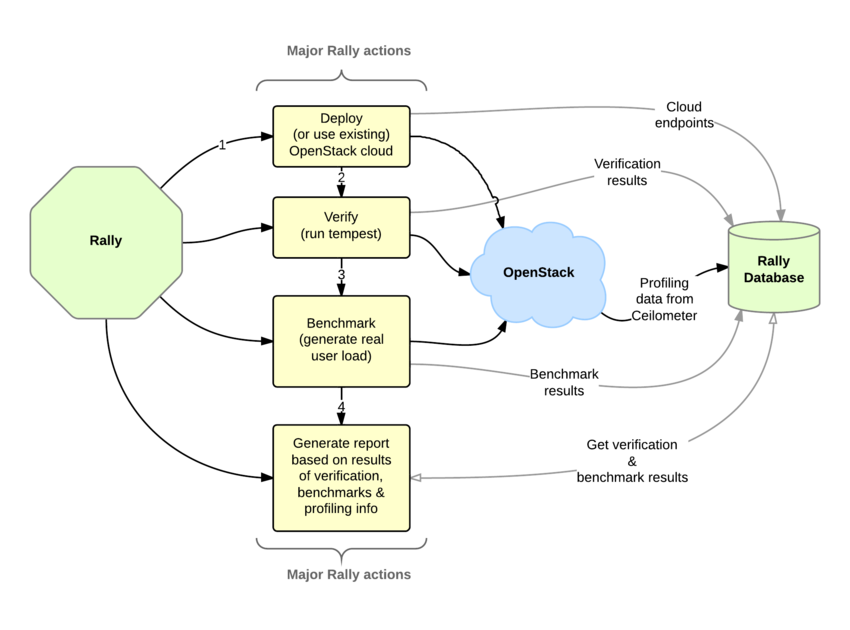

OpenStack is a very large ecosystem of cooperating services. Rally is a benchmarking tool that answers the question: “How does OpenStack work at scale?” To make this possible, Rally automates and unifies multi-node OpenStack deployment, cloud verification, benchmarking, and profiling. It does so in a pluggable way, making it possible to check whether OpenStack will work well on, say, a 1,000-server installation under high load. It can therefore serve as a basic tool for an OpenStack CI/CD system that continuously improves its SLA, performance, and stability.

The goal of this project is to use OpenStack Rally as one of our benchmarking tools for SUSE Cloud.

Rally Links

Steps:

- find out how we can effectively use OpenStack Rally for SUSE Cloud testing

- run Rally tests manually

- automate as much as possible

Results

Still needs to be done

New Ideas

This project is part of: Hack Week 15

Comments

almost 9 years ago by kbaikov

Hello,

If you are interested, you can use the Rally installation here: backup.cloudadm.qa.suse.de. Log in with the usual credentials. Rally is installed from the SLEopenstackmaster repo.

I already added our three QA hardware setups, which you can see using the command "rally deployment list". Use "rally deployment use " to switch to the correct deployment.

See the files qa[2-3]-deployment.json in /root for the parameters that I used when creating those.

/root/rally/samples/ contains the sample scenarios, so you can run any of them using a command such as "rally task start rally/samples/tasks/scenarios/nova/boot-and-delete.json". Make sure you use the correct regex for the image. Then generate a nice report using "rally task report --out output.html".

If you have any questions please do not hesitate to ask. Thank you.
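The workflow described in the comment above can be sketched as a small Python wrapper. This is only an illustration: the four Rally commands are the ones quoted in the comment, while the helper names and the deployment name `qa2` are hypothetical (the comment does not show the argument of `rally deployment use`, so passing a deployment name there is an assumption). The script defaults to a dry run, so it does not need Rally installed.

```python
import shlex
import subprocess


def rally_workflow(deployment, task_file, report_file="output.html"):
    """Return the Rally CLI calls from the comment, in order, as argv lists."""
    return [
        ["rally", "deployment", "list"],
        # Assumption: the deployment is selected by passing its name.
        ["rally", "deployment", "use", deployment],
        ["rally", "task", "start", task_file],
        ["rally", "task", "report", "--out", report_file],
    ]


def run(commands, dry_run=True):
    """Print each command; execute it only when dry_run is False."""
    for argv in commands:
        print("$ " + shlex.join(argv))
        if not dry_run:
            subprocess.run(argv, check=True)


commands = rally_workflow(
    deployment="qa2",  # hypothetical name; pick one shown by `rally deployment list`
    task_file="rally/samples/tasks/scenarios/nova/boot-and-delete.json",
)
run(commands)  # dry run: only prints the commands
```

Calling `run(commands, dry_run=False)` on the host where Rally is installed would execute the steps in sequence and stop at the first failing command.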

Similar Projects

Create a Cloud-Native policy engine with notifying capabilities to optimize resource usage by gbazzotti

Description

The goal of this project is to begin the initial phase of development of an all-in-one Cloud-Native Policy Engine that notifies resource owners when their resources infringe predetermined policies. This was inspired by a current issue in the CES-SRE Team where other solutions seemed to not exactly correspond to the needs of the specific workloads running on the Public Cloud Team space.

The initial architecture can be checked out on the Repository listed under Resources.

Among the features that will differentiate this project from other monitoring/notification systems:

- Pre-defined sensible policies written at the software level, avoiding the learning curve that comes with requiring users to write their own policies

- All-in-one functionality: logging, mailing, and all other actions work without installing any additional plugins/packages

- Easy account management, with all required configuration parsed from a single JSON file

- Eliminate integrations by not requiring metrics to go through a data aggregator
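To make the idea of built-in policies driven by a single JSON file more concrete, here is a minimal sketch. Everything in it is hypothetical and not taken from the project's repository: the JSON layout, the `idle_too_long` policy, the resource fields, and the function names are illustrative assumptions only.

```python
import json

# Hypothetical single-file configuration: account details plus thresholds.
CONFIG_JSON = """
{
  "account": {"name": "example-account", "default_owner": "team@example.com"},
  "policies": {"max_idle_days": 14}
}
"""


def idle_too_long(resource, limits):
    """Pre-defined policy: flag resources idle for longer than the limit."""
    return resource["idle_days"] > limits["max_idle_days"]


# Policies ship with the engine; users only tune thresholds in the JSON file.
POLICIES = {"idle_too_long": idle_too_long}


def evaluate(resources, config):
    """Return one notification per (resource, infringed policy) pair."""
    notifications = []
    for resource in resources:
        owner = resource.get("owner") or config["account"]["default_owner"]
        for name, check in POLICIES.items():
            if check(resource, config["policies"]):
                notifications.append(
                    {"to": owner, "resource": resource["id"], "policy": name}
                )
    return notifications


config = json.loads(CONFIG_JSON)
resources = [
    {"id": "vm-1", "owner": "alice@example.com", "idle_days": 30},
    {"id": "vm-2", "owner": None, "idle_days": 2},
]
for note in evaluate(resources, config):
    print(f"notify {note['to']}: {note['resource']} infringes {note['policy']}")
```

In this sketch the notification is only printed; a real engine would hand the same record to its built-in mailer or logger.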

Goals

- Create a minimal working prototype following the workflow specified in the documentation

- Provide instructions on installation/usage

- Work on email notifying capabilities

Resources

Testing and adding GNU/Linux distributions on Uyuni by juliogonzalezgil

Join the Gitter channel! https://gitter.im/uyuni-project/hackweek

Uyuni is a configuration and infrastructure management tool that saves you time and headaches when you have to manage and update tens, hundreds or even thousands of machines. It also manages configuration, can run audits, build image containers, monitor and much more!

Currently there are a few distributions that are completely untested on Uyuni or SUSE Manager (AFAIK), or that have not been tested for a long time. It would be interesting to find out how hard it is to work with them and, if possible, fix whatever is broken.

For newcomers, the easiest distributions are those based on DEB or RPM packages. Distributions with other package formats are doable, but will require adapting the Python and Java code to be able to sync and analyze such packages (and if salt does not support those packages, it will need changes as well). So if you want a distribution with other packages, make sure you are comfortable handling such changes.

No developer experience? No worries! We had non-developer contributors in the past, and we are ready to help as long as you are willing to learn. If you don't want to code at all, you can also help us prepare the documentation after someone else has the initial code ready, or you could also help with testing :-)

The idea is to test Salt (including bootstrapping with the bootstrap script) and Salt-ssh clients.

To consider that a distribution has basic support, we should cover at least (points 3-6 are to be tested for both salt minions and salt ssh minions):

1. Reposync (this will require using spacewalk-common-channels and adding channels to the .ini file)

2. Onboarding (salt minion from UI, salt minion from bootstrap script, and salt-ssh minion) (this will probably require adding OS to the bootstrap repository creator)

3. Package management (install, remove, update...)

4. Patching

5. Applying any basic salt state (including a formula)

6. Salt remote commands

- Bonus point: Java part for product identification, and monitoring enablement

- Bonus point: sumaform enablement (https://github.com/uyuni-project/sumaform)

- Bonus point: Documentation (https://github.com/uyuni-project/uyuni-docs)

- Bonus point: testsuite enablement (https://github.com/uyuni-project/uyuni/tree/master/testsuite)

If something is breaking: we can try to fix it, but the main idea is to research how well supported it is right now. Beyond that, it's up to each project member how much to hack :-)

- If you don't have knowledge about some of the steps: ask the team

- If you still don't know what to do: switch to another distribution and keep testing.

This card is for EVERYONE, not just developers. Seriously! We had people from other teams who were not developers helping, and they added support for Debian and new SUSE Linux Enterprise and openSUSE Leap versions :-)

In progress/done for Hack Week 25

Guide

We started writing a Guide: Adding a new client GNU Linux distribution to Uyuni at https://github.com/uyuni-project/uyuni/wiki/Guide:-Adding-a-new-client-GNU-Linux-distribution-to-Uyuni, to make things easier for everyone, especially those not too familiar with Uyuni or not technical.

openSUSE Leap 16.0

The distribution we all love!

https://en.opensuse.org/openSUSE:Roadmap#DRAFTScheduleforLeap16.0

Current Status: We started last year, and it's complete now for Hack Week 25! :-D

- [W] Reposync (this will require using spacewalk-common-channels and adding channels to the .ini file). NOTE: Done; the client tools for SLMicro6 are used, as those for SLE16.0/openSUSE Leap 16.0 are not available yet

- [W] Onboarding (salt minion from UI, salt minion from bootstrap script, and salt-ssh minion) (this will probably require adding OS to the bootstrap repository creator)

- [W] Package management (install, remove, update...). Works, even reboot requirement detection

openQA tests needles elaboration using AI image recognition by mdati

Description

In the openQA test framework, to identify the status of a target SUT image (a screenshot of a GUI or CLI terminal), the needles framework scans the many pictures in its repository, each associated with a given set of tags (strings) and with selected smaller areas of the image. To manage needles, we currently need to store many screenshots, variants of GUI and CLI-terminal images, each accompanied by a dedicated set of data references (JSON).

A smarter framework, using image recognition based on AI or other image-processing tools that are now widely available, could improve the matching process and hopefully reduce time and errors during image verification and detection.
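For orientation, the area-based matching described above can be approximated with a few lines of Python. This is a deliberately simplified sketch, not openQA's actual matcher: it compares each tagged area only at its exact position, uses plain pixel differences on tiny hand-made grayscale grids, and the 0.96 threshold, the dictionary layout, and all names are assumptions made for illustration.

```python
def crop(image, area):
    """Cut the rectangle described by a needle area out of a 2D grayscale image."""
    rows = image[area["ypos"]:area["ypos"] + area["height"]]
    return [row[area["xpos"]:area["xpos"] + area["width"]] for row in rows]


def similarity(a, b):
    """Return 1.0 for identical pixel grids, lower values for larger differences."""
    pixels_a = [p for row in a for p in row]
    pixels_b = [p for row in b for p in row]
    diff = sum(abs(x - y) for x, y in zip(pixels_a, pixels_b))
    return 1.0 - diff / (255 * len(pixels_a))


def match_needles(screenshot, needles, threshold=0.96):
    """Return the tags of every needle whose areas all match the screenshot."""
    tags = []
    for needle in needles:
        if all(
            similarity(crop(screenshot, area), crop(needle["image"], area)) >= threshold
            for area in needle["areas"]
        ):
            tags.extend(needle["tags"])
    return tags


# Tiny 4x4 grayscale "screenshots" (0 = black, 255 = white), purely illustrative.
login_screen = [[0, 0, 0, 0], [0, 255, 255, 0], [0, 255, 255, 0], [0, 0, 0, 0]]
blank_screen = [[0] * 4 for _ in range(4)]

needles = [
    {
        "tags": ["login-prompt"],
        "image": login_screen,
        "areas": [{"xpos": 1, "ypos": 1, "width": 2, "height": 2}],
    }
]

print(match_needles(login_screen, needles))
print(match_needles(blank_screen, needles))
```

The sketch shows why the approach needs one stored reference image per screen variant: any variant whose pixels differ beyond the threshold requires its own needle, which is the maintenance cost an AI-based matcher would try to reduce.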

Goals

The main scope of this idea is to match a "graphical" image of the console or GUI status of a running openQA test (a screenshot of a shell console or application GUI), using less time and fewer resources, and with fewer errors in data preparation and use, than the current openQA needles framework. That is:

- given a SUT (system under test) GUI or CLI-terminal screenshot, with a local distribution of pixels or text commands related to the status of a running test,

- we want to identify a desired target, e.g. a screen image status or a data/command context,

- based on AI/ML-pretrained archives containing objects, or on other suitable image-processing tools,

- possibly able to also identify objects not present in the archive, i.e. by means of AI/ML mechanisms.

- The matching result should then be adapted so the openQA test can continue, in place of the result that the original openQA needles framework would have produced.

- We expect an improvement in matching time (less time), in the reliability of the expected result (fewer errors), and simpler archive maintenance when adding/removing objects (smaller DB and fewer actions).

Hackweek POC:

Main steps

- Phase 1 - Plan

  - study the available tools

  - prepare a plan for the process to build

- Phase 2 - Implement

  - write and build a draft application

- Phase 3 - Data

  - prepare the data archive from a subset of needles

  - initialize/pre-train the base archive

  - select a screenshot from the subset, removing/changing some part

- Phase 4 - Test

  - run the POC application

  - expect the image type to be identified in a good percentage of cases.

Resources

The first step of this project is identifying useful resources for the scope; some possibilities are:

- SUSE AI and other ML tools (e.g. TensorFlow)

- Tools able to manage images

- RPA test tools (e.g. Robot Framework)

- other.

Project references

- Repository: openqa-needles-AI-driven

Multimachine on-prem test with opentofu, ansible and Robot Framework by apappas

Description

A long time ago I explored using the Robot Framework for testing. A big deficiency compared to our openQA setup is that bringing up and configuring the connection to a test machine is out of scope.

Nowadays we have a way¹ to deploy SUTs outside openQA, but we only use it for cloud tests in conjunction with openQA. Using knowledge gained from that project, I am going to try to create a test scenario that replicates an openQA test, but this time including the deployment and setup of the SUT.

Goals

Create a simple multimachine test scenario with the support server and SUT all created by the Robot Framework.

Resources

- https://github.com/SUSE/qe-sap-deployment

- terraform-libvirt-provider

Bring to Cockpit + System Roles capabilities from YAST by miguelpc

Bring to Cockpit + System Roles features from YAST

Cockpit and System Roles have been added to SLES 16. There are several capabilities in YAST that are not yet present in Cockpit and System Roles. We will follow the principle of "automate first, UI later", with System Roles being the automation component and Cockpit the UI one.

Goals

The idea is to implement service configuration in System Roles and then add a UI to manage these in Cockpit. Some capabilities will require a specific Cockpit module, as they will interact with a resource that is already configured.

Resources

A plan on capabilities missing and suggested implementation is available here: https://docs.google.com/spreadsheets/d/1ZhX-Ip9MKJNeKSYV3bSZG4Qc5giuY7XSV0U61Ecu9lo/edit

Linux System Roles:

- https://linux-system-roles.github.io/

- https://build.opensuse.org/package/show/openSUSE:Factory/ansible-linux-system-roles (package on SLE 16: ansible-linux-system-roles)

First meeting Hackweek catchup

- Monday, December 1 · 11:00 – 12:00

- Time zone: Europe/Madrid

- Google Meet link: https://meet.google.com/rrc-kqch-hca

Contribute to terraform-provider-libvirt by pinvernizzi

Description

The SUSE Manager (SUMA) teams' main tool for infrastructure automation, Sumaform, largely relies on terraform-provider-libvirt. That provider is also widely used by other teams, both inside and outside SUSE.

It would be good to help the maintainers of this project and give back to the community around it, after all the amazing work that has already been done.

If you're interested in any of infrastructure automation, Terraform, virtualization, tooling development, Go (...), this is also a good chance to learn a bit about them all by getting your hands on an interesting, complex project with real use cases.

Goals

- Get more familiar with Terraform provider development and libvirt bindings in Go

- Solve some issues and/or implement some features

- Get in touch with the community around the project

Resources

- CONTRIBUTING readme

- Go libvirt library in use by the project

- Terraform plugin development

- "Good first issue" list

Help Create A Chat Control Resistant Turnkey Chatmail/Deltachat Relay Stack - Rootless Podman Compose, OpenSUSE BCI, Hardened, & SELinux by 3nd5h1771fy

Description

The Mission: Decentralized & Sovereign Messaging

FYI: If you have never heard of "Chatmail", you can visit their site here, but simply put, it can be thought of as the underlying protocol/platform that decentralized messengers like DeltaChat use for their communications. Do not confuse it with the honeypot-looking, non-open-source, paid-for product with better SEO that directs you to chatmailsecure(dot)com

In an era of increasing centralized surveillance by unaccountable bad actors (aka BigTech), "Chat Control," and the erosion of digital privacy, the need for sovereign communication infrastructure is critical. Chatmail is a pioneering initiative that bridges the gap between classic email and modern instant messaging, offering metadata-minimized, end-to-end encrypted (E2EE) communication that is interoperable and open.

However, unless you are a seasoned sysadmin, the current recommended deployment method of a Chatmail relay is rigid, fragile, difficult to properly secure, and effectively takes over the entire host the "relay" is deployed on.

Why This Matters

A simple, host agnostic, reproducible deployment lowers the entry cost for anyone wanting to run a privacy‑preserving, decentralized messaging relay. In an era of perpetually resurrected chat‑control legislation threats, EU digital‑sovereignty drives, and many dangers of using big‑tech messaging platforms (Apple iMessage, WhatsApp, FB Messenger, Instagram, SMS, Google Messages, etc...) for any type of communication, providing an easy‑to‑use alternative empowers:

- Censorship resistance - No single entity controls the relay; operators can spin up new nodes quickly.

- Surveillance mitigation - End‑to‑end OpenPGP encryption ensures relay operators never see plaintext.

- Digital sovereignty - Communities can host their own infrastructure under local jurisdiction, aligning with national data‑policy goals.

By turning the Chatmail relay into a plug‑and‑play container stack, we enable broader adoption, foster a resilient messaging fabric, and give developers, activists, and hobbyists a concrete tool to defend privacy online.

Goals

As I indicated earlier, this project aims to drastically simplify the deployment of a Chatmail relay. By converting this architecture into a portable, containerized stack using Podman and openSUSE base container images, we can allow anyone to deploy their own censorship-resistant, privacy-preserving communications node in minutes.

Our goal for Hack Week: package every component into containers built on openSUSE/MicroOS base images, initially orchestrated with a single container-compose.yml (podman-compose compatible). The stack will:

- Run on any host that supports Podman (including optimizations and enhancements for SELinux‑enabled systems).

- Allow network decoupling by refactoring configurations to move from file-system constrained Unix sockets to internal TCP networking, allowing containers to achieve stricter isolation.

- Utilize enhanced security with SELinux: by using purpose-built utilities such as udica, we can quickly generate custom SELinux policies for the container stack, ensuring strict confinement superior to standard/typical Docker deployments.

- Allow the use of bind or remote mounted volumes for shared data (/var/vmail, DKIM keys, TLS certs, etc.).

- Replace the local DNS server requirement with a remote DNS‑provider API for DKIM/TXT record publishing.
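As a rough illustration of the DKIM/TXT publishing step, the sketch below builds the record that a remote DNS-provider client would have to create. The record name (`<selector>._domainkey.<domain>`), the `v=DKIM1; k=rsa; p=...` value, and the 255-byte limit per TXT string follow the DKIM and DNS conventions; the selector `mail`, the domain `chat.example.org`, the payload field names, and the function names are hypothetical, and no real provider API is called.

```python
import base64


def dkim_record_name(selector, domain):
    """DNS name under which a DKIM public key is published."""
    return f"{selector}._domainkey.{domain}"


def dkim_record_value(public_key_der):
    """TXT value for an RSA key: tag list with the base64-encoded public key."""
    encoded = base64.b64encode(public_key_der).decode("ascii")
    return f"v=DKIM1; k=rsa; p={encoded}"


def txt_chunks(value, size=255):
    """Split an ASCII TXT value into strings of at most 255 bytes each."""
    return [value[i:i + size] for i in range(0, len(value), size)]


def build_publish_request(selector, domain, public_key_der):
    """Payload a DNS-provider client could send; the field names are made up."""
    return {
        "type": "TXT",
        "name": dkim_record_name(selector, domain),
        "strings": txt_chunks(dkim_record_value(public_key_der)),
    }


# Placeholder bytes stand in for a real DER-encoded public key.
request = build_publish_request("mail", "chat.example.org", b"\x00" * 300)
print(request["name"])
print([len(s) for s in request["strings"]])
```

Keeping the record construction separate from the provider call means the same payload could be handed to whichever DNS API a relay operator uses.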

By delivering a turnkey, host agnostic, reproducible deployment, we lower the barrier for individuals and small communities to launch their own chatmail relays, fostering a decentralized, censorship‑resistant messaging ecosystem that can serve DeltaChat users and/or future services adopting this protocol.

Resources

- The links included above

- https://chatmail.at/doc/relay/

- https://delta.chat/en/help

- Project repo -> https://codeberg.org/EndShittification/containerized-chatmail-relay